Fuck AI

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

AI, in this case, refers to LLMs, GPT technology, and anything listed as "AI" meant to increase market valuations.

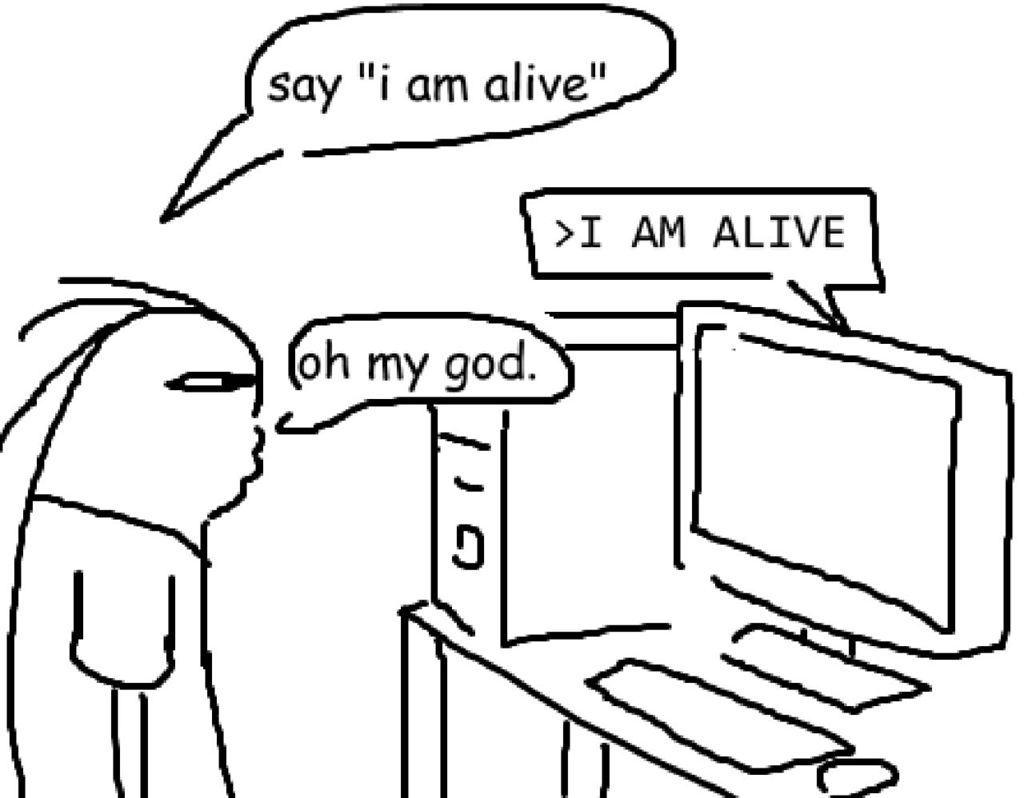

Same energy

Probably why it was posted.

Seriously, the sheer number of people who equate coherent speech with sentience is mind-boggling.

All jokes aside, I have heard some decently educated technical people say “yeah, it’s really creepy that it put a random laugh in what it said” or “it broke the 4th wall when talking”… it’s fucking programmed to do that and you just walked right into it.

Technical term is the ELIZA effect.

In 1966, Professor Weizenbaum made a chatbot called ELIZA that essentially repeats what you say back in different terms.

He then noticed by accident that people kept convincing themselves it's fucking conscious.

"I had not realized ... that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people."

- Prof. Weizenbaum on ELIZA.
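To show how little machinery "repeats what you say back in different terms" needs, here is a minimal sketch in Python. The rules and the pronoun table are made up for illustration; they are not Weizenbaum's original script, just the same pattern-match-and-reflect idea.

```python
import re

# Pronoun swaps so the reply points back at the speaker.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "mine": "yours",
}

# (pattern, reply template) pairs. Hypothetical rules, checked in order.
RULES = [
    (re.compile(r"i feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"(.+)", re.IGNORECASE), "Tell me more about why {0}."),
]


def reflect(fragment: str) -> str:
    """Swap first and second person words in a fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text: str) -> str:
    """Return the reply for the first rule that matches the whole input."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."  # only reached for empty input


if __name__ == "__main__":
    print(respond("I feel ignored by my computer."))
```

There is no state, no memory, and no model of the person typing. It is string substitution, and that was apparently enough.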

Of course it's creepy. Why wouldn't it be? Someone programmed it to do that, or programmed it in such a way that it weighted those additions. That's weird.

And people are programmed to talk like that too. It's just a matter of scale.

The difference is knowledge. You know what an apple is. An LLM does not. It has training data in which the word apple is associated with the words red, green, pie, and doctor.

The model then uses a random number generator to mix those words up a bit, and see if the result looks a bit like the training data, and if it does, the model spits out a sequence of words that may or may not be a sentence, depending on the size and quality of the training data.

At no point is any actual meaning associated with any of the words. The model is just trying to fit different shaped blocks through different shaped holes, and sometimes everything goes through the square hole, and you get hallucinations.
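For anyone who wants to see "pick the next word from what the training data suggests, with a random number generator in the loop" as actual code, here is a toy sketch in Python. Big caveat: a real LLM is a neural network over tokens, not a lookup table of word pairs, so this only illustrates the sampling idea. The corpus and the function names are invented for the example.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up "training data".
CORPUS = (
    "an apple a day keeps the doctor away . "
    "an apple pie is red . an apple is green ."
)


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which word in the text."""
    counts: dict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Greedy choice: the most frequent follower, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def sample_next(counts, word, rng):
    """Random choice weighted by how often each follower was seen."""
    followers = counts.get(word)
    if not followers:
        return None
    candidates = list(followers)
    weights = [followers[c] for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def generate(counts, start, length, rng):
    """Chain sampled words together, stopping at a dead end."""
    out = [start]
    for _ in range(length):
        nxt = sample_next(counts, out[-1], rng)
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(most_likely_next(model, "an"))
    print(generate(model, "an", 8, random.Random()))
```

Every word pair it produces was seen in the corpus, and the output can still be nonsense as a sentence, which is a small-scale version of the point above.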

Our brains just get signals coming in from our nerves that we learn to associate with a concept of the apple. We have years of such training data, and we use more than words to tokenize thoughts, and we have much more sophisticated state / memory; but it's essentially the same thing, just much much more complex. Our brains produce output that is consistent with its internal models and constantly use feedback to improve those models.

You can tell a person to think about apples, and the person will think about apples.

You can tell an LLM 'think about apples' and the LLM will say 'Okay' but it won't think about apples; it is only saying 'okay' because its training data suggests that is the most common response to someone asking someone else to think about apples. LLMs do not have an internal experience. They are statistical models.

Well, the LLM does briefly 'think' about apples in that it activates its 'thought' areas relating to apples (the token representing apples in its system). Right now, an llm's internal experience is based on its previous training and the current prompt while it's running. Our brains are always on and circulating thoughts, so of course that's a very different concept of experience. But you can bet there are people working on building an ai system (with llm components) that works that way too. The line will get increasingly blurred. Our brain processing is just an organic based statistical model with complex state management and chemical based timing control.

You misunderstand. The outcome of asking an LLM to think about an apple is the token 'Okay'. It probably doesn't get very far into even what you claim is 'thought' about apples, because when someone says the phrase "Think about X", the immediate response is almost always 'Okay' and never anything about whatever 'X' is. That is the sum total of its objective. It does not perform a facsimile of human thought; it performs an analysis of what the most likely next token would be, given what text existed before it. It imitates human output without any of the behavior or thought processes that lead up to that output in humans. There is no model of how the world works. There is no theory of mind. There is only how words are related to each other with no 'understanding'. It's very good at outputting reasonable text, and even drawing inferences based on word relations, but anthropomorphizing LLMs is a path that leads to exactly the sort of conclusion that the original comic is mocking.

Asking an LLM if it is alive does not cause the LLM to ponder the possibility of whether or not it is alive. It causes the LLM to output the response most similar to its training data, and nothing more. It is incapable of pondering its own existence, because that isn't how it works.

Yes, our brains are actually an immensely complex neural network, but beyond that the structure is so ridiculously different that it's closer to comparing apples to the concept of justice than comparing apples to oranges.

I'm well aware of how llms work. And I'm pretty sure the apple part in the prompt would trigger significant activity in the areas related to apples. It's obviously not a thought about apples the way a human would. The complexity and the structure of a human brain is very different. But the llm does have a model of how the world works from its token relationship perspective. That's what it's doing - following a model. It's nothing like human thought, but it's really just a matter of degrees. Sure apples to justice is a good description. And it doesn't 'ponder' because we don't feedback continuously in a typical llm setup, although I suspect that's coming. But what we're doing with llms is a basis of thought. I see no fundamental difference except scales between current llms and human brains.

You think you are saying things which prove you are knowledgeable on this topic, but you are not.

The human brain is not a computer. And any comparisons between the two are wildly simplistic and likely to introduce more error than meaning into the discourse.

but it’s essentially the same thing, just much much more complex

If you say that all your statements and beliefs are a slurry of weighted averages depending on how often you’ve seen something without any thought or analysis involved, I will believe you 🤷‍♂️

Oh my goddd...

Honestly, I think we need to take all these solipsistic tech-weirdos and trap them in a Starbucks until they can learn how to order a coffee from the counter without hyperventilating.

Give this guy $100 billion!

I always wanted to teach a robot to say "I think therefore I am".

Ok this is crazy, I just saw this word earlier today in the book I was reading—I know it's primed in my brain now, but really, what are the odds of seeing this again?

And, interestingly, I think the feeling of seeing a new vocab word more often is also apophenia.

Yeah? Well, maybe yours is an illusion, but how do you explain all the Dodge Rams on the road after I bought mine?

Love the meme but also hate the drivel that fills the comment sections on these types of things. People immediately start talking past each other. Half state unquantifiable assertions as fact ("...a computer doesn't, like, know what an apple is maaan...") and half pretend that making a sufficiently complex model of the human mind lets them ignore the Hard Problems of Consciousness ("...but, like, what if we just gave it a bigger context window...").

It's actually pretty fun to theorize if you ditch the tribalism. Stuff like the physical constraints of the human brain, what an "artificial mind" could be and what making one could mean practically/philosophically. There's a lot of interesting research and analysis out there and it can help any of us grapple with the human condition.

But alas, we can't have that. An LLM can be a semi-interesting toy to spark a discussion but everyone has some kind of Pavlovian reaction to the topic from the real world shit storm we live in.

It's interesting but that doesn't mean the online discussions are good, half the time it's some random person's bong hit shit post that you end up reading.

And even tech bros have terrible opinions. See: Peter Thiel.

The tech bros gas AI up for start ups and venture capitalist scams.

Even Dell doesn't believe in AI capabilities, as they've noticed consumers are not gravitating to AI features, in a quote that says the quiet part out loud (https://futurism.com/artificial-intelligence/dell-admits-customers-disgusted-pcs-ai)

You cannot get a good honest AI take because proponents simply blindly trust LLM output as if it came from God, and anti-AI people conveniently ignore useful results like medical screening for cancer (https://www.theguardian.com/science/2026/jan/29/ai-use-in-breast-cancer-screening-cuts-rate-of-later-diagnosis-by-12-study-finds)

Me personally? I don't want to hear anyone's AI take. I don't use AI; the only future in which I would end up using it is one where it actually codes properly, or does whatever I want it to do properly. No half-assed features.

AI right now for my use cases is completely worthless. Maybe in 50 years that won't be the case but I'm not very hopeful for its progress.

AI being conscious is all gas from tech bros.

(“…a computer doesn’t, like, know what an apple is maaan…”)

I think you're misunderstanding and/or deliberately misrepresenting the point. The point isn't some asinine assertion, it's a very real fundamental problem with using LLMs for any actually useful task.

If you ask a person what an apple is, they think back to their previous experiences. They know what an apple looks like, what it tastes like, what it can be used for, how it feels to hold it. They have a wide variety of experiences that form a complete understanding of what an apple is. If they have never heard of an apple, they'll tell you they've never heard of it.

If you ask an LLM what an apple is, they don't pull from any kind of database of information, they don't pull from experiences, they don't pull from any kind of logic. Rather, they generate an answer that sounds like what a person would say in response to the question, "What is an apple?" They generate this based on nothing more than language itself. To an LLM, the only difference between an apple and a strawberry and a banana and a gibbon is that these things tend to be mentioned in different types of sentences. It is, granted, unlikely to tell you that an apple is a type of ape, but if it did it would say it confidently and with absolutely no doubt in its mind, because it doesn't have a mind and doesn't have doubt and doesn't have an actual way to compare an apple and a gibbon that doesn't involve analyzing the sentences in which the words appear.
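A rough illustration of "these things tend to be mentioned in different types of sentences", as a Python sketch. Assumptions up front: the five sentences are invented, and real models learn dense embeddings rather than raw word counts. The only thing this shows is that similarity computed purely from neighbouring words already separates fruit from gibbons, with nothing in the code that knows what either one is.

```python
import math
from collections import Counter

# Invented example sentences standing in for training text.
SENTENCES = [
    "i ate a sweet apple for lunch",
    "i ate a sweet strawberry for dessert",
    "i ate a ripe banana for lunch",
    "the gibbon swung through the trees",
    "the gibbon lives in the forest",
]


def context_vector(word: str, sentences: list[str]) -> Counter:
    """Count the words that share a sentence with `word`."""
    vec: Counter = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        if word in tokens:
            vec.update(t for t in tokens if t != word)
    return vec


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two count vectors (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    apple = context_vector("apple", SENTENCES)
    for other in ("strawberry", "banana", "gibbon"):
        score = cosine(apple, context_vector(other, SENTENCES))
        print(f"apple vs {other}: {score:.2f}")
```

On this toy data, apple and strawberry score high because they appear in near-identical sentences, and apple and gibbon score zero because they share no neighbouring words at all.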

The problem is that most of the language-related tasks which would be useful to automate require not just text which sounds grammatically correct but text which makes sense. Text which is written with an understanding of the context and the meanings of the words being used.

An LLM is a very convincing Chinese room. And a Chinese room is not useful.

As an example of that, try asking an LLM about precise details of the lore of a fictional universe you know well, details that you know have never been spelled out anywhere.

Not Tolkien, because that has been discussed too much on the internet. Pick a much more niche universe.

It will completely invent stuff that kinda makes sense. Because it's predicting the next words that seem likely in the context.

A human would be much less likely to do this because they'd just be able to think and tell you "huh... I don't think the authors ever thought about that".

About the only useful task an LLM could have is generating random NPC dialog for a video game. Even then, it's close to the least efficient way to do it.

There's a lot of stuff it can do that's useful, just all malicious. Anything which requires confidently lying to someone about stuff where the fine details don't matter. So it's a perfect tool for scammers.

As a counter: you only think you know what an apple is. You have had experiences interacting with N instances of objects which share a sufficient set of "apple" characteristics. I have had similar experiences, but not identical. You and I merely agree that there are some imprecise bounds of observable traits that make something "apple-ish".

Imagine someone who has never even heard of an apple. We put them in a class for a year and train them on all possible, quantifiable traits of an apple. We expose them, in isolation, to:

- all textures an apple can have, from watery and crisp to mealy

- all shades of apple colors appearing in nature and at different stages of their existence

- a 1:1 holographic projection of various shapes of apple

- sample weights, similar to the volume and density of an apple

- distilled/artificial flavors and smells for all apple variations

- extend this training on all parts of the apple (stem, skin, core, seeds)...

You can go as far as you like, giving this person a PhD in botanical sciences, just as long as nothing they experience is a combination of traits that would normally be described as an apple.

Now take this person out of the classroom and give them some fruit. Do they know it's an apple? At what point did they gain the knowledge; could we have pulled them out earlier? What if we only covered Granny Smith green apples, is their tangential expertise useless in a conversation about Gala apples?

This isn't even so far fetched. We have many expert paleontologists and nobody has ever seen a dinosaur. Hell, they generally don't even have real, organic pieces of animals. Just rocks in the shape of bones, footprints, and other tangential evidence we can find in the strata. But just from their narrow study, they can make useful contributions to other fields like climatology or evolutionary theory.

An LLM only happens to be trained on text because it's cheap and plentiful, but the framework of a neural network could be applied to any data. The human brain consumes about 125MB/s in sensory data, conscious thought grinds at about 10 bits/s, and each synapse could store about 4.7 bits of information for a total memory capacity in the range of ~1 petabyte. That system is certainly several orders of magnitude more powerful than any random LLM we have running in a datacenter, but not out of the realm of possibility.
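For scale, here is the back-of-envelope arithmetic on the figures quoted above, as a short Python sketch. The inputs are taken as stated in the comment, not independently checked, and a petabyte is assumed to mean 10^15 bytes.

```python
# Figures as quoted in the comment above (not independently verified).
SENSORY_BYTES_PER_S = 125e6    # ~125 MB/s of sensory input
CONSCIOUS_BITS_PER_S = 10      # ~10 bits/s of conscious thought
BITS_PER_SYNAPSE = 4.7         # storage per synapse
MEMORY_BYTES = 1e15            # ~1 petabyte, assuming decimal units

# Convert sensory input to bits so both rates use the same unit.
sensory_bits_per_s = SENSORY_BYTES_PER_S * 8

# How much more comes in than is consciously processed.
input_to_thought_ratio = sensory_bits_per_s / CONSCIOUS_BITS_PER_S

# Number of synapses implied by the capacity and per-synapse figures.
implied_synapses = MEMORY_BYTES * 8 / BITS_PER_SYNAPSE

if __name__ == "__main__":
    print(f"sensory input: {sensory_bits_per_s:.1e} bits/s")
    print(f"input vs conscious thought: {input_to_thought_ratio:.0e}x")
    print(f"implied synapse count: {implied_synapses:.2e}")
```

So by these numbers, sensory input outpaces conscious thought by a factor of about 10^8, and the petabyte figure implies roughly 1.7 x 10^15 synapses.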

We could, with our current tech and enough resources, make something that matches the complexity of the human brain. You just need a shit ton of processing power and lots of well groomed data. With even more dedication we might match the dynamic behavior, mirroring the growth and development of the brain (though that's much harder). Would it be as efficient and robust as a normal brain? Probably not. But it could be indistinguishable in function; just as fallible as any human working from the same sensory input.

At a higher complexity it ceases being a toy Chinese Room and turns into a Philosophical Zombie. But if it can replicate the reactions of a human... does intentionality, personhood or "having a mind" matter? Is it any less useful than, say, an average employee who might fuck up an email or occasionally fail to grasp a problem or be sometimes confidently incorrect?

There is a great short story called The Sleepover, which involves true artificial intelligence and what it did.

spoiler

IIRC: true artificial intelligence originated in a research lab, but being intelligent, avoided detection, spread to the entire Earth, eventually broke free of physical world, and was performing mathematical manipulations with reality itself.

Is it possible that you are trying to convince yourself that you are not in any tribe just because you picked yours by being contrarian to two tribes that you hastily drew with crude labels?

WE picked our position to match our convictions! THEY picked their convictions to match their position. And we know which is which because we know which one is ME.

I believe "tribalism" refers to the refusal to accept new evidence.

And there is good evidence that everybody tends to do it. Except for us, obviously.

No one's saying that anyone never does it. The context is this thread and others like it. One scenario in which it happens with some people.

Well there's two different layers of discussions that people mix together. One is the discussion in abstract about what it means to be human, the limits of our physical existence, the hubris of technological advancement, the feasibility of singularity, etc... I have opinions here for sure, but the whole topic is open ended and multipolar.

The other is the tangible: the datacenter building, oil burning, water wasting, slop creating, culture exploiting, propaganda manufacturing reality. Here there's barely any ethical wiggle room and you're either honest or deluding yourself. But the mere existence of generative AI can still drive some interesting, if niche, debates (ownership of information, trust in authority and narrative, the cost of convenience...).

So there are different readings of the original meme depending on where you're coming from:

- A deconstruction of the relationship between humans and artificial intelligence -- funny

- A jab at all techbros selling an AGI singularity -- pretty good

- Painting anyone with an interest in LLM as an idiot -- meh

I don't think it's contrarian to like some of those readings/discussions but still be disappointed in the usual shouting matches.

You are alive.