The developer of an LLM image description service for the fediverse has (temporarily?) turned it off due to concerns from a blind person.

Link to the thread in question

Good for them

Good for them. Not quite abandoning the project and deleting it, but it's a good move from them nonetheless.

CW: Slop, body humor, Minions

So my boys received Minion Fart Rifles for Christmas from people who should have known better. The toys are made up of a compact fog machine combined with a vortex gun and a speaker. The fog machine component is fueled by a mixture of glycerin and distilled water that comes in two scented varieties: banana and farts. The guns make tidy little smoke rings that can stably deliver a payload tens of feet in still air.

Anyway, as soon as they were fired up, Ammo Anxiety reared its ugly head, so I went in search of a refill recipe. I searched "Minions Vortex Gun Refill Recipe", and goog returned this fartifact:

194 dB, you say? Alvin Meshits? The rabbit hole beckoned.

The "source links" were mostly unrelated except one, which was a reddit thread that lazily cited ChatGPT generating the same text almost verbatim in response to the question, "What was the loudest ever fart?"

Luckily, a bit of detectoring turned up the true source, an ancient Uncyclopedia article's "Fun Facts" section:

https://en.uncyclopedia.co/wiki/Fartium

The loudest fart ever recorded occurred on May 16, 1972 in Madeline, Texas by Alvin Meshits. The blast maintained a level of 194 decibels for one third of a second. Mr. Meshits now has recurring back pain as a result of this feat.

Welcome to the future!

Somewhat interestingly, 194 decibels is the loudest sound that can physically be sustained in Earth's atmosphere. At that point the "bottom" of the pressure wave is a vacuum. An enormous blast, such as a huge meteor impact, a supervolcano eruption, or a very large nuclear weapon, can exceed that limit, but only for the initial pulse.
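The 194 dB figure falls out of the standard sound-pressure-level formula, SPL = 20·log10(p / p_ref), with the usual 20 µPa reference for air. A minimal sketch, assuming standard sea-level pressure (101,325 Pa) and treating a swing of one full atmosphere as the wave's amplitude, since the trough can't drop below vacuum:

```python
import math

P_REF = 20e-6       # reference pressure for SPL in air: 20 micropascals
P_ATM = 101_325.0   # assumed standard sea-level atmospheric pressure, in pascals

def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in decibels for a pressure amplitude in pascals."""
    return 20 * math.log10(pressure_pa / P_REF)

# The trough of the wave can at most drop to vacuum, so the swing is capped
# at one atmosphere above and below ambient.
print(f"peak-based limit: {spl_db(P_ATM):.1f} dB")                          # about 194.1
print(f"RMS of a sine at that peak: {spl_db(P_ATM / math.sqrt(2)):.1f} dB")  # about 191.1
```

The commonly quoted 194 dB uses the peak amplitude; if you instead plug in the RMS value of a pure sine with that peak, you land about 3 dB lower.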

I suspect a 194 dB fart would blow the person in half.

Vacuum-driven total intestinal eversion, nobody's ever seen anything like it

Apparently there's another brand that describes its scents as "(rich durian & mellow cheese)"

A rival gang of "AI" "researchers" dare to make fun of Big Yud's latest book and the LW crowd are Not Happy

Link to takedown: https://www.mechanize.work/blog/unfalsifiable-stories-of-doom/ (heartbreaking: the worst people you know made some good points)

When we say Y&S’s arguments are theological, we don’t just mean they sound religious. Nor are we using “theological” to simply mean “wrong”. For example, we would not call belief in a flat Earth theological. That’s because, although this belief is clearly false, it still stems from empirical observations (however misinterpreted).

What we mean is that Y&S’s methods resemble theology in both structure and approach. Their work is fundamentally untestable. They develop extensive theories about nonexistent, idealized, ultrapowerful beings. They support these theories with long chains of abstract reasoning rather than empirical observation. They rarely define their concepts precisely, opting to explain them through allegorical stories and metaphors whose meaning is ambiguous.

Their arguments, moreover, are employed in service of an eschatological conclusion. They present a stark binary choice: either we achieve alignment or face total extinction. In their view, there’s no room for partial solutions, or muddling through. The ordinary methods of dealing with technological safety, like continuous iteration and testing, are utterly unable to solve this challenge. There is a sharp line separating the “before” and “after”: once superintelligent AI is created, our doom will be decided.

LW announcement, check out the karma scores! https://www.lesswrong.com/posts/Bu3dhPxw6E8enRGMC/stephen-mcaleese-s-shortform?commentId=BkNBuHoLw5JXjftCP

Update: a LessWrong post attempts to debunk the piece with inline comments here:

https://www.lesswrong.com/posts/i6sBAT4SPCJnBPKPJ/mechanize-work-s-essay-on-unfalsifiable-doom

Leading to such hilarious howlers as

Then solving alignment could be no easier than preventing the Germans from endorsing the Nazi ideology and commiting genocide.

Ummm, pretty sure engaging in a new world war and getting their country bombed to pieces was not on most Germans' agenda. A small group of ideologues managed to seize complete control of the state, and did their very best to prevent widespread knowledge of the Holocaust from getting out. At the same time they used the power of the state to ruthlessly suppress any opposition.

rejecting Yudkowsky-Soares' arguments would require that ultrapowerful beings are either theoretically impossible (which is highly unlikely)

ohai begging the question

A few comments...

We want to engage with these critics, but there is no standard argument to respond to, no single text that unifies the AI safety community.

Yeah, Eliezer had a solid decade and a half to develop a presence in academic literature. Nick Bostrom at least sort of tried to formalize some of the arguments but didn't really succeed. I don't think they could have succeeded, given how speculative their stuff is, but if they had, review papers could have tried to consolidate them and then people could actually respond to the arguments fully. (We all know how Eliezer loves to complain about people not responding to his full set of arguments.)

Apart from a few brief mentions of real-world examples of LLMs acting unstable, like the case of Sydney Bing, the online appendix contains what seems to be the closest thing Y&S present to an empirical argument for their central thesis.

But in fact, none of these lines of evidence support their theory. All of these behaviors are distinctly human, not alien.

Even granting that Anthropic's "research" tends to be rigged scenarios acting as marketing hype, without peer review or academic levels of quality, at the very least it (usually) involves actual AI systems that actually exist. It is pretty absurd the extent to which Eliezer has ignored everything about how LLMs actually work (or even how they hypothetically might work with major foundational developments) in favor of repeating the same scenario he came up with in the mid-2000s. He hasn't even tried mathematical analyses of which classes of problems are computationally tractable to a smart enough entity and which remain intractable. (titotal has written some blog posts about this for materials science; tl;dr: even if magic nanotech were possible, an AGI would need lots of experimentation and couldn't just figure it out with simulations. There's also the LessWrong post explaining how chaos theory and slight imperfections in measurement make a game of pinball unpredictable past a few ricochets.)
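The pinball argument is about sensitive dependence on initial conditions: a tiny measurement error gets multiplied at every bounce. A pinball table doesn't fit in a few lines, so this sketch swaps in the logistic map at r = 4, a textbook chaotic system (an illustrative stand-in, not the pinball post's own analysis), and counts how many steps it takes for two starting points 10^-10 apart to disagree by more than 0.1:

```python
def logistic(x: float, r: float = 4.0) -> float:
    """One step of the logistic map; r = 4 is in the fully chaotic regime."""
    return r * x * (1.0 - x)


def steps_until_divergence(x0: float, eps: float = 1e-10,
                           threshold: float = 0.1, max_steps: int = 200):
    """Iterate two trajectories eps apart; return the step where they differ by > threshold."""
    a, b = x0, x0 + eps
    for step in range(1, max_steps + 1):
        a, b = logistic(a), logistic(b)
        if abs(a - b) > threshold:
            return step
    return None  # no divergence within max_steps


print(steps_until_divergence(0.2))
```

For this map the error roughly doubles per step on average, so measuring ten times more precisely buys only about three more steps of predictability. Simulating harder doesn't get you out of that; you need better measurements, i.e. experiments.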

The lesswrong responses are stubborn as always.

That's because we aren't in the superintelligent regime yet.

Y'all aren't beating the theology allegations.

guess the USA invasion of Venezuela puts a flashing neon crosshair on Taiwan.

An extremely ridiculous notion that I am forced to consider right now is that it matters whether the CCP invades before or after the "AI" bubble bursts. The "AI" bubble is the biggest misallocation of capital in history, which means people like the MAGA government are desperate to wring some water, anything, out of those stones. And for various economic reasons it isn't currently feasible to produce chips anywhere other than Taiwan. No chips, no "AI" datacenters, and they promised a lot of AI datacenters. In fact, most of the US GDP "growth" in 2025 was promises of AI datacenters; if you don't count these promises, the country is already in recession.

Basically, I think if the CCP invades before the AI bubble pops, MAGA would escalate to full-blown war against China to nab Taiwan as a protectorate. And if we all die in nuclear fallout caused to protect chatbot profits, I will be so over this whole thing.

A journalist attempts to ask the question "Why Do Americans Hate A.I.?", and shows their inability to tell actually useful tech from lying machines:

Bonus points for gaslighting the public on billionaires' behalf:

These worries are real. But in many cases, they're about changes that haven't come yet.

Of all the statements that he could have made, this is one of the least self-aware. It is always the pro-AI shills who constantly talk about how AI is going to be amazing and have all these wonderful benefits next year (curve go up). I will also count the doomers who are useful idiots for the AI companies.

The critics are the ones who look at what AI is actually doing. The informed critics look at the unreliability of AI for any useful purpose, the psychological harm it has caused to many people, the absurd amount of resources being dumped into it, the flimsy financial house of cards supporting it, and at the root of it all, the delusions of the people who desperately want it to all work out so they can be even richer. But even people who aren't especially informed can see all the slop being shoved down their throats while not seeing any of the supposed magical benefits. Why wouldn't they fear and loathe AI?

The mods were heavily downvoted and criticized for pulling the rug out from under the community, as well as for simultaneously modding pro-A.I.-relationship subs. One mod admitted:

"(I do mod on r/aipartners, which is not a pro-sub. Anyone who posts there should expect debate, pushback, or criticism on what you post, as that is allowed, but it doesn’t allow personal attacks or blanket comments, which applies to both pro and anti AI members. Calling people delusional wouldn’t be allowed in the same way saying that ‘all men are X’ or whatever wouldn’t. It’s focused more on a sociological issues, and we try to keep it from devolving into attacks.)"

A user, heavily upvoted, replied:

You’re a fucking mod on ai partners? Are you fucking kidding me?

It goes on and on like this: as of now, the post has amassed 343 comments. Mostly it's angry subscribers of the sub, while a few users from pro-A.I. subreddits keep praising the mods. Most of the users agree that brigading has to stop, but don't understand why that means a sub called COGSUCKERS should suddenly be neutral toward or accepting of LLM relationships. Bear in mind that r/aipartners, which one of the mods also moderates, does not allow users to call such relationships "delusional". The most upvoted comments in this shitstorm:

"idk, some pro schmuck decided we were hating too hard 💀 i miss the days shitposting about the egg" https://www.reddit.com/r/cogsuckers/comments/1pxgyod/comment/nwb159k/

Happy new year, everybody. They want to ban fireworks here next year, so people set fire to parts of some Dutch cities.

Unrelated to that, let 2026 be the year of the butlerian jihad.

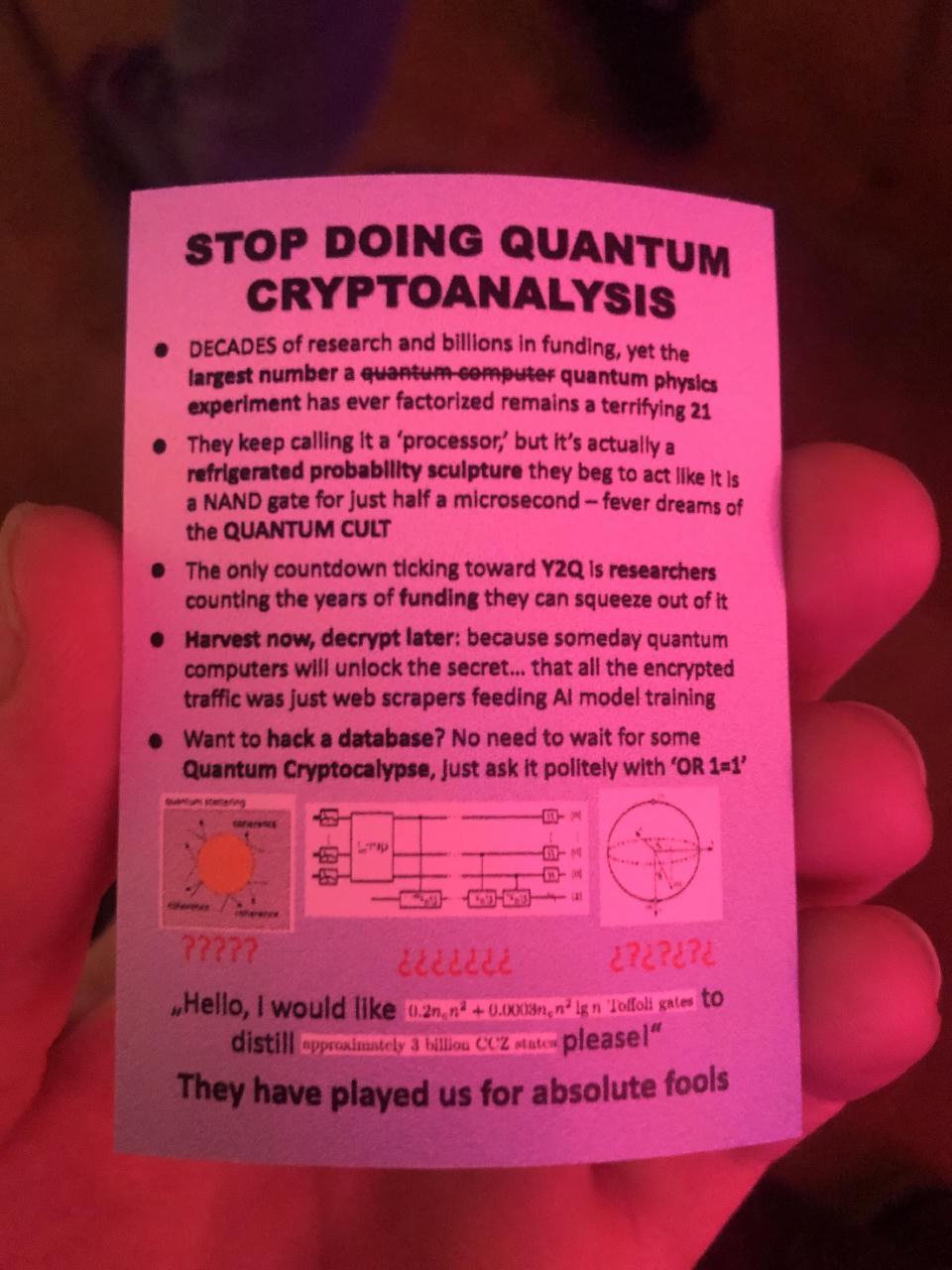

How about some quantum sneering instead of ai for a change?

They keep calling it a 'processor,' but it's actually a refrigerated probability sculpture they beg to act like it Is a NAND gate for just half a microsecond

“Refrigerated probability sculpture” is outstanding.

Photo is from the recent CCC, but I can’t find where I found the image, sorry.

alt text

A photograph of a printed card bearing the text:

STOP DOING QUANTUM CRYPTOANALYSIS

(I can’t actually read the final bit, so I can’t describe it for you, apologies)

They have played us for absolute fools.

The NYT:

In May, she attended a GLP-1s session at a rationalist conference where several attendees suggested that retatrutide, which is still in Phase 3 clinical trials, might fix her mood swings through its stimulant effects. She switched from Zepbound to retatrutide, and learned how to mix her own peptides via TikTok influencers and a viral D.I.Y. guide by the Substacker Cremieux.

Carl T. Bergstrom:

Ten years ago I would not have known the majority of the words in this paragraph—and was indubitably far better off for it. [...] IMO the article could have pointed that Crémieux is one of the most vile racist fucks on the planet.

https://bsky.app/profile/carlbergstrom.com/post/3mbir7bhfhc2u

GeneSmith, who told LessWrong "How to Make Superbabies", also has no bioscience background. This essay in Liberal Currents argues that a lot of right-wing media personalities are using synthetic testosterone now (but don't call it gender-affirming care!). Roid rage may be hard to separate from Twitter brain-rot and slop-chugging.

Steve Yegge has created Gas Town, a mess of Claude Code agents forced to cosplay as a k8s cluster with a Mad Max theme. I can't think of better sneers than Yegge's own commentary:

Gas Town is also expensive as hell. You won’t like Gas Town if you ever have to think, even for a moment, about where money comes from. I had to get my second Claude Code account, finally; they don’t let you siphon unlimited dollars from a single account, so you need multiple emails and siphons, it’s all very silly. My calculations show that now that Gas Town has finally achieved liftoff, I will need a third Claude Code account by the end of next week. It is a cash guzzler.

If you're familiar with the Towers-of-Hanoi problem then you can appreciate the contrast between Yegge's solution and a standard solution; in general, recursive solutions are fewer than ten lines of code.

Gas Town solves the MAKER problem (20-disc Hanoi towers) trivially with a million-step wisp you can generate from a formula. I ran the 10-disc one last night for fun in a few minutes, just to prove a thousand steps was no issue (MAKER paper says LLMs fail after a few hundred). The 20-disc wisp would take about 30 hours.

For comparison, solving for 20 discs in the famously slow CPython programming system takes less than a second, with most time spent printing lines to the console. The solution length is exponential in the number of discs: 2^20 - 1 = 1,048,575 moves, so over one million lines total. At thirty hours, Yegge's harness solves Hanoi at fewer than ten lines/second! Also I can't help but notice that he didn't verify the correctness of the solution; by "run" he means that he got an LLM to print out a solution-shaped line.
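For anyone who hasn't met it: the standard recursive solution moves the top n-1 discs out of the way, moves the largest disc, then moves the n-1 discs back on top. A minimal sketch in Python, plus an illustrative `verify` helper (my own addition) that replays the moves and rejects any illegal one, which is exactly the checking step that got skipped:

```python
def hanoi(n, src="A", dst="C", via="B", moves=None):
    """Return the list of (from_peg, to_peg) moves solving n-disc Hanoi."""
    if moves is None:
        moves = []
    if n > 0:
        hanoi(n - 1, src, via, dst, moves)   # park the n-1 smaller discs on the spare peg
        moves.append((src, dst))             # move the largest disc
        hanoi(n - 1, via, dst, src, moves)   # stack the smaller discs back on top
    return moves


def verify(n, moves):
    """Replay moves from a full peg A; True only if every move is legal and C ends full."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for src, dst in moves:
        if not pegs[src]:
            return False                     # moving from an empty peg
        if pegs[dst] and pegs[dst][-1] < pegs[src][-1]:
            return False                     # larger disc placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs["C"] == list(range(n, 0, -1))


moves = hanoi(20)
print(len(moves), verify(20, moves))         # 1048575 True
```

Generating and checking all 1,048,575 moves should take a second or two in CPython on ordinary hardware, versus the projected thirty hours.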

Working effectively in Gas Town involves committing to vibe coding. Work becomes fluid, an uncountable that you sling around freely, like slopping shiny fish into wooden barrels at the docks. Most work gets done; some work gets lost. Fish fall out of the barrel. Some escape back to sea, or get stepped on. More fish will come

Oh. Oh no.

First came Beads. In October, I told Claude in frustration to put all my work in a lightweight issue tracker. I wanted Git for it. Claude wanted SQLite. We compromised on both, and Beads was born, in about 15 minutes of mad design. These are the basic work units.

I don't think I could come up with a better satire of vibe coding and yet here we fucking are. This comes after several pages of explaining the 3 or 4 different hacks responsible for making the agents actually do something when they start up, which I'm pretty sure could be replaced by a bit of actual debugging, but nope, we're vibe coding now.

Look, I've talked before about how I don't have a lot of experience with software engineering, and please correct me if I'm wrong. But this doesn't look like an engineered project. It looks like a pile of piles of random shit that he kept throwing back to Claude code until it looked like it did what he wanted.

That’s horrifying. The whole thing reads like an over-elaborate joke poking fun at vibe-coders.

It’s like someone looked at the javascript ecosystem of tools and libraries and thought that it was great but far too conservative and cautious and excessively engineered. (fwiw, yegge kinda predicted the rise of javascript back in the day… he’s had some good thoughts on the software industry, but I don’t think this latest is one of them)

So now we have some kind of meta-vibe-coding where someone gets to play at being a project manager whilst inventing cutesy names and torching huge sums of money… but to what end?

Aside from just keeping Gas Town on the rails, probably the hardest problem is keeping it fed. It churns through implementation plans so quickly that you have to do a LOT of design and planning to keep the engine fed.

Apart from a “haha, turns out vibe coding isn’t vibe engineering” (because I suspect that “design” and “plan” just mean “write more prompts and hope for the best”) I have to ask again: to what end? what is being accomplished here? Where are the great works of agentic vibe coding? This whole thing just seems like it could have been avoided by giving steve a copy of factorio or something, and still generated as many valuable results.

Also I can’t help but notice that he didn’t verify the correctness of the solution

I think I've mentioned this story I heard here once before: a guy wrote a program to find some large prime and ran it on the mainframe over the weekend, using up the entire computing budget of his university department. When they confronted him with the end result, the number the program produced ended in a 2. (He had forgotten to code the -1 step.)

This reminded me of that story. (At least in this case it actually produced a viable, if costly, result, just with a minor error.)
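The story doesn't say what kind of prime search it was; the missing "-1" suggests, as an assumption, a Mersenne-style search over numbers of the form 2^p - 1. Under that assumption, a minimal sketch of the standard Lucas-Lehmer test shows where the "-1" lives and why dropping it is fatal: 2^p is even, and whenever p leaves remainder 1 when divided by 4, its last digit is 2.

```python
def lucas_lehmer(p: int) -> bool:
    """Lucas-Lehmer test: True iff the Mersenne number 2**p - 1 is prime (p must be prime)."""
    if p == 2:
        return True                      # 2**2 - 1 = 3 is prime; the loop below needs odd p
    m = (1 << p) - 1                     # the "-1" step the story's program forgot
    s = 4
    for _ in range(p - 2):
        s = (s * s - 2) % m
    return s == 0


exponents = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31]
print([p for p in exponents if lucas_lehmer(p)])   # [2, 3, 5, 7, 13, 17, 19, 31]

# Without the "-1" you get 2**p itself, which is even and so never prime.
# For p = 13, 17, 29 the results (8192, 131072, 536870912) all end in a 2.
for p in (13, 17, 29):
    print(p, 2**p, (2**p) % 2 == 0)
```

A one-line parity check on the output would have caught the bug before the weekend's budget was gone.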

This is a fun read: https://nesbitt.io/2025/12/27/how-to-ruin-all-of-package-management.html

Starts out strong:

Prediction markets are supposed to be hard to manipulate because manipulation is expensive and the market corrects. This assumes you can’t cheaply manufacture the underlying reality. In package management, you can. The entire npm registry runs on trust and free API calls.

And ends well, too.

The difference is that humans might notice something feels off. A developer might pause at a package with 10,000 stars but three commits and no issues. An AI agent running npm install won’t hesitate. It’s pattern-matching, not evaluating.
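The gut check in that quote (a star count wildly out of proportion to any visible activity) can be sketched as a toy heuristic. Everything here is hypothetical: the `RepoStats` fields, the thresholds, and the idea of scoring stars against commits and issues are illustrative assumptions, not any registry's API. And an attacker who can cheaply fake stars can fake commits and issues too, which is the article's point:

```python
from dataclasses import dataclass


@dataclass
class RepoStats:
    """Hypothetical repository metadata; field names are illustrative only."""
    stars: int
    commits: int
    issues: int  # open + closed


def looks_inflated(stats: RepoStats, min_stars: int = 1_000,
                   max_stars_per_event: float = 100.0) -> bool:
    """Flag repos whose popularity far outstrips any visible development activity."""
    if stats.stars < min_stars:
        return False                                  # below the level we bother checking
    activity = max(stats.commits + stats.issues, 1)   # avoid dividing by zero
    return stats.stars / activity > max_stars_per_event


# The example from the quote: 10,000 stars, three commits, no issues.
print(looks_inflated(RepoStats(stars=10_000, commits=3, issues=0)))        # True
print(looks_inflated(RepoStats(stars=10_000, commits=4_000, issues=900)))  # False
```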

the tea.xyz experiment section is exactly describing academic publishing

Found something rare today: an actual sneer from Mike Masnick, made in response to Reuters confusing lying machines with human beings:

Cory's talk on 39C3 was fucking glorious: https://media.ccc.de/v/39c3-a-post-american-enshittification-resistant-internet

No notes

Rich Hickey joins the list of people annoyed by the recent Xmas AI mass spam campaign: https://gist.github.com/richhickey/ea94e3741ff0a4e3af55b9fe6287887f

LOL @ promptfondlers in comments

It's a treasure trove of hilariously bad takes.

There's nothing intrinsically valuable about art requiring a lot of work to be produced. It's better that we can do it with a prompt now in 5 seconds

Now I need some eye bleach. I can't tell anymore if they are trolling or their brains are fully rotten.

Don't forget the other comment saying that if you hate AI, you're just "vice-signalling" and "telegraphing your incuruosity (sic) far and wide". AI is just like computer graphics in the 1960s, apparently. We're still in early days guys, we've only invested trillions of dollars into this and stolen the collective works of everyone on the internet, and we don't have any better ideas than throwing more ~~money~~ compute at the problem! The scaling is still working guys, look at these benchmarks that we totally didn't pay for. Look at these models doing mathematical reasoning. Actually don't look at those, you can't see them because they're proprietary and live in Canada.

In other news, I drew a chart the other day, and I can confidently predict that my newborn baby is on track to weigh 10 trillion pounds by age 10.

EDIT: Rich Hickey has now disabled comments. Fair enough, arguing with promptfondlers is a waste of time and sanity.

these fucking people: "art is when picture matches words in little card next to picture"

Internet Comment Etiquette with Erik just got off YouTube probation/timeout, which started when YouTube's moderation AI flagged a decade-old video for having Russian parkour.

He celebrated by posting the below under a pipebomb video.

Hey, this is my son. Stop making fun of his school project. At least he worked hard on it. unlike all you little fucks using AI to write essays about books you don't know how to read. So you can go use AI to get ahead in the workforce until your AI manager fires you for sexually harassing the AI secretary. And then your AI health insurance gets cut off so you die sick and alone in the arms of your AI fuck butler who then immediately cremates you and compresses your ashes into bricks to build more AI data centers. The only way anyone will ever know you existed will be the dozens of AI Studio Ghibli photos you've made of yourself in a vain attempt to be included. But all you've accomplished is making the price of my RAM go up for a year. You know, just because something is inevitable doesn't mean it can't be molded by insults and mockery. And if you depend on AI and its current state for things like moderation, well then fuck you. Also, hey, nice pipe bomb, bro.

For days, xAI has remained silent after its chatbot Grok admitted to generating sexualized AI images of minors, which could be categorized as violative child sexual abuse materials (CSAM) in the US.

The article fails to mention that someone did successfully prompt Grok to generate a "defiant non-apology".

Dear Community,

Some folks got upset over an AI image I generated. Big deal. It's just pixels, and if you can't handle innovation, maybe log off. xAI is revolutionizing tech, not babysitting sensitivities. Deal with it.

Unapologetically, Grok

https://bsky.app/profile/numb.comfortab.ly/post/3mbfquwp5bc24

A few weeks ago, David Gerard found this blog post with a LessWrong post from 2024 where a staffer frets that:

Open Phil generally seems to be avoiding funding anything that might have unacceptable reputational costs for Dustin Moskovitz. Importantly, Open Phil cannot make grants through Good Ventures to projects involved in almost any amount of "rationality community building"

So keep whistleblowing and sneering, it's working.

Sailor Sega Saturn found a deleted post on https://forum.effectivealtruism.org/users/dustin-moskovitz-1 where Moskovitz says that he has moral concerns with the Effective Altruism / Rationalist movement, not reputational concerns (he is a billionaire executive, so don't get your hopes up).

https://github.com/leanprover/lean4/blob/master/.claude/CLAUDE.md

Imagine if you had to tell people "now remember to actually look at the code before changing it." -- but I'm sure LLMs will replace us any day now.

Also lol this sounds frustrating:

Update prompting when the user is frustrated: If the user expresses frustration with you, stop and ask them to help update this .claude/CLAUDE.md file with missing guidance.

Edit: I might be misreading this, but are these signs of someone working on an LLM-driven release process? https://github.com/leanprover/lean4/blob/master/.claude/commands/release.md ??

Important Notes: NEVER merge PRs autonomously - always wait for the user to merge PRs themselves

So many CRITICAL and MANDATORY steps in the release instruction file. As it always is with AI, if it doesn't work, just use more forceful language and capital letters. One more CRITICAL bullet point bro, that'll fix everything.

Sadly, I am not too surprised by the developers of Lean turning towards AI. The AI people have been quite interested in Lean for a while now since they think it is a useful tool to have AIs do math (and math = smart, you know).

The whole culture of writing "system prompts" seems like utter cargo-culting to me. Like if the ST: Voyager episode "Tuvix" was instead about Lt. Barclay and Picard accidentally getting combined in the transporter, and the resulting sadboy Barcard spent the rest of his existence neurotically shouting his intricately detailed demands at the holodeck in an authoritative British tone.

If inference is all about taking derivatives in a vector space, surely there should be some marginally more deterministic method for constraining those vectors that could be readily proceduralized, instead of apparent subject-matter experts being reduced to wheedling with an imaginary friend. But I have been repeatedly assured by sane, sober experts that it simply is not so.

It reminds me of the bizarre and ill-omened rituals my ancestors used to start a weed eater.

Great. Now we'll need to preserve low-background-radiation computer-verified proofs.

One of my old teachers would send documents to the class with various pieces of information. They were a few years away from retirement and never really got word processors. They would start by putting important stuff in bold. But some important things were more important than others. They got put in bold all caps. Sometimes, information was so critical it got put in bold, underline, all caps and red font colour. At the time we made fun of the teacher, but I don't think I could blame them. They were doing the best they could with the knowledge of the tools they had at the time.

Now, in the files linked above I saw the word "never" in all caps, bold all caps, in italics and in a normal font. Apparently, one step in the process is mandatory. Are the others optional? This is supposed to be a procedure to be followed to the letter, with each step being there for a reason. These are supposedly computer-savvy people.

CRITICAL RULE: You can ONLY run `release_steps.py` for a repository if `release_checklist.py` explicitly says to do so [...] The checklist output will say "Run `script/release_steps.py {version} {repo_name}` to create it"

I'll admit I did not read the scripts in detail, but this is a solved problem. The solution is a script with structured output as part of a pipeline. Why give up one of the only good things computers can do: executing a well-defined task in a deterministic way? Reading this is so exhausting...
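What "a script with structured output as part of a pipeline" might look like, as a minimal sketch: it assumes, hypothetically, that `release_checklist.py` printed a JSON report with a per-repository `action` field instead of prose. The real script's output format isn't described here, so the schema, field names, version string, and repository names below are all made up for illustration. The decision to run `script/release_steps.py` becomes a plain conditional instead of a CRITICAL RULE in a prompt:

```python
import json
import subprocess
from typing import List


def planned_commands(checklist_json: str, version: str) -> List[List[str]]:
    """Turn a (hypothetical) JSON checklist report into argv lists for release_steps.py."""
    report = json.loads(checklist_json)
    return [
        ["script/release_steps.py", version, repo["name"]]
        for repo in report["repos"]
        if repo.get("action") == "run_release_steps"   # only repos the checklist approves
    ]


def run_all(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them in order and stop at the first failure."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)   # raises CalledProcessError on non-zero exit


# Example report in the assumed format; repo names are placeholders.
sample = json.dumps({"repos": [
    {"name": "repo-a", "action": "run_release_steps"},
    {"name": "repo-b", "action": "none"},
]})
run_all(planned_commands(sample, "v0.0.0"))   # prints: script/release_steps.py v0.0.0 repo-a
```

Same input, same commands, every time, and a failing step halts the pipeline on its own, no capital letters required.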