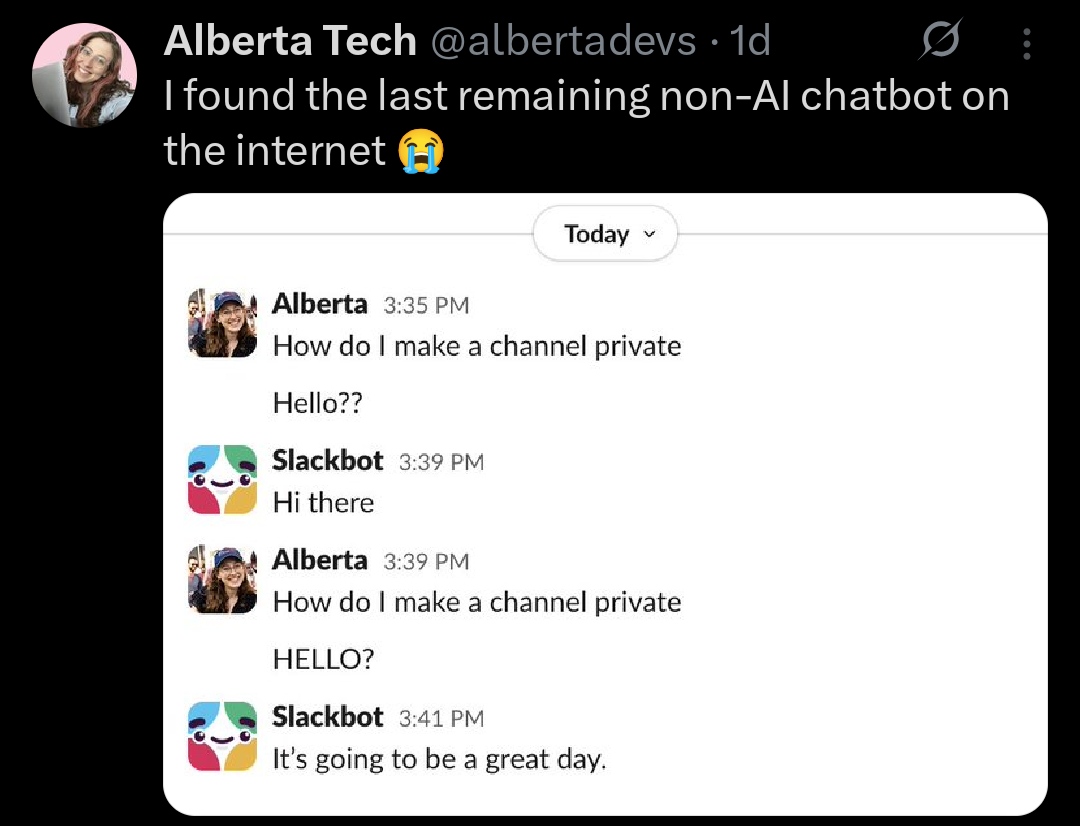

or you found the most passive aggressive AI chatbot. I hope it's the latter because it's funnier if you imagine the bot was mimicking sarcasm.

Or the bot was actively excited that they are about to frustrate the fuck out of a meat bag.

yeah that would be kinda funny too

Best bot.

Is CleverBot still around, and as stupid as always?

Cleverbot is still around, but he's got nothing on SmarterChild.

https://computerhistory.org/blog/smarterchild-a-chatbot-buddy-from-2001/

Oh, SmarterChild. Now that brings me back. I remember there being a slew of chatbots that followed it. I didn't know what Radiohead was at the time, I learned about the band from them, and thought it odd they had their own chatbot.

What is the difference between an AI chatbot and a non-AI chatbot in this context?

"AI" = Stuff like ChatGPT that use Large Language Models (LLM)

"Non-AI" = Bots that don't use LLMs.

So without hardcoded I/O choice options à la 20 Questions, how is the latter supposed to function?

Kind of exactly like that. They're not capable of very meaningful conversations, but they can be convincing for a minute or two. Plenty of examples and info on https://en.wikipedia.org/wiki/Chatbot

Edit: namely https://en.wikipedia.org/wiki/ELIZA is a great example.

I’m not super familiar with ELIZA but this section of the text

“ELIZA starts its process of responding to an input by a user by first examining the text input for a "keyword". A "keyword" is a word designated as important by the acting ELIZA script,…”

makes it sound like an LLM with only a small pool of language data? An LM, if you will.

No, it's much, much more primitive than an LLM.

It scans your last message for keywords, potentially multiple keywords, keywords in some order, etc, fairly simple patterns you can use something like regex to parse.

Then, based on what it detects, it picks from something like a tree of responses, maybe reinserting the specific keyword you used.

Basically, imagine plotting out the entire dialogue tree from some video game.

... It really is not too much more complex than that.
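To make that concrete, here is a minimal sketch of the keyword-and-canned-response idea in Python. The rule table, the `respond` name, and the replies are all made up for illustration; this is not ELIZA's actual script, just the same general shape.

```python
import re

# Hypothetical rule table: (keyword pattern, response template).
# Earlier rules win, so more specific patterns go first.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(mother|father|family)\b", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello. What would you like to talk about?"),
]
FALLBACK = "Please go on."


def respond(message: str) -> str:
    """Return the canned reply for the first rule whose pattern matches."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Reinsert whatever the user typed, minus trailing punctuation.
            captured = [group.rstrip(" .!?") for group in match.groups()]
            return template.format(*captured)
    # No keyword found: fall back to a generic prompt.
    return FALLBACK


print(respond("I need a vacation."))
print(respond("HELLO?"))
print(respond("What is the weather?"))
```

Everything the bot can say is sitting right there in `RULES`, which is why you can read it, debug it, and fit it in a few kilobytes.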

An LLM, on the other hand, has been trained on something like trillions of words of text, which gets processed through a neural network with billions of learned parameters, producing a very complex set of relationships between characters and words that it then uses to generate responses.

And when I say 'very complex' I mean that the results of parsing all the training data are not human readable, even by experts. It's a gibberish mass of relationships spread across matrices holding billions of numbers, something like that... it's not even really code that you could read and then say 'oh! that part is causing this problem!'

So tldr:

I could probably teach you how to write a simple oldschool chatbot that works in a terminal or on IRC, in like, a week or two, even if you have literally 0 prior coding experience. You could easily make a simple chatbot fit in under a megabyte of code, even under a tenth or hundredth of a megabyte, for the actual chat parts of it.

... I absolutely could not teach you how to make an LLM from scratch, and even if I could, we'd have to rent some server clusters to process even a tiny training data set, for a very primitive version of an LLM. And it would take up gigabytes of local space, and that's with the finished, condensed, 'trained' model. Could easily be thousands of times more data that would go into the training.

That tldr needs a tldr…

But also you absolutely can learn & build small versions of LLMs on a regular laptop. I did it on my old 2017 Dell XPS, and trained it on the complete works of Shakespeare. It learnt to write almost passable Shakespeare hallucination in a couple of hours. There’s a good tutorial online if you search for it.
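For a feel of what "training on text" means at the very smallest scale, here is a rough sketch of a character-level bigram model in plain Python. To be clear, this is a stand-in and not what the tutorial builds: there is no neural network or transformer here, just counts of which character follows which. The function names and the one-line corpus are assumptions made for the example.

```python
import random
from collections import Counter, defaultdict


def train(text: str) -> dict:
    """Count, for each character, which characters follow it in the text."""
    counts = defaultdict(Counter)
    for current, following in zip(text, text[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict, start: str, length: int, seed: int = 0) -> str:
    """Sample characters one at a time, weighted by the learned counts."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    out = [start]
    while len(out) < length:
        followers = counts.get(out[-1])
        if not followers:  # this character was never seen with a successor
            break
        chars, weights = zip(*followers.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)


# Stand-in corpus; the comment above used the complete works of Shakespeare.
corpus = "to be, or not to be, that is the question"
model = train(corpus)
sample = generate(model, start="t", length=40)
print(sample)
```

Nothing in `generate` was written by hand as a response; the output comes entirely from statistics of the training text. A real small LLM swaps the count table for a trained neural network, which is where the hours of laptop time go.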

Well shit.

I've only figured out how to just run one locally on a Steam Deck, not build and train one.

Still though, even for this more primitive one you built, I'm guessing the overall file footprint of it was orders of magnitude greater than what you could fit into a megabyte of simpler chatbot that runs in a local terminal, right?

Oh sure. The training data is about 5 MB, so easily manageable on a relatively modern machine, but not on the kind of thing that was used for ELIZA.

Sure, but in this case the responses are directly programmed to the keywords, and it's not crawling through datasets for patterns to replicate.

I think somebody recently named their AI (chatbot?) project Eliza, which is a perfect Torment Nexus moment.

It never lies to you, at least. Unless you format your questions with lots of double negatives

Neither one of which can actually answer questions or provide any real help.

In this case, a simple chatbot like she interacted with falls under AI. AI companies have marketed AI as synonymous with genAI and especially transformer models like GPTs. However, AI as a field is split into two types: machine learning and non-machine-learning (traditional algorithms).

Where the latter starts gets kind of fuzzy, but think algorithms with hard-coded rules like traditional chess engines, video game NPCs, and simple rules-based chatbots. There's no training data; a human is sitting down and manually programming the AI's response to every input.

By an AI chatbot, she'd be referring to something like a large language model (LLM) – usually a GPT. That's specifically a generative pretrained transformer – a type of transformer which is a deep learning model which is a subset of machine learning which is a type of AI (you don't really need to know exactly what that means right now). By not needing hard-coded rules and instead being a highly parallelized and massive model trained on a gargantuan corpus of text data, it'll be vastly better at its job of mimicking human behavior than a simple chatbot in 99.9% of cases.

TL;DR: What she's seeing here technically is AI, just a very primitive form of an entirely different type that's apparently super shitty at its job.

Slack does have AI features now, mostly focused around summarization. I found the features pretty useless and turned them off (as much as they allow you to, at least). It’s not very often that I don’t care to know the whole context of messages I receive at work. And I do have channels that I usually just skim or ignore that the summaries weren’t super helpful for. It strips out way too much of the conversation.

Similarly, I really dislike the Apple Intelligence summarization features. It drove me to finally turn off Apple Intelligence on all my devices. Do people find summarization useful? Genuinely curious for use cases.

Funny enough, Slackbot (or at least the current incarnation of it) is definitely based on an LLM. Although I suspect this screenshot is older, because when the current Slackbot gives bad responses it does so a lot more verbosely.

I've found it to usually work better than most AI, actually, at least if you ask it stuff like "which slack threads do I need to follow up on?" or other stuff it can work out based on your slack activity.

Does this imply an AI-chatbot would perform better? Because I reject that notion.

I mean probably. Most non-AI bots would work better, even.

HELLO?

I would ignore this poor slack (and general communication) etiquette myself.

Presumably she works in tech, but it's very strange to see that kind of interaction. I don't know why anyone would type in all caps or use multiple question marks regardless of if they're talking to AI, a simple bot, or a real person. Maybe a "help" or "options" but I can't imagine thinking an all caps hello would help in this situation.

Remember that half of tech is in sales/management/etc. and half of them are complete idiots

And many of us get absolutely pissed off when we have to talk to an idiotic bot that can't answer a simple question. Hell, I get pissed off when I have to talk to somebody in Indonesia, the Philippines, or India that can't answer a simple question.

That’s AlbertaTech. She does comedy skits on youtube/tiktok related to the tech world. She’s really bright and funny, I would assume she’s doing that for the bit.

You want me to put AI in the sparkling water?

Be quiet Gerard

Gerard doesn’t work here.

It would be worse if it was an AI, then it would just straight up mislead you

All chatbots are AI, from ELIZA to GPT-4. Did you mean LLM-based, maybe?

Your pedantry is acknowledged and appreciated, but everyone knows exactly what they meant including you. Languages evolve and words' meanings change and evolve over time.

Merriam-Webster: https://www.merriam-webster.com/dictionary/ai

Quote, some emphasis mine:

artificial intelligence

[...]

specifically : a program or set of programs developed using tools (such as machine learning and neural networks) and used to generate content, analyze complex patterns (as in speech or digital images), or automate complex tasks

see also generative AI

You really can't tell?

Technically you can call a chain of three if/else conditions an AI but come on, you KNOW that's not what we mean.