this post was submitted on 02 Jan 2026


People Twitter


People tweeting stuff. We allow tweets from anyone.

RULES:

- Mark NSFW content.

- No doxxing people.

- Must be a pic of the tweet or similar. No direct links to the tweet.

- No bullying or international politics.

- Be excellent to each other.

- Provide an archived link to the tweet (or similar) being shown if it's a major figure or a politician. Archive.is is the best way.

founded 2 years ago


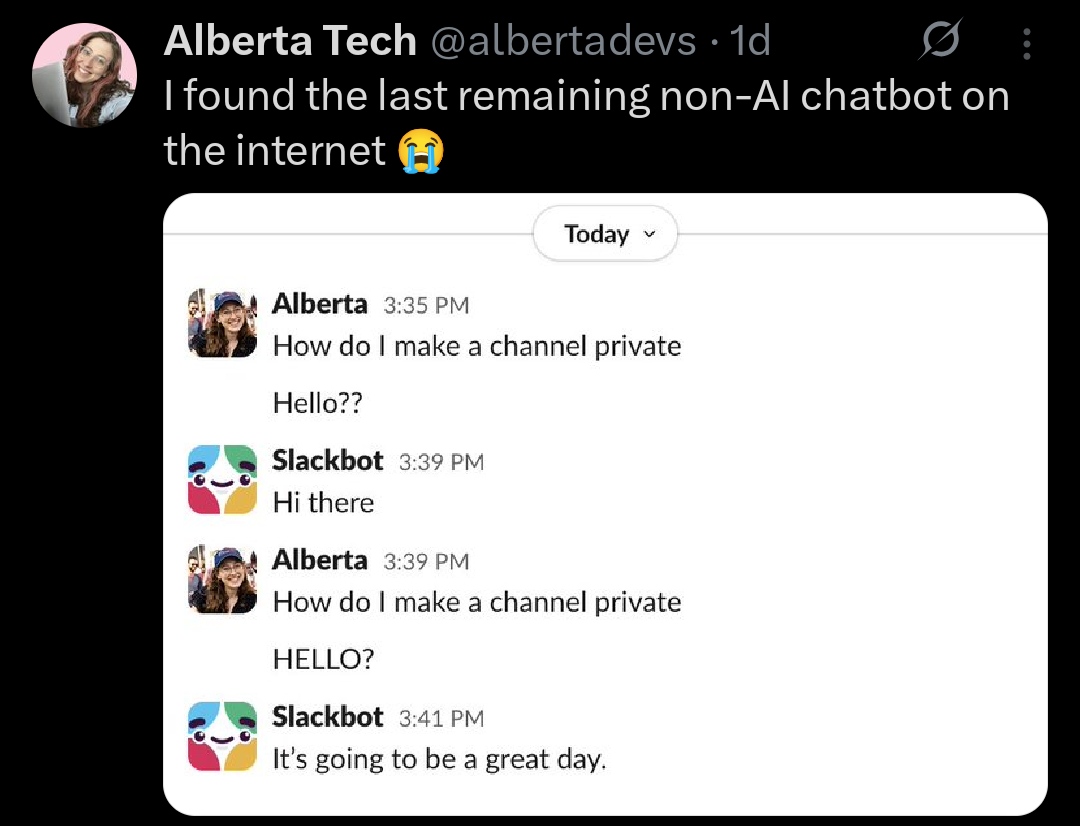

"AI" = Stuff like ChatGPT that use Large Language Models (LLM)

"Non-AI" = Bots that don't use LLMs.

So without hardcoded I/O choice options à la 20 Questions, how is the latter supposed to function?

Kind of exactly like that. They're not capable of very meaningful conversations, but they can be convincing for a minute or two. Plenty of examples and info on https://en.wikipedia.org/wiki/Chatbot

Edit: in particular, https://en.wikipedia.org/wiki/ELIZA is a great example.

I’m not super familiar with ELIZA, but this section of the text

makes it sound like an LLM with only a small pool of language data? An LM, if you will.

No, it's much, much more primitive than an LLM.

It scans your last message for keywords (possibly several of them, possibly in a particular order, etc.): fairly simple patterns that you can match with something like regex.

Then, based on what it detects, it picks from something like a tree of responses, maybe reinserting the specific keyword you used.
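A minimal sketch of that keyword-and-template approach in Python. The rule table, the pronoun "reflection" trick, and every name here are hypothetical illustrations in the spirit of ELIZA, not ELIZA's actual script:

```python
import random
import re
from typing import Optional

# Hypothetical rule table in the spirit of ELIZA (not its real script):
# each regex is paired with response templates, and "{0}" is filled with
# whatever the regex captured, so the bot can echo part of your message.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE),
     ["Why do you need {0}?", "Would it really help you to get {0}?"]),
    (re.compile(r"\bi am (.+)", re.IGNORECASE),
     ["How long have you been {0}?", "Why do you say you are {0}?"]),
    (re.compile(r"\b(mother|father)\b", re.IGNORECASE),
     ["Tell me more about your {0}."]),
]

# Used when no keyword matches at all.
FALLBACKS = ["Please go on.", "I see.", "How does that make you feel?"]

# Swap first and second person so "my job" comes back as "your job".
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Swap pronouns word by word in a captured fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(message: str, rng: Optional[random.Random] = None) -> str:
    """Return a canned reply chosen by the first rule whose regex matches."""
    rng = rng or random.Random()
    text = message.strip().rstrip(".!?")
    for pattern, templates in RULES:
        match = pattern.search(text)
        if match:
            return rng.choice(templates).format(reflect(match.group(1)))
    return rng.choice(FALLBACKS)
```

For example, `respond("I am tired of my job")` returns either "How long have you been tired of your job?" or "Why do you say you are tired of your job?". There is no understanding anywhere in it: the whole "intelligence" is the hand-written rule table.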

Basically, imagine plotting out the entire dialogue tree from some video game.

... It really is not too much more complex than that.
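To make the video-game comparison concrete, here is a hypothetical hand-written dialogue tree as a small Python data structure. The NPC lines and option names are invented for illustration; the point is that every reachable path has to be authored in advance:

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """One point in a hand-written dialogue tree."""
    line: str                                                 # what the bot says here
    choices: Dict[str, "Node"] = field(default_factory=dict)  # player option -> next node


# Hypothetical NPC conversation: every reachable path is written out by hand.
TREE = Node("Welcome, traveler. Need something?", {
    "buy": Node("Take a look at my wares."),
    "rumors": Node("They say the old mine is haunted.", {
        "tell me more": Node("Nobody who went in has come back."),
        "goodbye": Node("Safe travels."),
    }),
    "goodbye": Node("Safe travels."),
})


def walk(root: Node, picks: List[str]) -> List[str]:
    """Follow the player's picks from the root and collect the bot's lines."""
    node, said = root, [root.line]
    for pick in picks:
        if pick not in node.choices:    # anything off-script gets nowhere
            said.append("(no such option)")
            break
        node = node.choices[pick]
        said.append(node.line)
    return said
```

`walk(TREE, ["rumors", "tell me more"])` yields the greeting, the rumor, and "Nobody who went in has come back."; anything the author didn't anticipate just hits a dead end.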

An LLM, on the other hand, has been trained on something like trillions of words (technically "tokens", i.e. words and word fragments) of text, which get processed through dozens of neural-network layers holding billions of adjustable parameters, producing a very complex set of statistical relationships between words and word fragments that it then uses to generate responses.
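For contrast with the rule-based bot, here is about the simplest statistical language model there is: a character bigram model that learns which character tends to follow which from its training text. This is a drastic simplification, not how an LLM works internally (no neural network, no layers, single characters instead of tokens); it only illustrates deriving relationships from training data instead of hand-writing responses:

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count how often each character follows each other character.

    These counts are this toy model's "learned relationships". An LLM instead
    learns billions of numeric weights, which is why it can't be read like this.
    """
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: dict, start: str, length: int, seed: int = 0) -> str:
    """Sample text one character at a time, weighted by the learned counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:    # this character never had a successor in training
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)
```

Trained on a few megabytes of Shakespeare, something like this produces Shakespeare-flavored gibberish; the models in real LLMs are vastly larger and capture far longer-range patterns.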

And when I say 'very complex' I mean that the results of parsing all the training data are not human-readable, even by experts: it's a gibberish mass of billions of numbers stored in big matrices, something like that... It's not even really code that you could read and then say 'oh! that part is causing this problem!'

So tldr:

I could probably teach you how to write a simple old-school chatbot that works in a terminal or on IRC in, like, a week or two, even if you have literally zero prior coding experience. The actual chat parts of a simple chatbot could easily fit in under a megabyte of code, even under a tenth or a hundredth of a megabyte.

... I absolutely could not teach you how to make an LLM from scratch, and even if I could, we'd have to rent some server clusters to process even a tiny training data set for a very primitive version of an LLM. And it would take up gigabytes of local space, and that's just the finished, condensed, 'trained' model. The data that goes into the training could easily be thousands of times larger.
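Where the "gigabytes" come from is simple arithmetic: the weights file is roughly the parameter count times the bytes stored per parameter. A rough sketch, where the 7-billion-parameter figure is just a hypothetical example size:

```python
def weights_size_gb(num_params: float, bytes_per_param: float) -> float:
    """Rough size of a model's weights in gigabytes (1 GB = 10**9 bytes).

    Ignores file-format overhead, tokenizer files and so on.
    """
    return num_params * bytes_per_param / 1e9


# Hypothetical example: a 7-billion-parameter model.
print(weights_size_gb(7e9, 2))    # 16-bit weights: 14.0
print(weights_size_gb(7e9, 0.5))  # 4-bit quantized weights: 3.5
```

By the same arithmetic, a rule-based bot whose chat logic is around 10 KB is more than a million times smaller than the 16-bit figure.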

That tldr needs a tldr…

But you also absolutely can learn how to build small versions of LLMs on a regular laptop. I did it on my old 2017 Dell XPS and trained it on the complete works of Shakespeare. It learnt to write almost-passable Shakespeare hallucinations in a couple of hours. There’s a good tutorial online if you search for it.

Well shit.

I've only figured out how to just run one locally on a Steam Deck, not build and train one.

Still, even for this more primitive one you built, I'm guessing its overall file footprint was orders of magnitude larger than the megabyte or so a simpler chatbot running in a local terminal would fit into, right?

Oh sure. The training data is about 5 MB, so it's easily manageable on a relatively modern machine, but not on the kind of thing that was used for ELIZA.

Sure, but in this case the responses are programmed directly against the keywords rather than crawling through datasets for patterns to replicate.

I think somebody recently named their AI (chatbot?) project Eliza, which is a perfect Torment Nexus moment.

It never lies to you, at least. Unless you format your questions with lots of double negatives.

Neither one of which can actually answer questions or provide any real help.