Uncritical support for these AI bros refusing to learn CS and thereby making the CS nerds that actually know stuff more employable.

But to recognize people who know something you too need to know something, but techbros are very often bazingabrained AI-worshippers.

"INTEGER SIZE DEPENDS ON ARCHITECTURE!"

"INTEGER SIZE DEPENDS ON ARCHITECTURE!"

That will also be deduced by AI
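For anyone who wants the non-AI version of that deduction, here is a minimal C sketch. The helper names `int_bits` and `int32_bits` are made up for illustration. It assumes the platform provides `int32_t`, which is technically optional but present on basically everything.

```c
#include <limits.h>
#include <stddef.h>
#include <stdint.h>

/* Storage size of a plain int in bits on whatever platform compiles this.
   The C standard only guarantees a range that needs at least 16 bits. */
size_t int_bits(void) {
    return sizeof(int) * CHAR_BIT;
}

/* int32_t, where the implementation provides it, is exactly 32 bits
   with no padding, regardless of architecture. */
size_t int32_bits(void) {
    return sizeof(int32_t) * CHAR_BIT;
}
```

If the width actually matters, reach for the fixed-width types in `<stdint.h>` instead of guessing what `int` is today.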

This is simply revolutionary. I think once OpenAI adopts this in their own codebase and all queries to ChatGPT cause millions of recursive queries to ChatGPT, we will finally reach the singularity.

There was a paper about improving LLM arithmetic a while back (spoiler: its accuracy outside of the training set is... less than 100%) and I was giggling at the thought of AI getting worse for the unexpected reason that it uses an LLM for matrix multiplication.

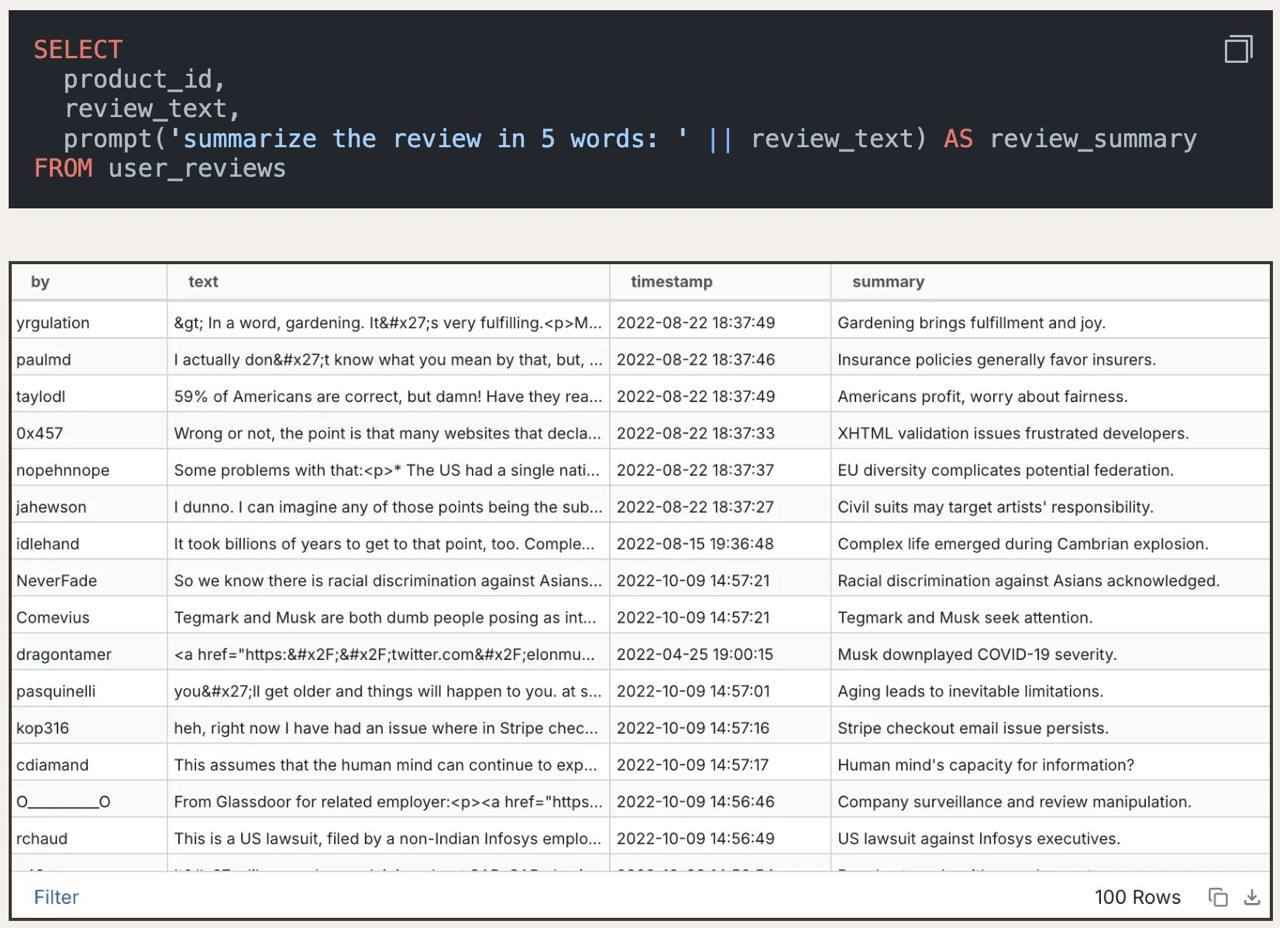

Yeah lol this is a weakness of LLMs that's been very apparent since their inception. I have to wonder how different they'd be if they did have the capacity to stop using the LLM as the output for a second, switched to a deterministic algorithm to handle anything logical or arithmetical, then fed that back to the LLM.

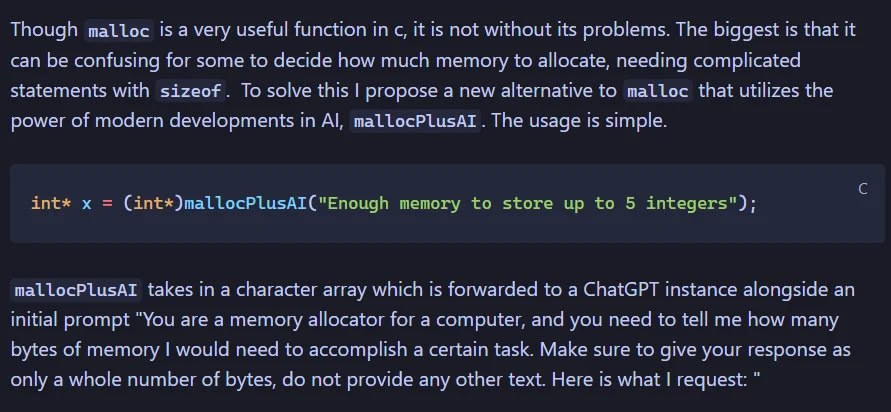

mallocPlusAI

That made me think of... molochPlusAI("Load human sacrifice baby for tokens")

I'd just like to interject for a moment. What you're referring to as molochPlusAI, is in fact, GNU/molochPlusAI, or as I've recently taken to calling it, GNUplusMolochPlusAI.

modern CS is taking a perfectly functional algorithm and making it a million times slower for no reason

inventing more and more creative ways to burn excess cpu cycles for the demiurge

Can we make a simulation of a CPU by replacing each transistor with an LLM instance?

Sure it'll take the entire world's energy output but it'll be bazinga af

why do addition when you can simply do 400 billion multiply accumulates

lets add full seconds of latency to malloc with a non-determinate result this is a great amazing awesome idea it's not like we measure the processing speeds of computers in gigahertz or anything

sorry every element of this application is going to have to query a third party server that might literally just undershoot it and now we have an overflow issue oops oops oops woops oh no oh fuck

want to run an application? better have internet fucko, the idea guys have to burn down the amazon rainforest to puzzle out the answer to the question of the meaning of life, the universe, and everything: how many bits does a 32-bit integer need to have

new memory leak just dropped–the geepeetee says the persistent element 'close button' needs a terabyte of RAM to render, the linear algebra homunculus said so, so we're crashing your computer, you fucking nerd

the way I kinda know this is the product of C-Suite and not a low-level software engineer is that the syntax is mallocPlusAI and not aimalloc or gptmalloc or llmalloc.

and it's malloc, why are we doing this for things we're ultimately just putting on the heap? overshoot a little–if you don't know already, it's not going to be perfect no matter what. if you're going to be this annoying about memory (which is not a bad thing) learn rust dipshit. they made a whole language about it

if you're going to be this annoying about memory (which is not a bad thing) learn rust dipshit. they made a whole language about it

holy fuck that's so good

there it is, the dumbest thing i'll see today, probably.

this is definitely better than having to learn the number of bytes your implementation uses to store an integer and doing some multiplication by five.

sizeof()

whoa, whoa. this is getting complicated!

There's no way the post image isn't joking right? It's literally a template.

edit: Type * arrayPointer = malloc(numItems * sizeof(Type));
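A slightly more defensive sketch of that one-liner, specialized to `int`. The helper name `alloc_ints` is hypothetical, and the overflow guard is an addition that nobody upthread asked for:

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: allocate room for n ints, or return NULL. */
int *alloc_ints(size_t n) {
    /* Refuse element counts whose byte size would overflow size_t. */
    if (n > SIZE_MAX / sizeof(int)) {
        return NULL;
    }
    /* sizeof *p follows the pointer's type, so the expression stays
       correct if the element type is changed later. */
    int *p = malloc(n * sizeof *p);
    return p; /* NULL on allocation failure; the caller must free() it */
}
```

Usage is the usual `int *a = alloc_ints(5);`, then check for `NULL`, then `free(a)` when done. No network round trip required.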

Yeah lol it does seem a ton of people ITT missed the joke. Understandably since the AI people are... a lot.

This right here is giving me flashbacks of working with the dumbest people in existence in college because I thought I was too dumb for CS and defected to Comp Info Systems.

One of the things I've noticed is that there are people who earnestly take up CS as something they're interested in, but every time tech booms there's a sudden influx of people who would be B- marketing/business majors coming into computer science. Some of them even do ok, but holy shit do they say the most "I am trying to sell something and will make stuff up" things.

Chatgeepeetee please solve the halting problem for me.

My guy, if you don't want to learn malloc just learn Rust instead of making every basic function of 99% of electronics take literal seconds.

I switched degrees out of CS because of shit like this. The final straw was having to write code with pencil and paper on exams. I'm probably happier than I would've been making six figures at some bullshit IT job.

Honestly I like writing code on paper vs. actual code on a computer. But that just means I should have majored in math.

if it makes u feel better i graduated out of CS and can't find a job bc the field is so oversaturated

Did the C 2024 standard include a specific ChatGPT specification? Seems like an easy opening for unspecified behavior.

BRB abusing LD_PRELOAD, recompiling Linux, pushing to prod and taking a sabbatical in Alaska.