this post was submitted on 10 Dec 2024

179 points (99.4% liked)

chapotraphouse



founded 4 years ago


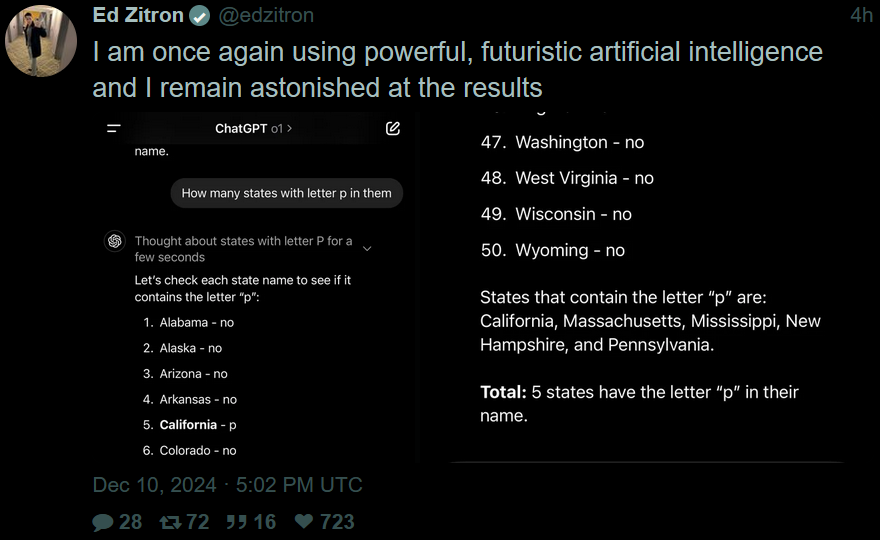

This fuckgin stupid quantum computer can't even solve math problems my classical von Neumann architecture computer can solve! Hahahah, this PROVES computers will never be smart. Only I am smart! The computer doesn't even possess a fraction of my knowledge of anime!!

In a rapidly deteriorating ecology, throwing 300 billion per year at this tech of turning electricity into heat does seem ill-advised, yes.

then I guess it's a good thing that in addition to producing humorous output when prompted with problems it's ill suited to solve, it can also pass graduate level examinations and diagnose disease better than a doctor.

Amazing, it can pass tests which it has churned through 1000 times but cannot produce a simple answer a child might stumble through. It's not cognition, it's regurgitation. You do get diagnosed at the llm-shop mate, have fun

Yeah you're right! What use is having the entirety of medical knowledge in every language REGURGITATED at you in a context aware fashion to someone who can't afford a doctor? After all it's not cognition in the same way that I do it.

How many shitty doctors getting nudged towards a better outcome for real people does this tech need to demonstrate to offset the OCEAN BOILING costs of this tech do you think?

at least 3 million.

Cite your sources mate, ai driven image recognition of lung issues is kind of a semi-joke in the field.

The majority of shit health outcomes is not missing an esoteric cancer on an image, it's an overworked nurse missing a bone fracture, it's not getting urea/blood analysis done in time, it's a doctor prescribing antibiotics without probiotics afterwards, it's a drug being locked by IP in a poor country or a drug costing too much cause a Johnson acquisition spent that much money for the patent, or the nuts pricing of clinical trials. Developing a new working drug costs like 40 mil, trialing it with the FDA costs 2 billion. Now you do tell me how AI turning 40 mil into 20 mil will make it cheaper.

The majority of healthcare work is, you know, work. Patient care, surgery, not fucking Doctor House, MD finding the right drug. 95% of cases could be solved by an honest WebMD, congrats. Who will set your broken arm? Will AI do an MRI scan of your ACL? Maybe an x-ray? A dipshit can look at an image and say that's wrong, AI can tell you you should put it in a cast and avoid lateral movements for a month, so what then?

This is so off the mark it's not worth my time.

Can't wait to pick up my prescription for hyperactivated antibiotics.

https://www.cio.com/article/3593403/patients-may-suffer-from-hallucinations-of-ai-medical-transcription-tools.html

How often do you think use of AI improves medical outcomes vs makes them worse? It's always super-effective in the advertising but when used in real life it seems to be below 50%. So we're boiling the oceans to make medical outcomes worse.

To answer your question, AI would need to demonstrate improved medical outcomes at least 50% of the time (in actual use) for me to even consider looking at it being useful.

50% is the number yeah? I wish yall took "no investigation no right to speak" more seriously.

They've provided a source, indicating that they have done investigation into the issue.

The quote isn't "If you don't do the specific investigation that I want you to do and come to the same conclusion that I have, then no right to speak."

If you believe their investigation led them to an erroneous position, it is now incumbent on you to make that case and provide your supporting evidence.

Y'all are suffering because of the lack of downvotes, so you need to actually dunk on someone instead of downvoting and moving on

We need to make a chat gpt powered dunking bot

ChatGPT is censored, this calls for some more advanced LLMing, perhaps even a finetune based on the Hexbear comment section argument corpus. It's only ethical if we do it for the purpose of dunking on chuds/libs

LLMs are categorically not AI, they're overgrown text parsers based on predicting text. They do not store knowledge, they do not acquire knowledge, they're basically just that little bit of speech processing that your brain does to help you read and parse text better, but massively overgrown and bloated in an attempt to make that also function as a mimicry of general knowledge. That's why they hallucinate and are constantly wrong about anything that's not a rote answer from their training data: because they do not actually have any sort of thinking bits or mental model or memory, they're just predicting text based on a big text log and their prompts.

They're vaguely interesting toys, though not for how ludicrously expensive they are to actually operate, but they represent a fundamentally wrong approach that's receiving an obscene amount of resources in an attempt to make it not suck, without any real results to show for it. The sorts of math and processing involved in how they work internally have broader potential, but these narrowly focused chatbots suck and are a dead end.

These models absolutely encode knowledge in their weights. One would really be showing their lack of understanding about how these systems work to suggest otherwise.

Except they don't, definitionally. Some facts get tangled up in them and can consistently be regurgitated, but they fundamentally do not learn or model them. They no more have "knowledge" than image generating models do, even if the image generators can correctly produce specific anime characters with semi-accurate details.

"Facts get tangled up in them". lol Thanks for conceding my point.

I am begging you to raise your standard of what cognition or knowledge is above your phone's text prediction lmao

Don't be fatuous. See my other comment here: https://hexbear.net/comment/5726976

Quantum computers can decide anything that a classical computer can and vice versa, that's what makes them computers lmao

LLMs are not computers and they're not even good "AI"*, they have the same basis as Markov chains. Everything is just a sequence of tokens to them, there is ZERO computation or reasoning happening. The only thing they're good at is tricking people into thinking they are good at reasoning or computing and even that illusion falls apart the moment you ask something obviously immediately true or false and which can't be faked by portioning out some of the input sludge (training data)
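For anyone who hasn't seen one, here is a rough sketch of the kind of Markov chain text generator being used as the comparison point. It only illustrates what "predict the next token from what came before" means in the simplest bigram case; it is not a claim about how any real LLM is implemented, and the function names and toy corpus are made up for the example.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words observed immediately after it."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Walk the chain: repeatedly pick a random observed follower of the last word."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    out = [start]
    for _ in range(length - 1):
        followers = table.get(out[-1])
        if not followers:  # dead end: the last word never had a successor
            break
        out.append(rng.choice(followers))
    return " ".join(out)


# Toy corpus, purely illustrative.
corpus = "the cat sat on the mat and the dog sat on the rug"
table = build_bigram_table(corpus)
print(generate(table, "the", 8))
```

The output can only ever recombine word pairs that already appear in the corpus, which is the "regurgitation" intuition in its purest form. Whether that intuition carries over to transformer models is exactly what this thread is arguing about.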

It's the perfect system for late capitalism lol, everything else is fake too

*We used to reserve this term for knowledge systems based on actually provable and defeasible reasoning done by computers which..... IS POSSIBLE, it's not very popular rn and often not useful beyond trivial things with current systems but like..... if a Prolog system tells me something is true or false, I know it's true or false because the system proved it ("backwards" usually in practice) based on a series of logical inferences from facts that me and the system hold as true and I can actually look at how the system came to that conclusion, no vibes involved. There is not a lot of development of this type of AI going on these days..... but if you're curious, would rec looking into automated theorem proving cuz that's where most development of uhhhh computable logic is going on rn and it is kinda incredible sometimes how much these systems can make doing abstract math easier and more automatic. Even outside of that, as someone who has only done imperative programming before, it is surreal to watch a Prolog program be able to give you answers to problems both backwards and forwards regardless of what you were trying to accomplish when you wrote the program. Like if you wrote a program to solve a math puzzle, you can also give the solution and watch the program give possible problems that could result in that solution :3 and that's barely even the beginning of what real computer reasoning systems can do
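A rough way to picture the "backwards and forwards" part without installing Prolog: the sketch below treats addition as a relation rather than a function, so you can leave any argument unknown and get every matching combination back. This is plain brute-force enumeration over a small made-up domain, not Prolog's actual unification and resolution machinery, and `add_rel` is a hypothetical name for the example.

```python
from itertools import product


def add_rel(x=None, y=None, z=None, domain=range(0, 10)):
    """Yield every (x, y, z) in the domain with x + y == z that matches the known values.

    Any argument left as None is treated as an unknown to be solved for.
    """
    for a, b in product(domain, repeat=2):
        c = a + b
        if c not in domain:
            continue
        if x is not None and a != x:
            continue
        if y is not None and b != y:
            continue
        if z is not None and c != z:
            continue
        yield (a, b, c)


# "Forwards": both inputs known, solve for the result.
print(list(add_rel(x=2, y=3)))  # [(2, 3, 5)]

# "Backwards": only the result known, enumerate the problems that produce it.
print(list(add_rel(z=3)))  # [(0, 3, 3), (1, 2, 3), (2, 1, 3), (3, 0, 3)]
```

A real Prolog or constraint logic programming system gets the same effect without enumerating everything, and it can show you the chain of inferences it used, which is the inspectability point being made above.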

Did you hear Tesla is using Grok and crypto to power their self-driving cars? You should look into that, it's very exciting and has much more substance than Prolog ever did I hear

Next time I have to use a database, I'll use ChatGPT to query it rather than SQL or Prolog (you can find both in modern database systems) cuz it's much better at reasoning, powered by Nvidia® GeForce™ GPUs of course

From other post:

Genuinely wtf are you talking about, it's an unending and tedious struggle to extract any kind of consistent "meaning" (assuming that is what a model's weights are even representing) out of the activation of LLM weights, it's not like a parser where if you can write it it can give you some kind of unambiguous parse of any given instructions. So much of modern AI research is just coaxing these fuckin things into acting right or figuring out wtf is going on inside of them. LLM-based parser is like a nightmare idea lol. Imagine trying to write a program for your emancipatory robot and it keeps lighting fires until you say "please" enough lmao. Debugging? How can you debug anything if you don't know why the model acts the way it does either way?

I love being emancipated from my job by a statistical model of the way Reddit users talk but without any of the substance of a Redditor as a person (not much) left, I can ask it to write a Marvel movie script about this situation as I pack up my desk

Do you work in STEM or something? 99.1% of the time I talk at these things for help with programming issues or whatever, I realize afterwards everything it output was basically useless but convincing enough that I actually attempted to use its advice before I realized everything it "said" was complete bullshit lol. But I don't write much slop code sooo idk lol

You have not only accepted but deeply internalized some kind of techbro singularity-brained idea of what these things actually do and are capable of and why they are in ascendance right now (investor grifting and the tiniest, minuscule chance to replace labor, which I guess is kinda possible in e-mail factory jobs), to the point of actually appealing to big tech companies as an argument. There is no difference between your mindset and the mindset of a Silicon Valley singularity-libertarian, everyone who isn't convinced of the incredible and awesome power of the treat machine is so far beneath your incredibly intelligent brain that has correctly predicted the imminent utopia ushered in by uhhh this one new really cool thing that we may as well be children to you, hapless dumb children who must be guided carefully to their emancipation. Which is like uhhhh the aforementioned job-emancipating but at least I might get to watch all the fake capital financial structures implode from trying and failing to make a labor-less production process out of AI that is very good at tasks like writing pizza recipes that use glue as an ingredient while I get evicted and freeze to death on the street


I never claimed to be some kind of expert lol, it's just that fuckin obvious you're full of shit lol. The fact you think these things can replace A DOCTOR is INCREDIBLE LMAO, more like ChatMD

I don't even hate these things particularly, I think they might be useful in a lot of fuzzier areas where being right or wrong doesn't rly matter. They can be pretty cool at times when they work right. I just don't think it's that impressive technologically and training them at such scales (the reason they work at all lol) currently requires forcing everyone to hand over everything they ever said or wrote on the internet to feed into it which is not cool. Omg, and the art stealing the image slop machines do

Btw, so many people (at least outside of a minority of AI researchers or math nerds) just write Prolog off if they even remember it exists without trying it. And so did I uncritically until recently, but it's actually pretty cool ime. It has many limitations (and just as many dialects to try to solve them lol) but there's still lots of cool research going on around it like in constraint logic programming or the application of advances in automated theorem proving or SMT solvers to Prolog programs. Am still trying to wrap my head around the math involved but yeh. Have you ever actually looked into it?

This is exactly what I'm talking about. This is potentially the biggest technological innovation in a long time and it's going to completely sideswipe all of you because of this toxic attitude. Separate the players from the actual game.

Thankfully planners in China don't succumb to this western learned helplessness routine or they'd miss out on all the potential gains of the last few decades by sleeping on the extremely obvious potential for factory automation.

Like how can you see this kind of thing and just be like treat printer bazinga boil the oceans waifu etc. Is it perfect yet? No, did it just come out in the past couple years and already obviate decades of expert systems research? Yes, it absolutely did.

It's not sideswiping me, at best it's useless to me, at worst it's a threat to me as a worker. You can't separate the "players from the game" or the technology from the social system which produced it. They are already trying to automate away people's jobs regardless of whether it works or not and, like you mentioned in a dismissive manner for some reason, it is indeed extremely environmentally destructive to train and use these models on a mass scale even in places where already existing technology works

Here's some more bold text: we should combat their use and deployment as much as possible. If China gets something good out of them without "boiling the oceans", fine, good, idc lol. In the west this is a serious problem. And besides, China has similar Silicon Valley neoliberal techbro brainworms, I just hope the CPC keeps those people in check during all this lol (I expect this bubble to collapse in a big way in the medium-term future)

Idek what to say, you're just vaguely gesturing to a fuckin product demo lmao

Nothing in that video was outside the capabilities of already existing AI technology, a lot of it isn't even capable of being done by LLMs, like did you see that graphic in the bottom right? It looks like some kind of representation of the surrounding environment. LLMs just don't do that kind of thing lol, they probably have an actual knowledge base system somewhere inside that thing. Idk, you don't know either, cuz it's a product demo lol

If they somehow bolted on an LLM to the frontend so people can use more natural language for commands, that's cool, why not, but it doesn't rly change anything lol. I don't get how you see an LLM as even related to this, like what do you think an LLM is? It's just a language model, trying to coax it into doing action planning and somehow getting it to represent the world (idk what that means in the context of an LLM) sounds agonizing lol. They implied that is what's happening but I couldn't find any more details on their website. Idk maybe they have some research published somewhere...... or it's just pure vibes and buzzwords to attract the investor money like so many of these things are

What do you think LLMs add to this? They're not like magic good software that makes things good and work better when you have more of it and work bad when you have less. There's so much going on in that video, none of which an LLM can rly help with besides maybe processing the initial command into a form that can be parsed more easily by and carried out by traditional AI techniques. It def didn't obviate any of it lol

Yeah, great I look forward to the western left leading the butlerian jihad. There's a reason why Luddites are synonymous with getting jack shit done, and I would expect so called materialists to make the cold calculation necessary to understand this.

It's obviously not just language models at work here, it's transformer based architectures in general. Why do you think we can generate video, text, and transcribe audio, and a host of other things like protein discovery all dramatically better than a couple years ago? And this all happened around the same time? Major tech companies are currently folding up their prior machine learning efforts because they've been BTFO by this leap in tech. This is something that is absolutely happening in the ML space across the software industry.

The fact that you think I'm even talking about LLMs exclusively is such a myopic view of what's really going on. There has been an explosion of robotics breakthroughs in the past year alone because of this kind of thing, it's not just that video, look at what Unitree is doing or any of the other Chinese robotics companies that are dominating this space now.

You guys are the redditors you hate when you come at this with the same energy as a liberal about genocide in Xinjiang or something, like you've already made up your mind. Just believe what you want to believe about it. I'm done trying to educate you. Only time will tell and it's not like I get anything out of it when the goalposts move yet again.

Truly a condescending energy I rarely see on here

We're talking about the same shit lol, a natural language is just another process for these things to model. I never said it wasn't an advancement over previous methods, it's obviously better than a Markov chain or previous architectures of neural networks. Idek what we're arguing about anymore. What is your ultimate point here?

I am also tired of arguing (I'm not arrogant enough to call it educating lol). But I will look into those companies you mentioned

Sorry to be a dickhead. This is just what a strong difference of opinion looks like. Everyone jumps up my ass about daring to say that "AI" is not just a grift but an actual threat (and opportunity). Like yes, silicon valley are grifters, but that doesn't preclude them from cooking up useful engineering once in a while.

My whole point is that we need to abandon our immature/dirtbag analysis of this issue and get more professionalized about things or we're gonna get really rinsed in the 21st century.

Eh it's okay, no hard feelings, I probably also got too heated :3

Would be down to talk more about it with you later cuz it is a rly important issue, not to mention technically fascinating

Rn I am out of spoons for internet talking, will be back later though


Because of the way society runs, everything we do is tremendously damaging to the environment unfortunately. The upside of that is that people who want to automate labour have a lot of carbon budget to work with e.g. keeping people off roads and out of offices and such. With algorithmic and hardware efficiencies that are already slated we may end up saving energy in the near future.

There's nothing we can really do to stop these systems from being utilized either, any more than we can ban gaming hardware (based). But it's sort of a prisoner's dilemma like military spending.