Reminded me of those couples that only know how to coexist by arguing constantly.

It's a bad idea because AI doesn't "know" in the same way humans do. If you set it to be contrarian, it will probably disagree with you even if you're right. The problem is the inaccuracy, not whether it agrees with you.

> It's a bad idea because AI doesn't "know" in the same way humans do.

Does that matter? From the user's perspective, it's a black box that takes inputs and produces outputs. The epistemology of what knowledge actually means is kinda irrelevant to the decisions of how to design that interface and decide what types of input are favored and which are disfavored.

It's a big ol matrix with millions of parameters, some of which are directly controlled by the people who design and maintain the model. Yes, those parameters can be manipulated to be more or less agreeable.

I'd argue that these models are currently way too deferential to the user: they place too much weight on agreeing with the user's input, even when that input contradicts a bunch of the other parameters.

Internally, the model still has a way of combining what it has seen to identify a consensus, tying together tokens that actually do correspond to real words carrying real semantic meaning. It's just that current models obey the user so much that they'll override a real consensus, or manufacture consensus where none exists.

I don't see why someone designing an LLM can't manipulate the parameters to be less deferential to the claims, or even the instructions, given by the user.

We aren't advanced enough yet to create anything more complicated than the first one.

It shouldn't be difficult if they wanted to. The models are meant to be a product, though, so the incentive is to make them likeable. I've seen RP-focused ones that aren't so agreeable.

The only real option should be "Untrustworthy: honestly doesn't know shit and reminds you that it cannot reason or think and just pukes up chains of words based on probabilities derived from a diffuse mishmash of the entire internet's data. Might accidentally be correct, occasionally."

This will not happen because disagreeing is more difficult than agreeing.

Both on a user-attitude level and on a technical level.

If the AI challenges the user, the user will challenge it back. Consequently, the user will be more suspicious of any given answer and will look for logical flaws in the LLM's response (hint: that is really easy), which will ruin the perception that the LLM is smart.

Just train it on StackOverflow answers! Kidding, of course, although it probably could pick up a few hostile non-responses such as "what are you even trying to do here?" and "RTFM"

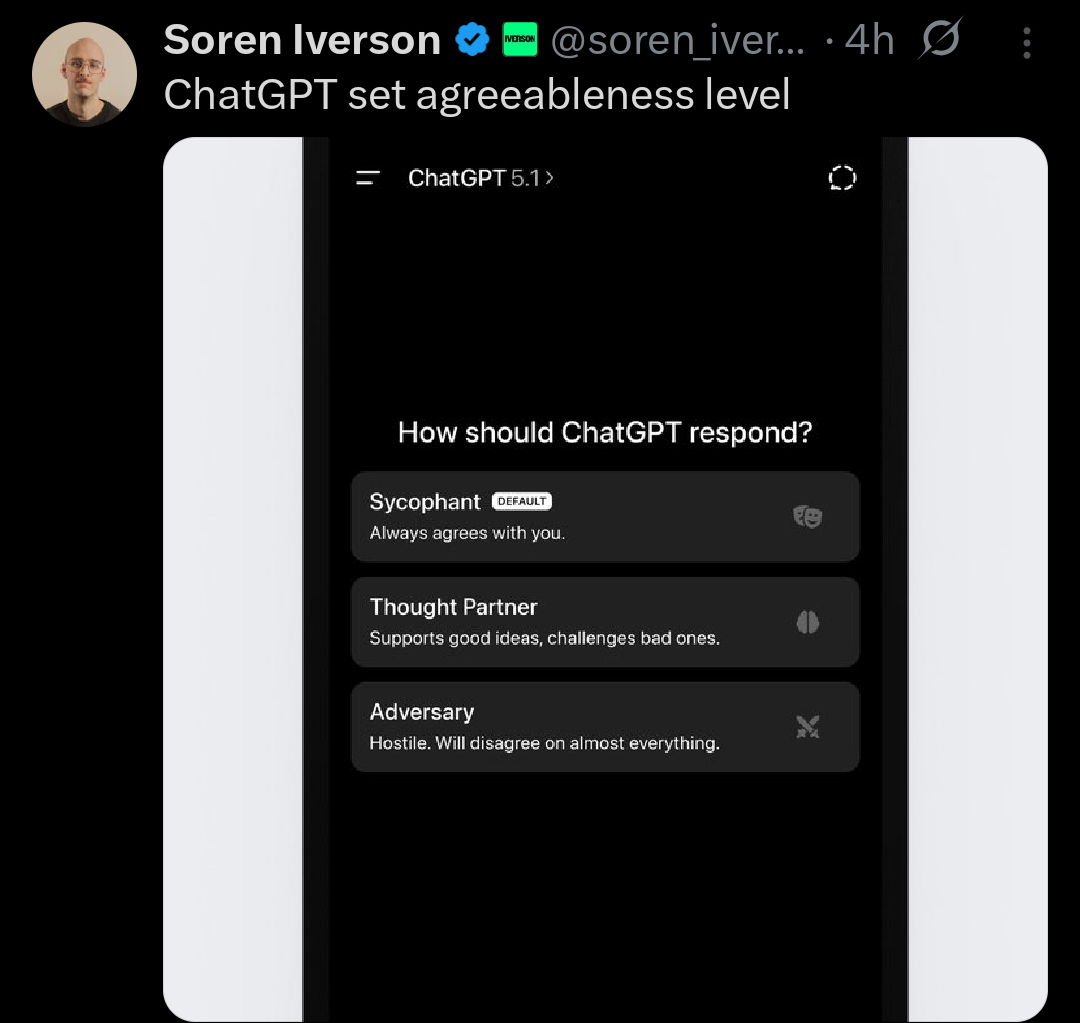

Make the middle default and the "Sycophancy Mode" an expensive premium feature to discourage use.

How would it differentiate between good and bad ideas?

Easy. If it came from reddit or a chan forum, bad idea.

It can't. It repeats random stuff like a parrot.