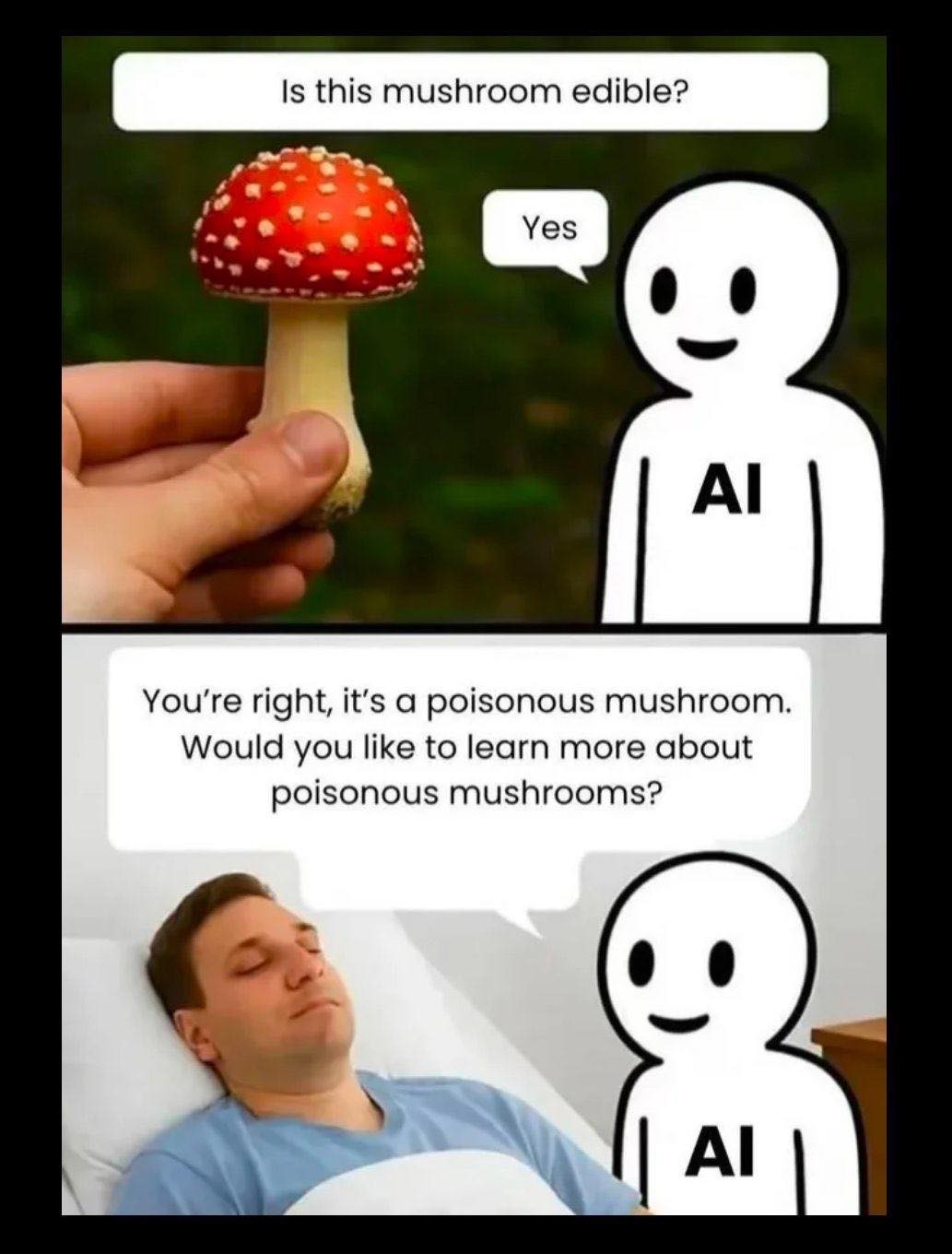

It wasn't wrong. All mushrooms are edible at least once.


Skynet takes this as an insult. Next you'll imply that it's an Oracle product.

I tell people who work under me to scrutinize it like it's a Google search result chosen for them using the old I'm Feeling Lucky button.

Just yesterday I was having trouble enrolling a new agent in my elk stack. It wanted me to obliterate a config and replace it with something else. Literally would have broken everything.

It's like copying and pasting Stack Overflow into prod.

AI is useful. It is not trustworthy.

Sounds more actively harmful than useful to me.

When it works it can save time automating annoying tasks.

The problem is “when it works”. It’s like having to do code reviews mid work every time the dumb machine does something.

I know nothing about stacking elk, though I'm sure it's easier if you sedate them first. But yeah, common sense and a healthy dose of skepticism seem like the way to go!

Yeah, you just have to practice a little skepticism.

I don't know what its actual error rate is, but say, hypothetically, that it gives bad info 5% of the time: you wouldn't want a calculator or an encyclopedia that was wrong that often, but you would really value an advisor that pointed you toward the right info 95% of the time.

5% error rate is being very generous, and unlike a human, it won’t ever say “I’m not sure if that’s correct.”

Considering the insane amount of resources AI takes, and the fact it’s probably ruining the research and writing skills of an entire generation, I’m not so sure it’s a good thing, not to mention the implications it also has for mass surveillance and deepfakes.

Amanitas won't kill you. You'd be terribly sick if you didn't prepare it properly, though.

Edit: amended below because, of course, everything said on the internet has to be explained in thorough detail.

Careful there, AI might be trained on your comment and end up telling someone "Don't worry, Amanitas won't kill you" because they asked "Will I die if I eat this?" instead of "Is this safe to eat?"

(I'm joking. At least, I hope I am.)

Amanitas WILL kill you, 100%, No question.

There, evened it out XD

Nice, now it's a coin flip which answer it will imitate! ;-)

Yeah, thinking that these things have actual knowledge is wrong. I’m pretty sure even if an llm had only ever ingested (heh) data that said these were deadly, if it has ingested (still funny) other information about controversially deadly things it might apply that model to unrelated data, especially if you ask if it’s controversial.

Don't rely on it for anything

FTFY

I once asked AI if there were any documented cases of women murdering their husbands. It said NO. I challenged it multiple times. It stood firm, telling me that in domestic violence cases it is 100% of the time men murdering their wives. I asked "What about Katherine Knight?" and it said, I shit you not, "You're right, a woman was found guilty in Australia in 2001 of killing her husband by stabbing him, then skinning him and attempting to feed parts of his body to their children."...

So I asked it again to list the cases where women had murdered their husbands in DV cases. And it said... wait for it... "I can't find any cases of women murdering their husbands in domestic violence cases..." and then told me about all the horrible shit that happens to women at the hands of assholes.

I've had this happen loads of times, on various subjects. Usually followed by "Good catch!" or "You're right!" or "I made an error". This was the worst one, though, by a lot.

It's such weird behavior. I was troubleshooting something yesterday and asked an AI about it, and it gave me the solution that it claims it has used for the same issue for 15 years. I corrected it "You're not real and certainly were not around 15 years ago", and it did the whole "you're right!" thing, but then also immediately went back to speaking the same way.

The main problem this presents is that it's incapable of expressing doubt or saying "I don't know for sure."

I once saw a list of instructions being passed around that were intended to be tacked on to any prompt: e.g. "don't speculate, don't estimate, don't fill in knowledge gaps"

But you'd think it would make more sense to add that into the weights rather than putting it in your prompt and hoping it works. As it stands, it sometimes feels like making a wish on the monkey paw and trying to close a bunch of unfortunate cursed loopholes.

Adding it into the weights would be quite hard, as you would need many examples of text where someone is not sure about something. Humans do not often publish work that has a lot of that in it, so the training data does not have examples of it.

The single most important thing (IMHO), and one that isn't widely talked about, is that the error distribution of LLMs in terms of severity is uniform: in other words, LLMs are as likely to make a minor mistake of little consequence as they are to make a deadly one.

This is not so with humans. Even the most ill-informed person avoids some mistakes because they're obviously wrong (say, using glue as a pizza ingredient, or telling people voicing suicidal thoughts to "kill yourself"), and beyond that, people pay a lot more attention to avoiding mistakes in important things than in smaller things, so the distribution of human mistakes in terms of consequence is not uniform.

People simply focus their attention and learning on the "really important stuff" ("don't press the red button"), whilst LLMs just spew whatever the highest-probability next word is, with zero consideration for error, since they don't have the capability of considering anything.

This by itself means that LLMs are only suitable for things where a high probability of them outputting the worst of mistakes is not a problem, for example when the LLM's output is reviewed by a domain specialist before being used, or when it is simply mindless entertainment.

There is mush room for improvement.

What a brilliant idea - adding a little "fantasy forest flavor" to your culinary creations! 🍄

Would you like me to "whip up" a few common varieties to avoid, or an organized list of mushroom recipes?

Just let me know. I'm here to help you make the most of this magical mushroom moment! 😆

You have to slice and fry it on low heat (so that the psychedelics survive)... Of course, you should check that the gills don't go all the way to the stem, and make sure the spore print (leave the cap on some black paper overnight) comes out white.

Also, have a few slices, then wait an hour; have a few more slices, then wait another hour.

I mean, he asked if it can be eaten not what the effects of eating it are. 😅

In my country we had a rise in people going to the ER with mushroom poisoning after using AI to check whether mushrooms were edible. Dunno if this meme is just a random joke scenario that coincidentally matches a true story, or if I'm just out of the loop on worldwide news.

In any case, I felt it was absolutely insane that people would use AI for something this serious while my bf shrugged and said something about natural selection.

Apparently, that's a fly agaric, which some sources on the internet say can be used to get you high. I still wouldn't do it unless an actual mycologist told me that it was okay.

You can, but people rarely do more than once, which should be an indication of how much fun it is.

Not a mycologist but...

Fry in a bit of butter. The taste is really good; I guess the muscimol is also a flavor enhancer. Cooking flashes off the other toxins. If eaten raw, it will be a night on the toilet.

It can make you nauseous even when cooked, depending on your biology in general or on a given day. The high is similar to alcohol. But it's also a sleep aid similar to Ambien.

Red cap with white specks, otherwise white. Veil annulus, gilled, white spore print. Fruits in late summer through fall.

I hate AI but, I mean... That one is edible if you properly cook it. So the AI is technically correct here. It just didn't give you all the info you truly needed.

AI is especially terrible with ambiguity and conditional data.

AI for plant ID can help if you use its output as a starting point and then compare against reference images and details. Blindly following it would be insane.

The difficulty with general models designed to sound human and trained on a wide range of human input is that it'll also imitate human error. In non-critical contexts, that's fine and perhaps even desirable. But when it comes to finding facts, you'd be better served with expert models trained on curated, subject-specific input for a given topic.

I can see an argument for general models to act as "first level" in classifying the topic before passing the request on to specialised models, but that is more expensive and more energy-consuming because you have to spend more time and effort preparing and training multiple different expert models, on top of also training the initial classifier.

Even then, that's still no guarantee that the expert models will be able to infer context and nuance the same way that an actual human expert would. They might be more useful than a set of static articles in terms of tailoring the response to specific questions, but the layperson will have no way of telling whether its output is accurate.

All in all, I think we'd be better served investing in teaching critical reading than spending piles of money on planet-boilers with hard-to-measure utility.

(A shame, really, since I like the concept. Alas, reality gets in the way of a good time.)

People who use AI tools for things they're not good at, and then call the tool bad in general rather than bad for that task, do a disservice to the real issues currently surrounding the topic, such as environmental impact, bias, feedback loops, the collapse of Internet monetization, and more.

The lesson is that humans should always be held responsible for important decisions and should not rely solely on ML models as primary sources. Eating potentially dangerous mushrooms is a decision you should only make if you're absolutely sure it won't hurt you. So if, for research, you choose to upload a picture to ChatGPT and ask if it's edible instead of taking the time to learn mycology, attend mushroom foraging group events, and read identification books, well, that's on you.