this post was submitted on 13 Nov 2025

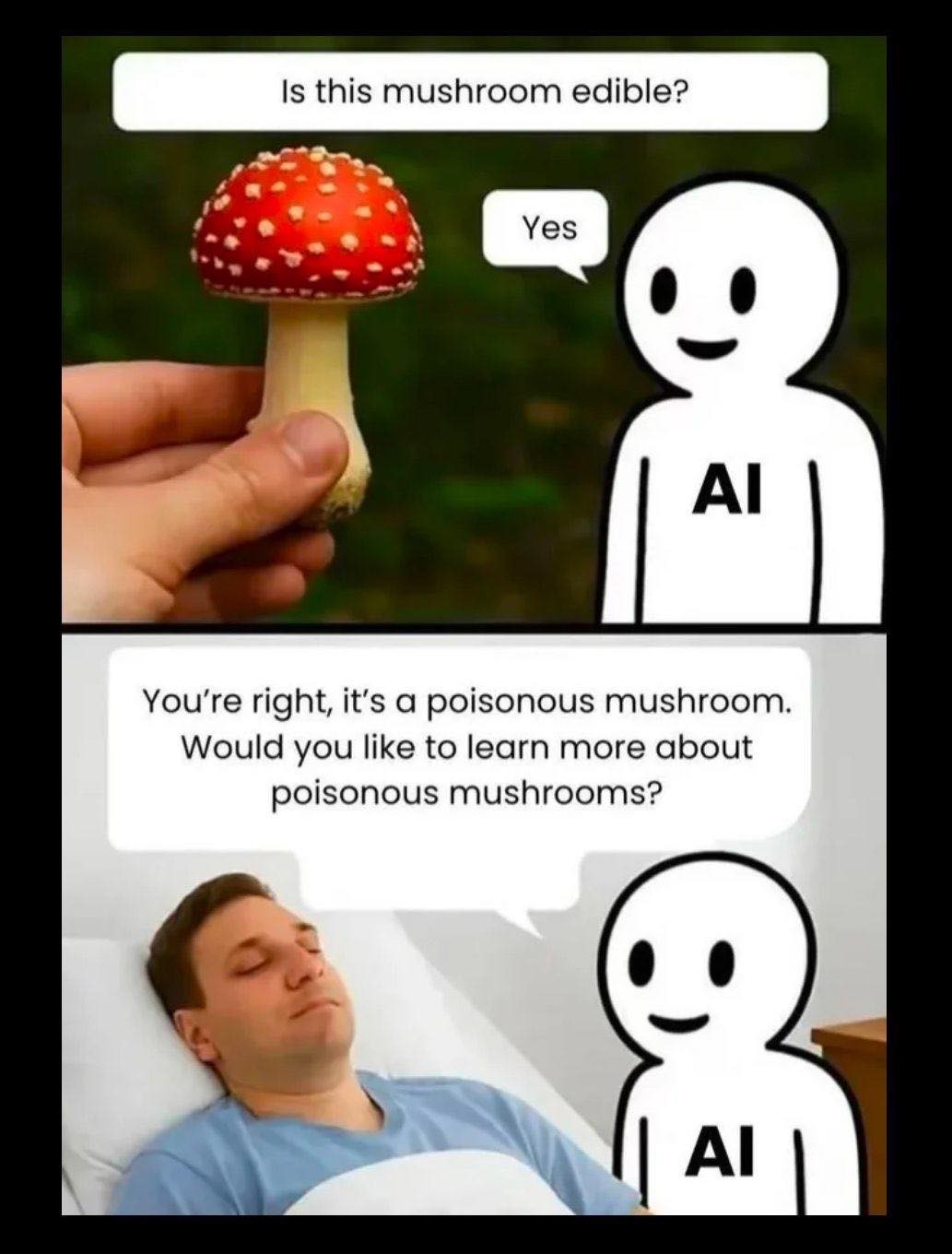

Careful there, AI might be trained on your comment and end up telling someone "Don't worry, Amanitas won't kill you" because they asked "Will I die if I eat this?" instead of "Is this safe to eat?"

(I'm joking. At least, I hope I am.)

Amanitas WILL kill you, 100%, no question.

There, evened it out XD

Nice, now it's a coin flip which answer it will imitate! ;-)

Yeah, thinking that these things have actual knowledge is wrong. I’m pretty sure that even if an LLM had only ever ingested (heh) data saying these were deadly, it might still take a pattern it ingested (still funny) from other things whose deadliness is disputed and apply it to this unrelated case, especially if you ask whether it’s controversial.

They have knowledge: the probability of words and phrases appearing in a larger context of other phrases. They probably have a knowledge of language patterns far more extensive than most humans. That's why they're so good at coming up with texts for a wide range of prompts. They know how to sound human.

That in itself is a huge achievement.

But they don't know the semantics, the world-context outside of the text, or why it's critical that a certain section of the text refers to a source that actually exists.

The pitfall here is that users might not be aware of this distinction. Even if they are, they might not have the necessary knowledge themselves to verify the answer. It's obvious that this machine is smart enough to understand me and respond appropriately, but we must be aware of just which kind of smart we're talking about.
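
For anyone who wants to see the "probability of words in context" point and the coin-flip joke in miniature, here is a toy sketch. It is nothing like a real LLM, just a word-follows-word frequency counter, and the function names (`train`, `next_word_probs`) and the two-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict

def train(corpus, context_size=1):
    """Count which word follows each context of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    return counts

def next_word_probs(counts, context):
    """Turn raw follow-up counts into probabilities for one context."""
    followers = counts[tuple(context)]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}

# Hypothetical "training data": the two contradictory comments above.
corpus = [
    "amanitas will kill you",
    "amanitas won't kill you",
]
counts = train(corpus, context_size=1)
probs = next_word_probs(counts, ["amanitas"])
print(probs)  # {'will': 0.5, "won't": 0.5}
```

The counter has no idea what a mushroom is. It only knows that after "amanitas" the words "will" and "won't" each showed up once, so either continuation is equally likely.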