this post was submitted on 05 Jul 2024

1070 points (95.3% liked)

Memes


Rules:

- Be civil and nice.

- Try not to excessively repost, as a rule of thumb, wait at least 2 months to do it if you have to.

founded 6 years ago


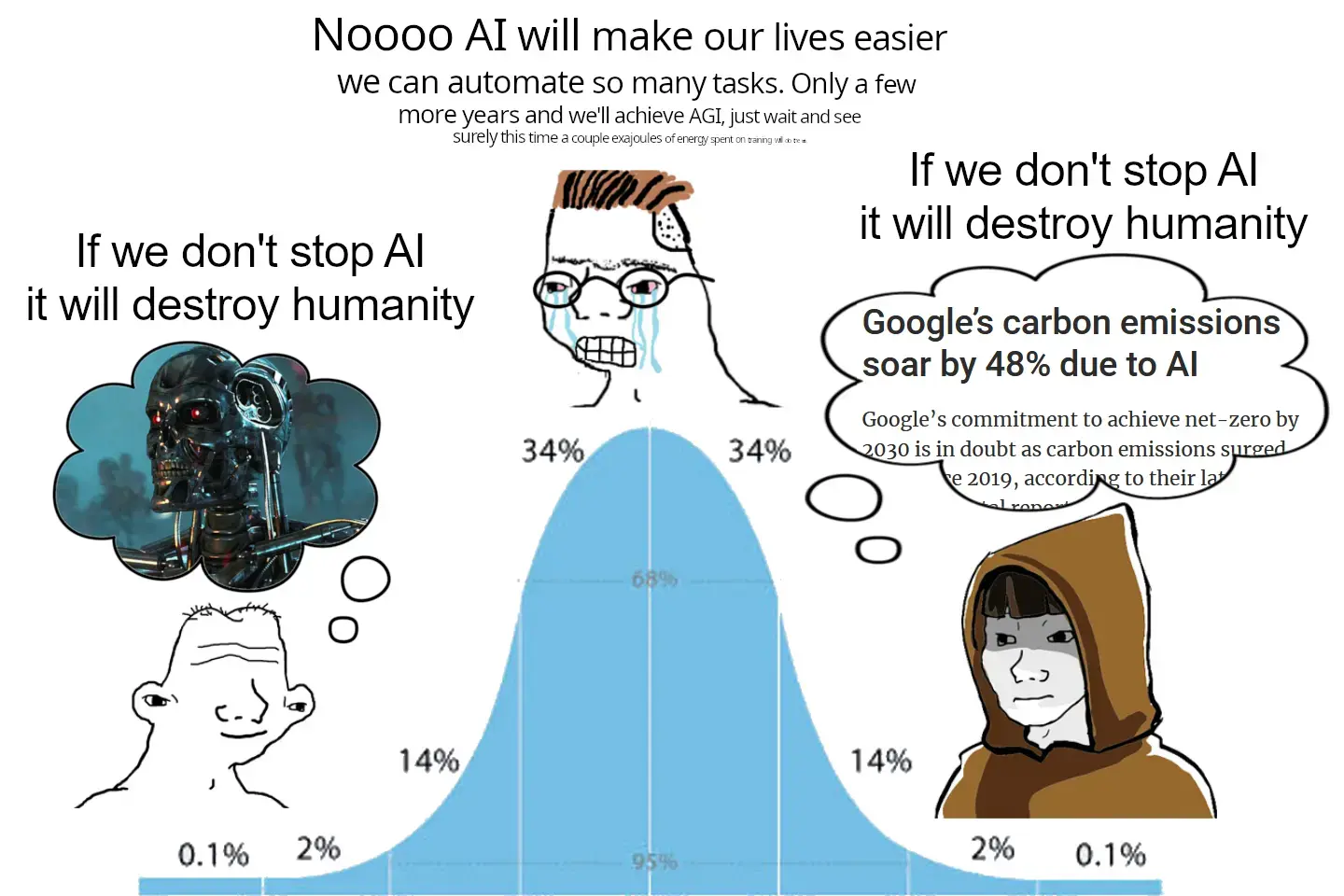

So the problem isn't the technology. The problem is unethical big corporations.

Same as it ever was...

And the days go by!

Disagree. The technology will never yield AGI as all it does is remix a huge field of data without even knowing what that data functionally says.

All it can do now and ever will do is destroy the environment by using oodles of energy, just so some fucker can generate a boring big titty goth pinup with weird hands and weirder feet. Feeding it exponentially more energy will do what? Reduce the number of fingers and the foot weirdness? Great. That is so worth squandering our dwindling resources on.

We definitely don't need AGI for AI technologies to be useful. AI, particularly reinforcement learning, is great for teaching robots to do complex tasks, for example. LLMs have a shocking ability relative to other approaches (if limited compared to humans) to generalize to "nearby but different enough" tasks. And once they're trained (and possibly quantized), they (LLMs and reinforcement learning policies) don't require that much more power to run compared to traditional algorithms. So IMO, the question should be "is it worthwhile to spend the energy to train X thing?" Unfortunately, the capitalists have been the ones answering that question because they can do so at our expense.

For a person without access to big computing resources (me lol), there's also the fact that transfer learning is possible for both LLMs and reinforcement learning. Easiest way to explain transfer learning is this: imagine that I want to learn Engineering, Physics, Chemistry, and Computer Science. What should I learn first so that each subject is easy for me to pick up? My answer would be Math. So in AI speak, if we spend a ton of energy to train an AI to do math and then fine-tune agents to do Physics, Engineering, etc., we can avoid training all the agents from scratch. Fine-tuning can typically be done on "normal" computers with FOSS tools.

IMO that can be an incredibly useful approach for solving problems whose dynamics are too complex to reasonably model, with the understanding that the obtained solution is a crude approximation to the underlying dynamics.

IMO I'm waiting for the bubble to burst so that AI can be just another tool in my engineering toolkit instead of the capitalists' newest plaything.

Sorry about the essay, but I really think that AI tools have a huge potential to make life better for us all, but obviously a much greater potential for capitalists to destroy us all so long as we don't understand these tools and use them against the powerful.
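A toy sketch of the transfer-learning idea from the comment above, assuming a one-variable linear model trained with plain gradient descent in Python. The "base" and "target" tasks, the learning rate, and the step budget are all made up for illustration; real fine-tuning of LLMs or RL policies involves vastly larger models, but the principle of starting from pretrained weights instead of from zero is the same.

```python
# Toy illustration of transfer learning as warm-starting (hypothetical tasks):
# learn a "base" task once, then reuse its weights for a related "target" task.

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(data, w=0.0, b=0.0, steps=100, lr=0.05):
    """Plain gradient descent on MSE, starting from the given (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [i / 10 for i in range(-10, 11)]           # 21 points in [-1, 1]
base_task = [(x, 2.0 * x + 1.0) for x in xs]    # the "expensive" pretraining task
target_task = [(x, 2.0 * x + 1.2) for x in xs]  # a related, "nearby" task

# Spend a large budget on the base task once.
w0, b0 = train(base_task, steps=2000)

# Give the fine-tuned and from-scratch models the same small budget.
budget = 5
w_ft, b_ft = train(target_task, w=w0, b=b0, steps=budget)
w_sc, b_sc = train(target_task, steps=budget)

finetuned_loss = mse(w_ft, b_ft, target_task)
scratch_loss = mse(w_sc, b_sc, target_task)
print(f"fine-tuned: {finetuned_loss:.4f}  from scratch: {scratch_loss:.4f}")
```

With the same five-step budget, the warm-started model ends up much closer to the target task than the one trained from zero, because it only has to learn the small difference between the two tasks.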

Idk. I find it a great coding help. IMO AI tech has legitimate good uses.

Image generation also has great uses without falling into porn. It enables people who don't know how to paint to make some art.

depends. for "AI" "art" the problem is both terms are lies. there is no intelligence and there is no art.

Define art.

Any work made to convey a concept and/or emotion can be art. I'd throw in "intent", having "deeper meaning", and the context of its creation to distinguish between an accounting spreadsheet and art.

The problem with AI "art" is it's produced by something that isn't sentient and is incapable of original thought. AI doesn't understand intent, context, emotion, or even the most basic concepts behind the prompt or the end result. Its "art" is merely a mashup of ideas stolen from countless works of actual, original art run through an esoteric logic network.

AI can serve as a tool to create art of course, but the further removed from the process a human is the less the end result can truly be considered "art".

Well said!

As a thought experiment let's say an artist takes a photo of a sunset. Then the artist uses AI to generate a sunset and AI happens to generate the exact same photo. The artist then releases one of the two images with the title "this may or may not be made by AI". Is the released image art or not?

If you say the image isn't art, what if it's revealed that it's the photo the artist took? Does it magically turn into art because it's not made by AI? If not, does that mean that when people "make art" it's not art?

If you say the image is art, what if it's revealed it's made by AI? Does it magically stop being art, or does it become less artistic after the fact? Where does the value go?

The way I see it, you're trying to gatekeep art by arbitrarily claiming AI art isn't real art. I think since we're the ones assigning a meaning to art, how it is created doesn't matter. After all, if you're the artist taking the photo, isn't the original art piece just the natural occurrence of the sun setting? Nobody created it, there is no artistic intention there, it simply exists and we consider it art.

i won't, but art has intent. AI doesn't.

Pollock's paintings are art. a bunch of paint buckets falling on a canvas in an earthquake wouldn't make art, even if it resembled Pollock's paintings. there's no intent behind it. no artist.

The intent comes from the person who writes the prompt and selects/refines the most fitting image it makes.

AI is a tool used by a human. The human using the tools has an intention, wants to create something with it.

It's exactly the same as painting digital art. But instead of moving the mouse around, or copying other images into a collage, you use the AI tool, which can be pretty complex to use to create something beautiful.

Do you know what generative art is? It existed before AI. Surely with your gatekeeping you think that's not art either.

People said the exact same thing about CGI, and about photography before that. I wouldn't be surprised if somebody screamed "IT'S NOT ART" at Michelangelo or at the people carving the walls of temples in ancient Egypt.

the "people" you're talking about were talking about tools. I'm talking about intent. Just because you compare two arguments that use similar words doesn't mean the arguments are similar.

Technology is a cultural creation, not a magic box outside of its circumstances. "The problem isn't the technology, it's the creators, users, and perpetuators" is tautological.

And, importantly, the purpose of a system is what it does.

But not all users of AI are malignant or causing environmental damage.

Saying the contrary would be a bad generalization.

I have LLMs running on an N100 chip that consume less power than the Lemmy servers we are writing on right now.

So you're using a different, specific, and niche technology (which directly benefits from and exists because of the technology that is the subject of critique), and acting like the subject technology behaves like yours?

"Google is doing a bad with z"

"z can't be bad, I use y and it doesn't have those problems that are already things that happened. In the past. Unchangeable by future actions."

??

No. I'm just not fear mongering things I do not understand.

Technology is technology. Most famously, nuclear technology can be used both for bombs and for giving people electricity, which is a basic need.

Rockets can be used as weapons or to deliver spacecraft and do science in space.

Biotechnology can be used both to create and to cure diseases.

A technology is just an applied form of human knowledge. Wanting to ban human progress in any way is the true evil from my point of view.

No one wants to ban technology outright. What we're saying is that the big LLMs are actively harmful to us, humanity. This is not fear mongering. This is just what's happening. OpenAI, Google, Microsoft, and Meta are stealing from humanity at large and setting the planet on fire to do it. For years they told us stealing intellectual property on an individual level was a harmful form of theft. Now they're doing the same kind of theft, but it's different now because it benefits them instead of us.

What we are arguing is that this is bad. It's especially bad because, with the death of big search, a piece of critical infrastructure for the internet as we know it is now simply broken. The open source wonks you celebrate are working on fixing this. But just because someone criticizes big tech does not mean they criticize all tech. The truth is the FAANG companies plus OpenAI and Microsoft are killing our planet so that only their biggest shareholders benefit.

I did not believe in Intellectual Property before. I'm not going to start believing in it now.

Just as I think corporations having a hold on media is bad for humankind, I think small artists should not have a "not usable by AI" hold on what they post. Sharing knowledge is good for humanity. Limiting who can access that knowledge or culture, or how they can use it, is bad.

The death of the internet has nothing to do with AI and everything to do with leaving the internet in the hands of a couple of big corporations.

As for emissions, they are insignificant relative to other sources of CO2. Do you happen to eat meat, travel abroad for tourism, watch sports, take your car to work, or buy products made overseas? Those are much bigger sources of CO2.

You don't think polluting the world is going to have a net negative effect on humanity?

What exactly is there to gain with AI anyways? What's the great benefit to us as a species? So far it's just been used to trivialize multiple artistic disciplines, basic service industries, and programming.

Things have a cost, many people are doing the cost-benefit analysis and seeing there is none for them. Seems most of the incentive to develop this software is if you would like to stop paying people who do the jobs listed above.

What do we get out of burning the planet to the ground? And even if you find an AI that's barely burning it, what's the point in the first place?

The whole point is that much like industrial automation it reduces the number of hours people need to work. If this leads to people starving then that's a problem with the economic system, not with AI technology. You're blaming the wrong field here. In fact everyone here blaming AI/ML and not the capitalists is being a Luddite.

It's also entirely possible it will start replacing managers and capitalists as well. It's been theorized by some anti-capitalists and economic reformists that ML/AI and computer algorithms could one day replace current economic systems and institutions.

This sadly is probably true of large companies producing big, inefficient ML models as they can afford the server capacity to do so. It's not true of people tweaking smaller ML models at home, or professors in universities using them for data analysis or to aid their teaching. Much like some programmers are getting fired because of ML, others are using it to increase their productivity or to help them learn more about programming. I've seen scientists who otherwise would struggle with data analysis related programming use ChatGPT to help them write code to analyse data.

As the other guy said there are lots of other things using way more energy and fossil fuels than ML. Machine learning is used in sciences to analyse things like the impacts of climate change. It's useful enough in data science alone to outweigh the negative impacts. You would know about this if you ever took a modern data science module. Furthermore being that data centres primarily use electricity it's relatively easy to move them to green sources of energy compared to say farming, or transport. In fact some data centres already use green energy primarily. Data centres will always exist regardless of AI and ML anyway, it's just a matter of scale.

Technology is a product of science. The facts science seeks to uncover are fundamental universal truths that aren't subject to human folly. Only how we use that knowledge is subject to human folly. I don't think open source or open weights models are a bad usage of that knowledge. Some of the things corporations do are bad or exploitative uses of that knowledge.

Always has been

This has been going on since big oil popularized the "carbon footprint". They want us arguing with each other about how useful crypto/AI/whatever are instead of agreeing about Pigouvian energy taxes and socialized control of the (already monopolized) grid.

Considering most new technology these days is merely a distillation of the ethos of the big corporations, how do you distinguish?

Not true though.

Current generative AI has its basis in the work of Frank Rosenblatt and other scientists working mostly in universities.

Big corporations have built implementations, but the science behind them already existed. It was not created by those corporations.