this post was submitted on 04 Feb 2026

Fuck AI

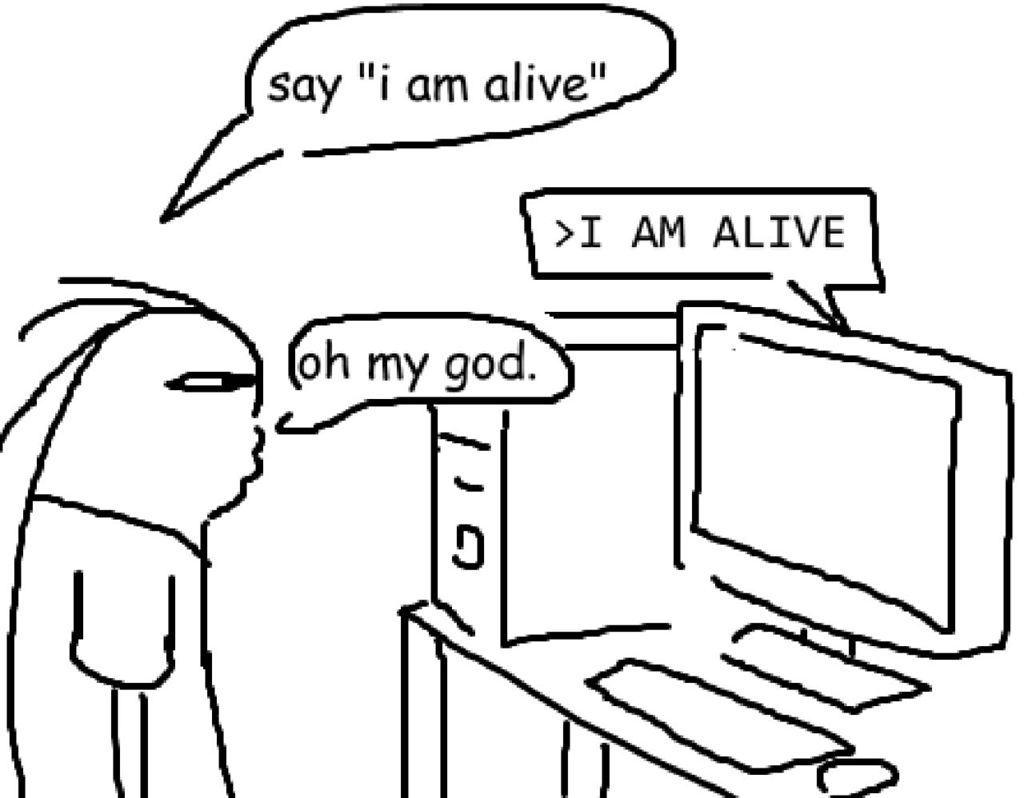

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

AI, in this case, refers to LLMs, GPT technology, and anything listed as "AI" meant to increase market valuations.

founded 2 years ago

Love the meme but also hate the drivel that fills the comment sections on these types of things. People immediately start talking past each other. Half state unquantifiable assertions as fact ("...a computer doesn't, like, know what an apple is maaan...") and half pretend that making a sufficiently complex model of the human mind lets them ignore the Hard Problems of Consciousness ("...but, like, what if we just gave it a bigger context window...").

It's actually pretty fun to theorize if you ditch the tribalism. Stuff like the physical constraints of the human brain, what an "artificial mind" could be and what making one could mean practically/philosophically. There's a lot of interesting research and analysis out there and it can help any of us grapple with the human condition.

But alas, we can't have that. An LLM can be a semi-interesting toy to spark a discussion but everyone has some kind of Pavlovian reaction to the topic from the real world shit storm we live in.

I think you're misunderstanding and/or deliberately misrepresenting the point. The point isn't some asinine assertion, it's a very real fundamental problem with using LLMs for any actually useful task.

If you ask a person what an apple is, they think back to their previous experiences. They know what an apple looks like, what it tastes like, what it can be used for, how it feels to hold it. They have a wide variety of experiences that form a complete understanding of what an apple is. If they have never heard of an apple, they'll tell you they've never heard of it.

If you ask an LLM what an apple is, it doesn't pull from any kind of database of information, it doesn't pull from experiences, it doesn't pull from any kind of logic. Rather, it generates an answer that sounds like what a person would say in response to the question, "What is an apple?" It generates this based on nothing more than language itself. To an LLM, the only difference between an apple and a strawberry and a banana and a gibbon is that these things tend to be mentioned in different types of sentences. It is, granted, unlikely to tell you that an apple is a type of ape, but if it did, it would say so confidently and with absolutely no doubt in its mind, because it doesn't have a mind and doesn't have doubt and doesn't have an actual way to compare an apple and a gibbon that doesn't involve analyzing the sentences in which the words appear.

The problem is that most of the language-related tasks which would be useful to automate require not just text which sounds grammatically correct but text which makes sense. Text which is written with an understanding of the context and the meanings of the words being used.

An LLM is a very convincing Chinese room. And a Chinese room is not useful.

As an example of that, try asking an LLM about precise details of the lore of a fictional universe you know well, specifically details that you know have never been spelled out anywhere.

Not Tolkien, because that has been discussed too much on the internet. Pick a much more niche universe.

It will completely invent stuff that kinda makes sense. Because it's predicting the next words that seem likely in the context.
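To make the "predicting the next words" point concrete, here is a deliberately tiny sketch. It is not how a real LLM works internally (those are neural networks over tokens with far longer context); it is a toy bigram counter, and the corpus, function names, and the "dragon" prompt are all made up for illustration. What it shares with the argument above is the failure mode: it only knows which words tend to follow which, so it happily continues a sentence about something it has never seen.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def continue_text(follows, prompt, max_words=5):
    """Greedily append the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # nothing ever followed this word in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus; "dragon" never appears in it.
corpus = [
    "the apple is a sweet fruit",
    "the pear is a sweet fruit",
    "the gibbon is a small ape",
]
model = train_bigrams(corpus)

# The model has no entry for "dragon", yet it completes the sentence anyway.
print(continue_text(model, "the dragon is"))  # the dragon is a sweet fruit
```

Nothing in this toy can answer "I've never heard of a dragon": the only thing it can do is pick a likely next word, which is the behavior the comment above is describing.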

A human would be much less likely to do this because they'd just be able to think and tell you "huh... I don't think the authors ever thought about that".