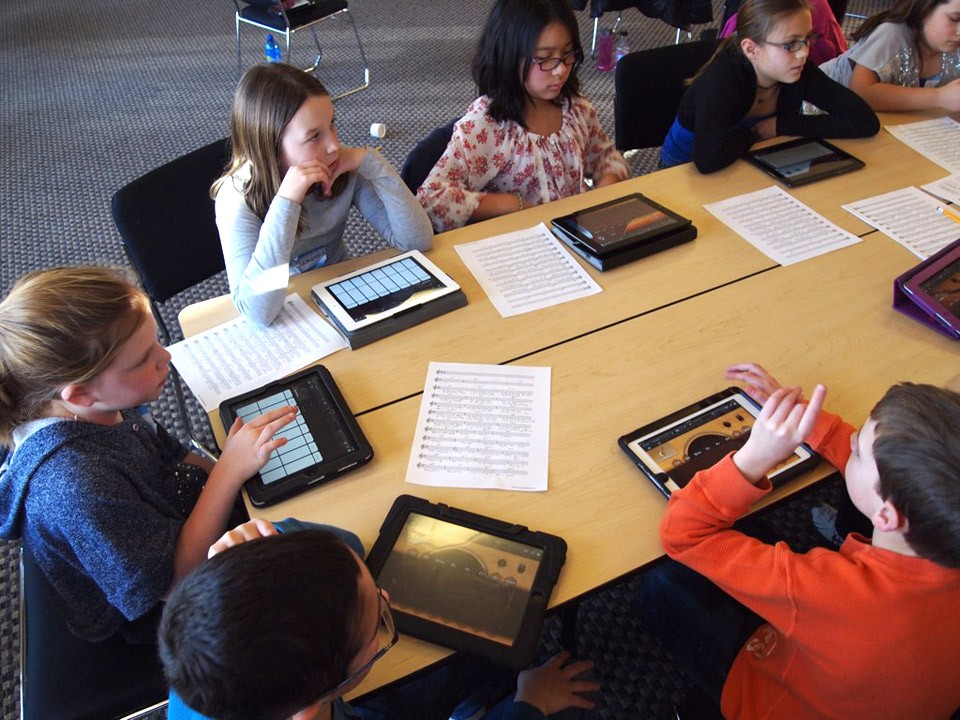

Across the world, schools are wedging AI between students and their learning materials. In some countries, more than half of all schools have already adopted it (often an "edu" version of a model like ChatGPT or Gemini), usually in the name of preparing kids for the future, even though no consensus exists about what preparing them for the future actually means where AI is concerned.

Some educators have said that they believe AI is not that different from previous cutting-edge technologies (like the personal computer and the smartphone), and that we need to push the "robots in front of the kids so they can learn to dance with them" (paraphrasing a quote from Harvard professor Houman Harouni). This framing ignores the obvious fact that AI is by far the most disruptive technology we have yet developed. Any technology that has experts and developers alike (including Sam Altman a couple of years ago) warning of the need for serious regulation to avoid potentially catastrophic consequences probably isn't something we should take lightly. In very important ways, AI isn't comparable to the technologies that came before it.

The educators in favor of AI adoption in schools don't seem to have very solid arguments for rushing it into virtually all classrooms rather than offering something like optional college courses in AI for those interested. Their reasoning also doesn't sound like the sort of academic rigor and careful vetting many of us would have expected from the institutions tasked with the important responsibility of educating our kids.

ChatGPT was released roughly three years ago. Anyone who uses AI generally recognizes that its actual usefulness is highly subjective. And as much as it might feel like it's been around for a long time, three years is hardly enough time to have a firm grasp on what something that complex actually means for society or education. It's really a stretch to say it's had enough time to establish its value as an educational tool, even if we had come up with clear and consistent standards for its use, which we haven't. We're still scrambling and debating about how we should be using it in general. We're still in the AI wild west, untamed and largely lawless.

The bottom line is that the benefits of AI to education are anything but proven at this point. The same can be said of the vague notion that every classroom must have it right now to prevent children from falling behind. Falling behind how, exactly? What assumptions are being made here? Are they founded on solid, factual evidence or merely speculation?

The benefits to Big Tech companies like OpenAI and Google, however, seem fairly obvious. They get their products into the hands of customers while they're young, potentially building loyalty to their brands and products early. They get a wealth of highly valuable data on them. They may get to experiment on them, as they have previously been caught doing. They reinforce the corporate narrative behind AI: that it should be everywhere, a part of everything we do.

While some may want to assume that these companies are doing this as some sort of public service, the track record of these corporations reveals a consistent pattern of actions that are obviously focused on considerations like market share, commodification, and the bottom line.

Meanwhile, there are documented problems educators are contending with in their classrooms as many children seem to be performing worse and learning less.

The way people (of all ages) use AI has often been shown to lead to a tendency to "offload" thinking onto it, which seems close to the opposite of learning. Even before AI, test scores and other measures of student performance had been plummeting. This seems like a terrible time to risk making our children guinea pigs in some broad experiment with poorly defined goals and unregulated, unproven technologies which, in their current form, may actually be more of an impediment to learning than an aid.

This approach has the potential to leave children even less prepared to deal with the unique and accelerating challenges our world is presenting us with, which will require the same critical thinking skills which are currently being eroded (in adults and children alike) by the very technologies being pushed as learning tools.

This is one of the many crazy situations happening right now that terrify me when I try to imagine the world we might actually be creating for ourselves and future generations, particularly given my own experiences and what I've heard from others. One quick look at the state of society today will tell you that even we adults are becoming increasingly unable to determine what's real anymore, in large part thanks to the way our technologies are influencing our thinking. Our attention spans are shrinking, and our ability to think critically is deteriorating along with our creativity.

I am personally not against AI. I sometimes use open source models, and I believe that there is a place for it if done correctly and responsibly. But we are not regulating it even remotely adequately. Instead, we're hastily shoving it into every classroom, refrigerator, toaster, and pair of socks in the name of making it all smart, as we ourselves grow ever dumber and less sane in response. Anyone else here worried that we might end up digitally lobotomizing our kids?

Thank you for kicking this hornet's nest. There is a lot of great info and enthusiasm here, all of which is sorely needed.

We have massive and widespread attention paid to every cause under the sun by social and traditional media, with movements and protests (deservedly) filling the streets. Yet this issue, which is as central and crucial to our freedoms as any rights currently being fought for (it intersects with each of them directly), continues to be sidelined and given the tinfoil hat treatment.

We can't adequately talk about things like disinformation, political extremism, or even mental health without addressing the role our technologies play. Those technologies have been hijacked by bad actors, robber barons selling us ease and convenience and promises of bright, shiny, utopian futures while conning us out of our liberty.

With society in widespread, rapid decline and technologies like AI rising and spreading dramatically, there has never been a more urgent need to act collectively against the invasive practices claiming every corner of our lives.

We need those of you who recognize this crisis for what it is. We need your voices in the discussions surrounding the many problems and challenges we face at this critical moment. We need public awareness if we are to have any hope of changing this situation for the better.

As many of you have pointed out, the most immediate step we need to take is disengagement from the products and services that are surveilling, exploiting, and manipulating us. Look to alternatives, ask around, and don't be afraid to try something new. Deprive them of both your engagement and your data.

Keep going, keep resisting, do the small things you can do. As the saying goes, small things add up over time. Keep going.

[Edited slightly for clarity]