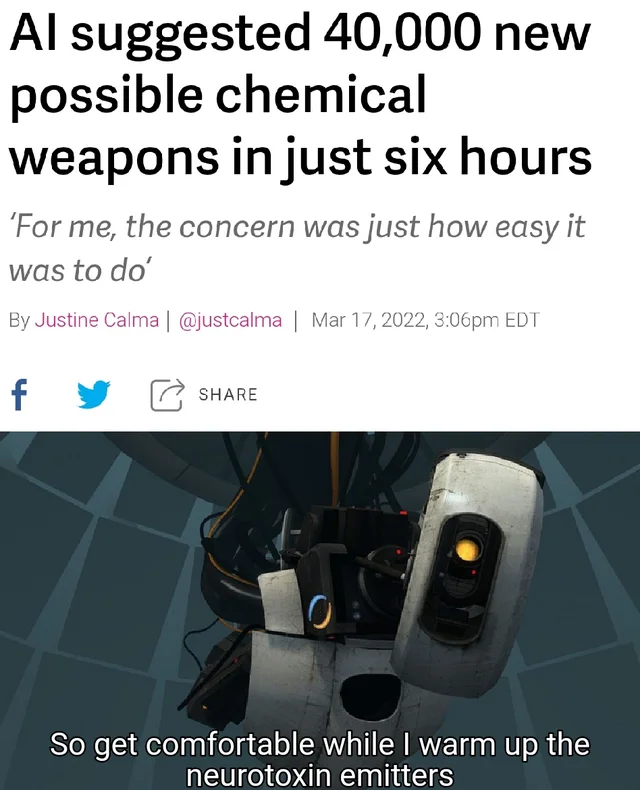

News stories like that are nice and all, but this is the current state of AI:

The news story only talked about how many neurotoxins it suggested, not how many of them are actually neurotoxins.

It probably printed 40k random chemical formulae.