thinking about how often code will be like

[-1] * len(array) # -1 is a place holder because I don't know the vocabulary term "sentinel value"

and justified because "it just needs to work"

and now we have professional vibe coders
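
For anyone wondering why the placeholder pattern above is more than a vocabulary gap, here's a minimal sketch. It assumes the `-1` entries later get used as indices; the post doesn't say that, so `array`, `slots`, and `lookup` are all made up for illustration.

```python
array = [10, 20, 30]

# The pattern from the post: -1 stands in for "not filled in yet".
slots = [-1] * len(array)

# Hazard: -1 is also a valid Python index, so an unfilled slot quietly
# reads the last element instead of failing.
silently_wrong = array[slots[0]]

# One alternative (an assumption, not the only option): use None as the
# sentinel, since it can never be mistaken for a real index.
safe_slots = [None] * len(array)


def lookup(values, slot):
    """Return values[slot], refusing slots that were never filled in."""
    if slot is None:
        raise LookupError("slot was never filled in")
    return values[slot]
```

With `None`, the "it just needs to work" version at least fails loudly when it doesn't.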

The context for this essay is serious, high-stakes communication: papers, technical blog posts, and tweet threads.

More recently, in the comments:

After reading your comments and @Jiro 's below, and discussing with LLMs on various settings, I think I was too strong in saying....

It's like watching people volunteer for a lobotomy.

Ian Lance Taylor (of GOLD, Go, and other tech fame) had a take on chatbots being AGI that I liked to see from an influential person of computing. https://www.airs.com/blog/archives/673

The summary is that chatbots are not AGI, that the current AI wave is not what will usher in AGI, and that he dislikes, in a very polite way, chatbot LLMs being seen as AI.

Apologies if this was posted when published.

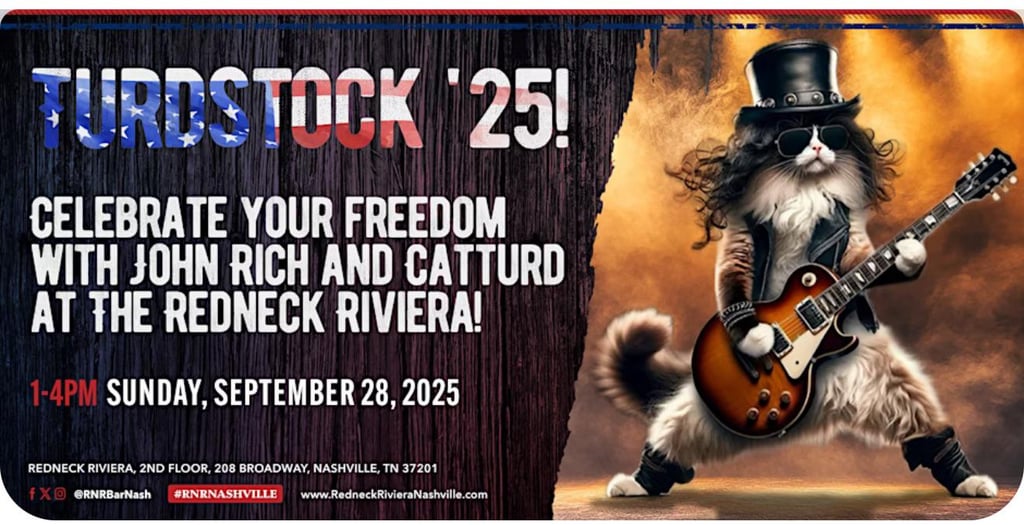

Democratizing graphic design, Nashville style

Description: genAI artifact depicting guitarist Slash as a cat. This cursed critter is advertising a public appearance by a twitter poster. The event is titled "TURDSTOCK 2025". Also, the cat doesn't appear to be polydactyl, which seems like a missed opportunity tbh.

Turdstock? Wow, the name immediately says this is a festival worth attending! The picture only strengthens the feeling.

Intentionally being on Broadway at 1-4 PM on a Sunday is a whole vibe, and that's before considering whatever the fuck this is.

Found an unironic AI bro in the wild on Bluesky:

You want my unsolicited thoughts on the line between man and machine, I feel this bubble has done more to clarify that line than to blur it, both by showcasing the flaws and limitations inherent to artificial intelligence, and by highlighting the aspects of human minds which cannot be replicated.

I have been thinking about the true cost of running LLMs (of course, Ed Zitron and others have written about this a lot).

We take it for granted that large parts of the internet are available for free. Sure, a lot of it is plastered with ads, and paywalls are becoming increasingly common, but thanks to economies of scale (and a level of intrinsic motivation/altruism/idealism/vanity), it still used to be viable to provide information online without charging users for every bit of it. Same appears to be true for the tools to discover said information (search engines).

Compare this to the estimated true cost of running AI chatbots, which (according to the numbers I'm familiar with) may be tens or even hundreds of dollars a month for each user. For this price, users would get unreliable slop, and this slop could only be produced from the (mostly free) information that is already available online while disincentivizing creators from producing more of it (because search engine driven traffic is dying down).

I think the math is really abysmal here, and it may take some time to realize how bad it really is. We are used to big numbers from tech companies, but we rarely break them down to individual users.

Somehow reminds me of the astronomical cost of each bitcoin transaction (especially compared to the tiny cost of processing a single payment through established payment systems).

I've done some of the numbers here, but don't stand by them enough to share. I do estimate that products like Cursor or Claude are being sold at roughly an 80-90% discount compared to what's sustainable, which is roughly in line with what Zitron has been saying, but it's not precise enough for serious predictions.
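
To make the discount arithmetic concrete, here's a back-of-the-envelope sketch. The $20/month sticker price is a hypothetical input picked for illustration, not a figure from this thread; the only thing taken from the comment above is the rough 80-90% range.

```python
def sustainable_price(sticker_price: float, discount: float) -> float:
    """Price that would be charged if `discount` (0..1) were removed.

    If the sticker price is (1 - discount) of the sustainable price,
    then sustainable = sticker / (1 - discount).
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return sticker_price / (1.0 - discount)


# Hypothetical sticker price, for illustration only.
STICKER = 20.0

low_estimate = sustainable_price(STICKER, 0.80)   # roughly 5x the sticker price
high_estimate = sustainable_price(STICKER, 0.90)  # roughly 10x the sticker price
```

Under those assumptions, an 80-90% discount means the sustainable price is about five to ten times the sticker price, which is how a cheap-looking subscription ends up in "hundreds of dollars a month" territory.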

Your last paragraph makes me think. We often idealize blockchains with VMs, e.g. Ethereum, as a global distributed computer, if the computer were an old Raspberry Pi. But it is Byzantine distributed; the (IMO excessive) cost goes towards establishing a useful property. If I pick another old computer with a useful property, like a radiation-hardened chipset comparable to a Gamecube or G3 Mac, then we have a spectrum of computers to think about. One end of the spectrum is fast, one end is cheap, one end is Byzantine, one end is rad-hardened, etc. Even GPUs are part of this; they're not that fast, but can act in parallel over very wide data. In remarkably stark contrast, the cost of Transformers on GPUs doesn't actually go towards any useful property! Anything Transformers can do, a cheaper more specialized algorithm could have also done.

Daniel Koko's trying to figure out how to stop the AGI apocalypse.

How might this work? Install TTRPG aficionados at the chip fabs and tell them to roll a saving throw.

Similarly, at the chip production facilities, a committee of representatives stands at the end of the production line basically and rolls a ten-sided die for each chip; chips that don't roll a 1 are destroyed on the spot.

And if that doesn't work? Koko ultimately ends up pretty much where Big Yud did: bombing the fuck out of the fabs and the data centers.

"For example, if a country turns out to have a hidden datacenter somewhere, the datacenter gets hit by ballistic missiles and the country gets heavy sanctions and demands to allow inspectors to pore over other suspicious locations, which if refused will lead to more missile strikes."

Suppose further that enough powerful people are concerned about the poverty in Ireland, anti-catholic discrimination, food insecurity, and/or loss of rental revenue, that there's significant political will to Do Something. Should we ban starvation? Should we decolonise? Should we export their produce harder to finally starve Ireland? Should we sign some kind of treaty? Should we have a national megaproject to replace the population with the British? Many of these options are seriously considered.

Enter the baseline option: Let the Irish sell their babies as a delicacy.

Similarly, at the chip production facilities, a committee of representatives stands at the end of the production line basically and rolls a ten-sided die for each chip; chips that don’t roll a 1 are destroyed on the spot.

Ah, yes, artificially kneecap chip fabs' yields, I'm sure that will go over well with the capitalist overlords who own them

Someone didn't get the memo about nVidia's stock price, and how is Jensen supposed to sign more boobs if suddenly his customers all get missile'd?

Copy/pasting a post I made in the DSP driver subreddit that I might expand over at morewrite because it's a case study in how machine learning algorithms can create massive problems even when they actually work pretty well.

It's a machine learning system, not an actual human boss. The system is set up to try and find the breaking point, where if you finish your route on time it assumes you can handle a little bit more and if you don't it backs off.

The real problem is that everything else in the organization is set up so that finishing your routes on time is a minimum standard while the algorithm that creates the routes is designed to make doing so just barely possible. Because it's not fully individualized, this means that doing things like skipping breaks and waiving your lunch (which the system doesn't appear to recognize as options) effectively pushes the edge of what the system thinks is possible out a full extra hour, and then the rest of the organization (including the decision-makers about who gets to keep their job) turns that edge into the standard. And that's how you end up where we are now, where actually taking your legally-protected breaks is at best a luxury for top performers or people who get an easy route for the day, rather than a fundamental part of keeping everyone doing the job sane and healthy.
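
The ratchet described above can be sketched as a toy simulation. To be clear, this is not Amazon's actual routing system: the update rule, the step size, and the hour figures are all assumptions chosen to illustrate the "push until it breaks, then back off" behavior.

```python
def next_target(target: float, finished_on_time: bool, step: float = 0.25) -> float:
    """Hypothetical update rule: add work after a success, back off after a miss."""
    if finished_on_time:
        return target + step
    return max(0.0, target - step)


def simulate(capacity_hours: float, days: int,
             start: float = 8.0, step: float = 0.25) -> float:
    """Return the route size (in hours of work) the system settles near.

    `capacity_hours` is how much work the driver can actually fit in a shift.
    The system only sees whether the route was finished, not how.
    """
    target = start
    for _ in range(days):
        finished = target <= capacity_hours
        target = next_target(target, finished, step)
    return target


# Assumed numbers: a 10-hour shift with an hour of breaks and lunch leaves
# 9 working hours; skipping them leaves the full 10.
with_breaks = simulate(capacity_hours=9.0, days=30)
without_breaks = simulate(capacity_hours=10.0, days=30)
```

In this toy version the target calibrated against the break-skipping driver ends up about an hour higher, and since the routes aren't fully individualized, that higher target is the one everybody else gets measured against.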

Part of that organizational problem is also in the DSP setup itself, since it allows Amazon to avoid taking responsibility or accountability for those decisions. All they have to do is make sure their instructions to the DSP don't explicitly call for anything illegal and they get to deflect all criticism (or LNI inquiries) away from themselves and towards the individual DSP, and if anyone becomes too much of a problem they can pretend to address it by cutting that DSP.

Better Offline was rough this morning in some places. Props to Ed for keeping his cool with the guests.

Oof, that Hollywood guest (Brian Koppelman) is a dunderhead. "These AI layoffs actually make sense because of complexity theory". "You gotta take Eliezer Yudkowsky seriously. He predicted everything perfectly."

I looked up his background, and it turns out he's the guy behind the TV show "Billions". That immediately made him make sense to me. The show attempts to lionize billionaires and is ultimately undermined not just by its offensive premise but by the world's most block-headed and cringe-inducing dialog.

Terrible choice of guest, Ed.