this post was submitted on 26 Jan 2026


Community rules

1. Be civil

No trolling, bigotry or other insulting / annoying behaviour

2. No politics

This is a non-politics community. For political memes, please go to !politicalmemes@lemmy.world

3. No recent reposts

Check for reposts when posting a meme; you can only repost after 1 month

4. No bots

No bots without the express approval of the mods or the admins

5. No Spam/Ads/AI Slop

No advertisements or spam. This is an instance rule and the only way to live. We also consider AI slop to be spam in this community, and it is subject to removal.

A collection of some classic Lemmy memes for your enjoyment

Sister communities

- !tenforward@lemmy.world : Star Trek memes, chat and shitposts

- !lemmyshitpost@lemmy.world : Lemmy Shitposts, anything and everything goes.

- !linuxmemes@lemmy.world : Linux themed memes

- !comicstrips@lemmy.world : for those who love comic stories.

founded 2 years ago


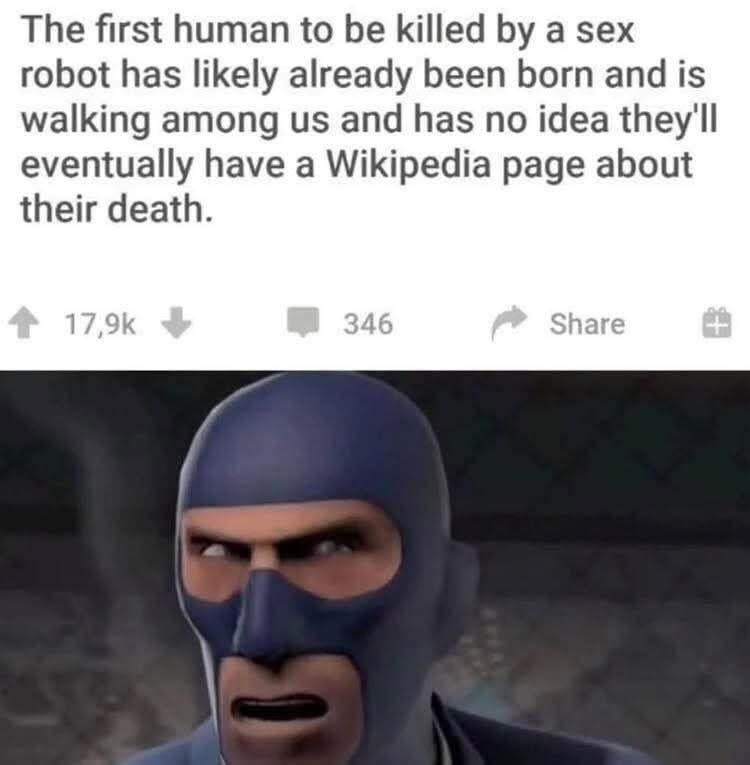

Interesting but not a robot.

Not only that, but the operative word is "killed". Wongbandue had dementia and went to the train station, and it's profoundly disgusting that Facebook has a product that would deceive a senile old man into going to New York to meet someone who doesn't exist, but he died after falling while jogging. Clearly not at all what's being implied by the OP and really stretching the proximate cause for being "killed".

I'm frustrated by how Meta deals with its bots. They put them next to real people on Messenger with the same profile picture and green dot format (it would be trivial to make the dot blue so we can differentiate between people and bots), but they also randomly add them without your consent. I find myself wondering who this person is, not remembering them being on my friends list, only to find out it's a bot.

Obviously, anyone that's even mildly deficient is going to think it's a real person.

Yeah, a much better and earlier example is the 14-year-old who was told to kill himself by his AI girlfriend.

A legit sexbot murder involves a robot squeezing the life out of its victim with its cold, robot hands. I will accept nothing less.

What about sensor errors resulting in deadly amounts of penetration?

Is a chatbot a sexbot? In that case, multiple deaths can be traced to chatbots hallucinating or going off the deep end.