this post was submitted on 25 Jun 2024

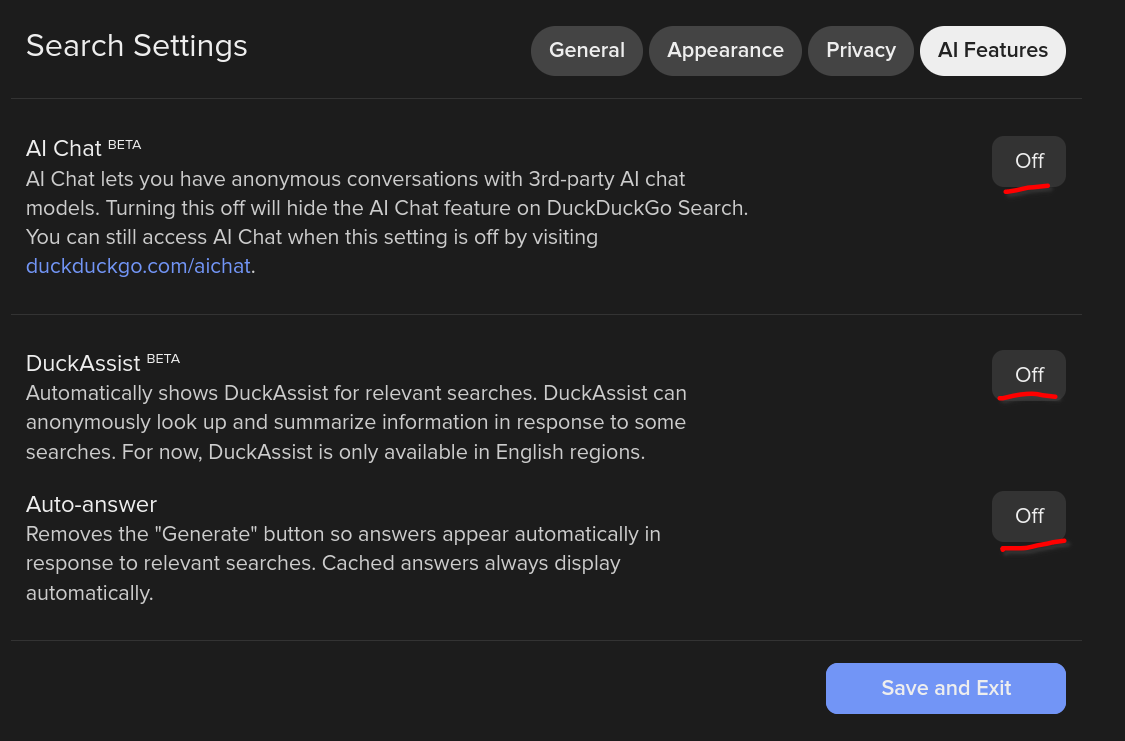

The AI chat is helpful though

please demonstrate a case where this has been useful to you

I asked it the difference between soy sauce and tamari and it told me.

oooh tamari

so like, could you have answered that question without spinning up a 200W GPU somewhere to do the LLM "inference"?

literally just google "wikipedia tamari"

and essentially all it's doing is plagiarizing a dozen other answers from various websites.

tamarind the fruit or tamarin the genus?

and what part of that required an AI?

I never said it did. It was just faster than searching through multiple search results and reading through multiple paragraphs.

How did you know the answer was correct?

How do you know any information is correct?

Do you really not see why I asked my rhetorical question or do you just want to bicker?

I wasn't bickering. You're the one trying to argue. It sounds like you're implying that information from AI is inherently incorrect which simply isn't true.

First, at the risk of being a pedant, bickering and arguing are distinct activities. Second, I didn't imply LLMs' results are inherently incorrect. However, it is undeniable that they sometimes make shit up. Thus, without corroboration from a more trustworthy source, an LLM's outputs can't be trusted.