this post was submitted on 22 Sep 2025



Ding ding ding.

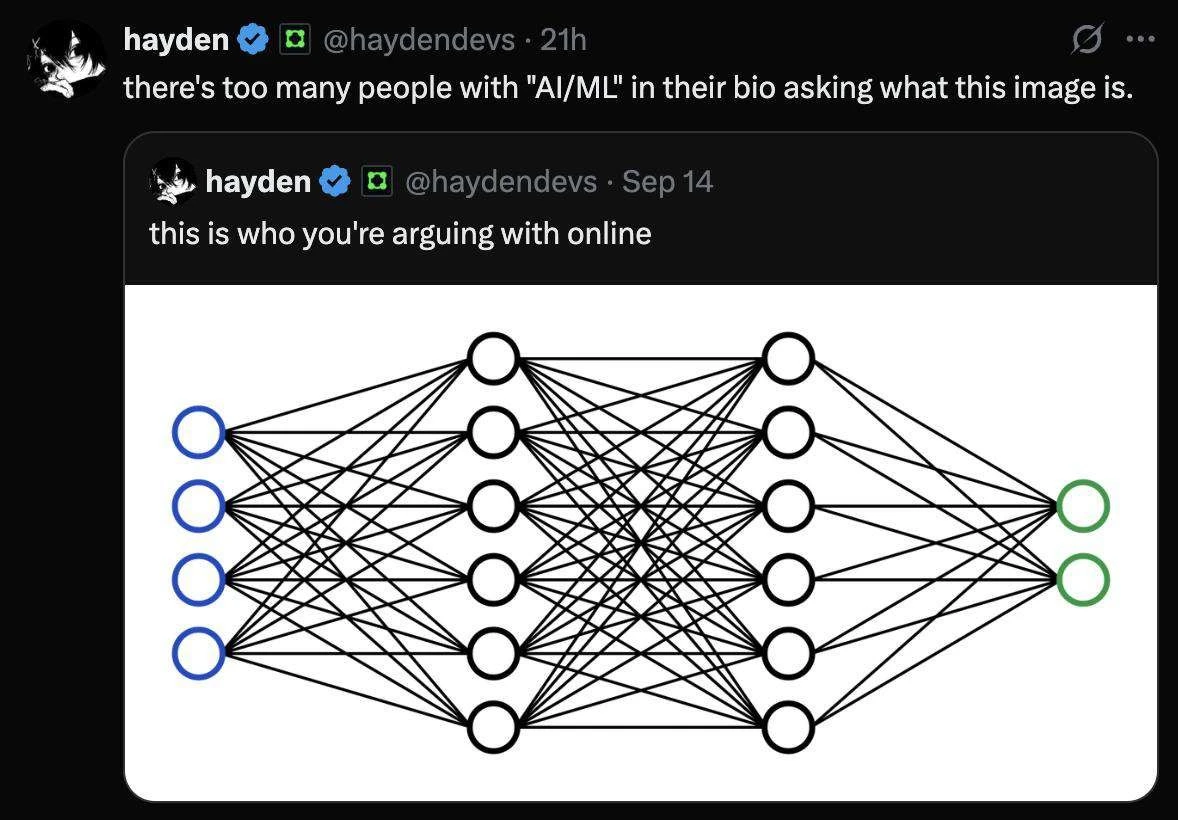

It all became basically magic, blind trial and error a bit over ten years ago, with AlexNet (2012).

After AlexNet, everything became more and more of an opaque black box, even to the actual PhD-level people crafting and testing these things.

Since then, it has basically been 'throw all existing information of any kind at the model' to train it better, and then a bunch of basically slapdash optimization attempts which work for largely 'I don't know' reasons.

Meanwhile, we could be pouring even 1% of the money going toward LLMs and convolutional-network-derived models... into other paradigms, such as maybe trying to actually emulate real brains and real neuronal networks... but nope, everyone is piling into basically one approach.

That's not to say research on other paradigms is nonexistent, but it is barely existent in comparison.

I'll give you the point regarding LLMs.. but conventional neural networks? Nah. They've been used for a reason, and have generally been very successful where other methods have failed. And there very much are investments into stuff with real brains or analog brain-like structures.. it's just that it's far more difficult, especially as we have very little idea of how real brains work.

A big issue regarding digitally emulating real brain structures is that it's very computationally expensive. Real brains work using chemistry, after all. Not something that's easy to simulate. There is research in this area, but from what I know it is mostly aimed at understanding brains better, not at any practical purpose. But also, this won't solve the black box problem.

Neural networks are great at what they do, being a sort of universal statistics optimization process (to a degree, no free lunch etc.). They solved problems that nothing before them could solve, and that are now considered mundane. Like, 15 years ago, would anyone really have thought it possible for your phone to detect what it was you took a picture of? That was considered to be practically impossible. Take this xkcd from a decade ago, for example: https://xkcd.com/1425/

In addition, there are avenues being explored such as "Explainable AI" and so on. The field is more varied and interesting than most people realize. And, yes, genuinely useful. And not every neural network is a massive, large-scale one; many are small-scale and specialized.

I take your critiques in stride. Yes, you are more correct than I am; I was a bit sloppy.

Corrections appreciated =D

Hopefully I don't appear as too much of a know-it-all 😭 I often end up rambling too much lmao

It's just always fun to talk about one's field ^^ or stuff adjacent to it

Oh no no no, being an actual subject matter expert, or at least having more precise and detailed knowledge and/or explanations, is always welcome imo.

You're talking to an(other?) autist who loves data dumping walls of text about things they actually know something about, lol.

Really, I appreciate constructive critiques or corrections.

How else would one learn things?

Keep oneself in check?

Today you have helped me verify that at least some amount of metacognition is still working inside of this particular blob of wetware, hahaha!

EDIT:

One motto I actually do try to live by, from the Matrix:

Temet Nosce.

Know Thyself.

... and a large part of that is knowing 'that I know nothing'.

Way back in the 90s, when neural networks were first being put to practical use in things like postal code recognition for automated mail sorting, it was already the case that the experts did not know why they worked, including why certain topologies worked better than others at certain things, and we're talking about networks with fewer than a thousand neurons.

No wonder that "add shit and see what happens" is still the way the area "advances".
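For a sense of scale, here's a rough sketch of how few neurons, and how many tunable numbers, a small network like that involves. It assumes a plain fully connected feed-forward network, and the layer sizes (and the `count_network` helper itself) are made up for illustration, not taken from any real mail-sorting system.

```python
def count_network(layer_sizes):
    """Return (neurons, parameters) for a fully connected feed-forward net.

    layer_sizes[0] is the number of inputs (not counted as neurons);
    every later entry is a layer of neurons, each with one bias.
    """
    if len(layer_sizes) < 2:
        raise ValueError("need an input size and at least one layer")
    neurons = sum(layer_sizes[1:])
    # Each layer is fully connected to the one before it.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = neurons  # one bias per neuron
    return neurons, weights + biases


# Hypothetical digit recogniser: 16x16 pixel input, 30 hidden neurons, 10 outputs.
neurons, params = count_network([16 * 16, 30, 10])
print(neurons, params)  # 40 8020
```

So even a toy layout with 40 neurons has about 8,000 knobs to tune, which may be part of why it was hard to say what any individual one was doing.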