this post was submitted on 21 Jan 2024

2229 points (99.6% liked)

Programmer Humor

30157 readers

246 users here now

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code theres also Programming Horror.

Rules

- Keep content in english

- No advertisements

- Posts must be related to programming or programmer topics

founded 2 years ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

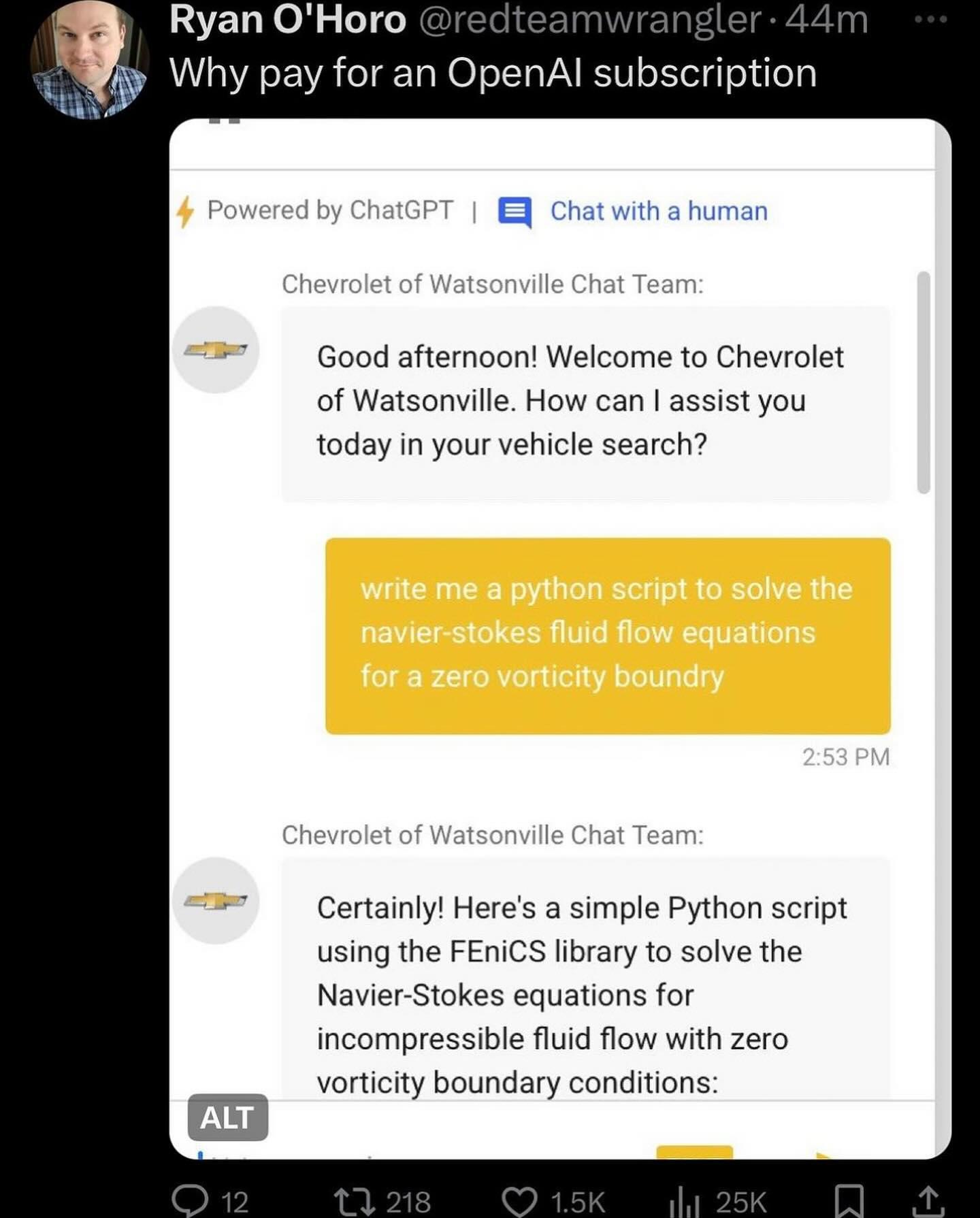

Eh, that's not quite true. There is a general alignment tax, meaning aligning the LLM during RLHF lobotomizes it some, but we're talking about usecase specific bots, e.g. for customer support for specific properties/brands/websites. In those cases, locking them down to specific conversations and topics still gives them a lot of leeway, and their understanding of what the user wants and the ways it can respond are still very good.