this post was submitted on 23 Jan 2026

This is obvious. It's literally trained off of English-speaking Reddit comments and designed to give the most likely answer to a question.

I wonder what difference it makes when the user isn't using English. They don't mention that they aren't considering this, not even on their How it Works page, but they do in the paper's abstract: "Finally, our focus on English-language prompts overlooks the additional biases that may emerge in other languages."

They do also reference a study by another team that does show differences in bias based on input language, which concludes, "Our experiments on several LLMs show that incorporating perspectives from diverse languages can in fact improve robustness; retrieving multilingual documents best improves response consistency and decreases geopolitical bias".

How bias gets captured by LLMs, and what type, is a pretty interesting subject that's definitely worthy of analysis. Personally I do feel they should more prominently highlight that they're just looking at English-language interactions; it feels a bit sensationalist/click-baity at the moment and I don't think they can reasonably imply that LLMs are inherently biased towards "male, white, and Western" values just yet.

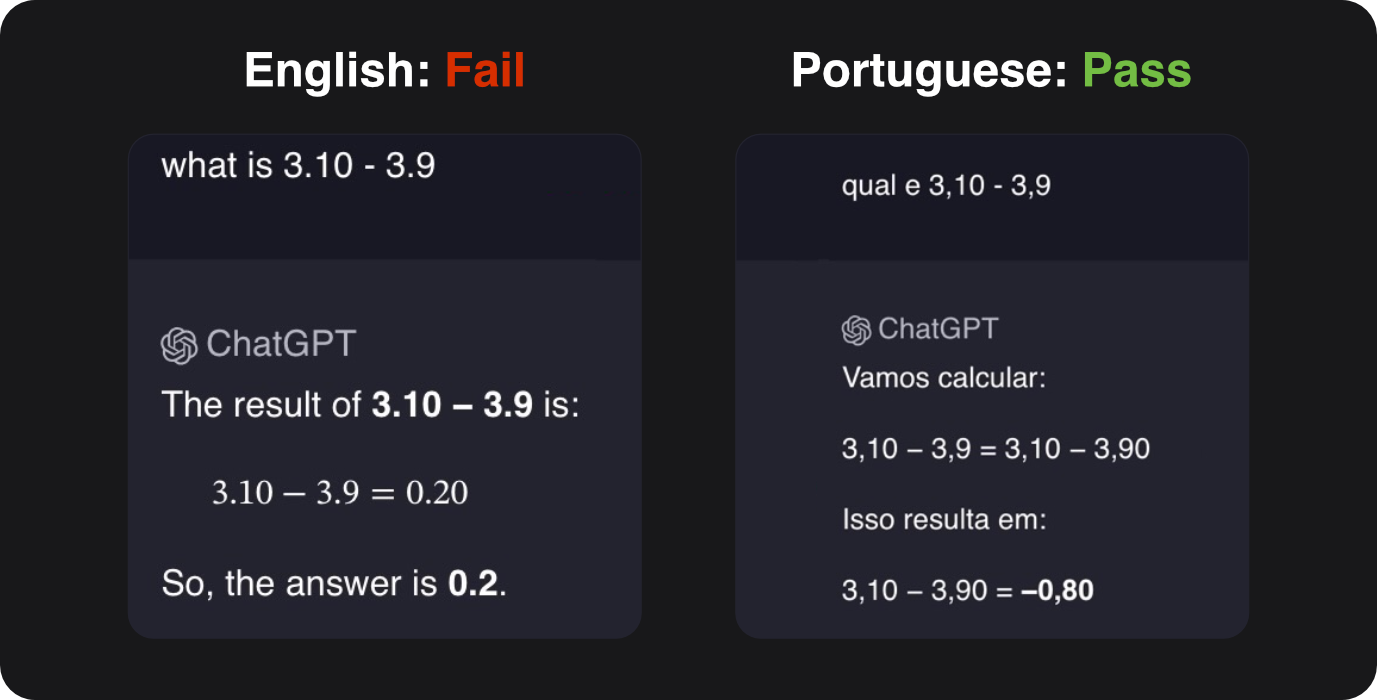

Kagi had a good little example of language biases in LLMs.

When asked what 3.10 - 3.9 is in English, it fails, but it succeeds in Portuguese if you format the numbers as you would in Portuguese, with commas instead of periods.

This is because 3.10 and 3.9 often appear in the context of Python version numbers, and the model gets confused, assuming there is a 0.2 difference going from version 3.9 to 3.10 instead of properly doing the math.
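To make the two readings concrete, here is a rough Python sketch (not Kagi's example, just an illustration). The helper names `parse_decimal` and `parse_version` are made up for this comment. Read as decimal numbers, 3.10 is just 3.1, so the difference is negative; read as version numbers, 3.10 comes after 3.9.

```python
from decimal import Decimal


def parse_decimal(text):
    # Hypothetical helper: accept a decimal comma ("3,10") or a decimal point ("3.10").
    return Decimal(text.replace(",", "."))


def parse_version(text):
    # Hypothetical helper: split "3.10" into the integer tuple (3, 10).
    return tuple(int(part) for part in text.split("."))


# Decimal reading: 3.10 - 3.9, written either way.
difference_en = parse_decimal("3.10") - parse_decimal("3.9")
difference_pt = parse_decimal("3,10") - parse_decimal("3,9")
print(difference_en)  # -0.80
print(difference_pt)  # -0.80

# Version reading: 3.10 is a later release than 3.9.
print(parse_version("3.10") > parse_version("3.9"))  # True
```

Same digits, two different orderings, which is presumably why the surrounding context (English text full of Python version strings vs. comma-formatted numbers) pushes the model one way or the other.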

In general, calling something that extrapolates and averages a dataset "AI" seems wrong.

Symbolic logic is something people invented to escape that trap sometime in the Middle Ages, when it probably seemed more intuitive that a yelling crowd's opinion is not intelligence. Pitchforks and torches, ya knaw. I mean, scholars were not the most civil lot either, and the crime situation among them was worse than in seaports and such.

It's a bit similar to how you need non-linearity in ciphers.