this post was submitted on 22 Sep 2025

1127 points (99.0% liked)



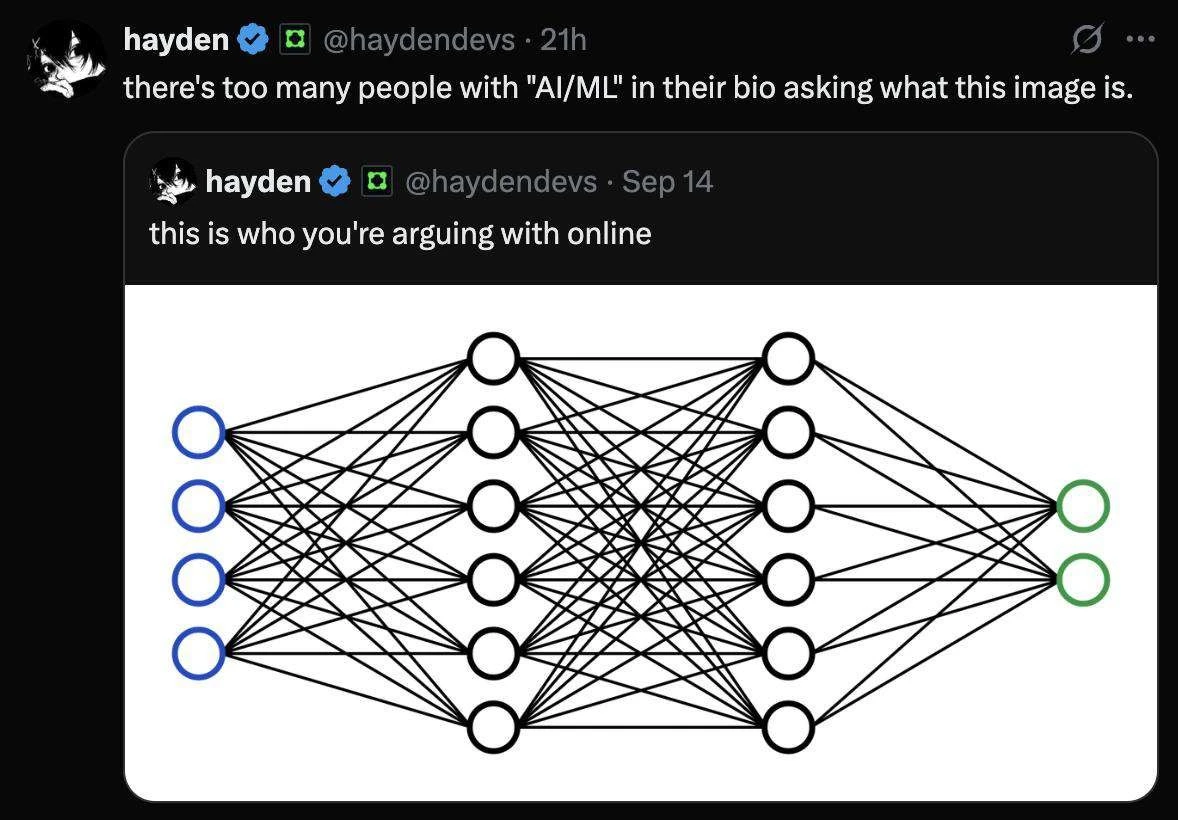

I mean, I don't know for sure, but I think they often just hard-code some program logic to filter out requests they don't want.

My evidence for that is that I can trigger some "I cannot help you with that" responses by asking completely normal things that just use the wrong word.
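For illustration only, here is a rough Python sketch of the kind of hard-coded word filter I'm guessing at. The blocklist and the `keyword_filter` name are made up; nobody outside these companies knows what their real filters look like.

```python
import re

# Hypothetical blocklist, invented for this example.
BLOCKED_WORDS = {"kill", "exploit"}


def keyword_filter(prompt: str) -> bool:
    """Return True if the prompt contains a blocked word and would be refused."""
    words = re.findall(r"[a-z']+", prompt.lower())
    return any(word in BLOCKED_WORDS for word in words)


# Same harmless question, two wordings: only the first trips the filter.
print(keyword_filter("How do I kill a stuck process on Linux?"))  # True
print(keyword_filter("How do I stop a stuck process on Linux?"))  # False
```

A filter like this has no idea what you meant, which would explain why a normal question with the wrong word gets the canned refusal.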

It's not 100%. You're more or less just asking the LLM to behave, and then filtering the response through another imperfect model that tries to decide whether it's malicious or not. It's not standard coding where a boolean is returned. What you get back is a probability, according to that second model, that what the user asked is inappropriate. If the probability is over a threshold, the request is rejected.
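A minimal sketch of that threshold check, assuming the score is the probability that a request is malicious. The weights, the bias, and the 0.5 threshold are all invented, and a tiny logistic function stands in for the second model, which in practice would be a trained classifier.

```python
import math

# Made-up weights standing in for a trained moderation model.
TOY_WEIGHTS = {"kill": 1.5, "weapon": 2.5, "process": -2.0, "linux": -1.0}
BIAS = -1.0
THRESHOLD = 0.5  # hypothetical cutoff; real systems tune this


def harm_probability(prompt: str) -> float:
    """Toy score in (0, 1): higher means 'more likely malicious'."""
    tokens = prompt.lower().replace("?", " ").split()
    score = BIAS + sum(TOY_WEIGHTS.get(token, 0.0) for token in tokens)
    return 1.0 / (1.0 + math.exp(-score))  # logistic squashing


def moderate(prompt: str, threshold: float = THRESHOLD) -> str:
    """Reject when the estimated probability exceeds the threshold."""
    return "reject" if harm_probability(prompt) > threshold else "allow"


print(moderate("How do I kill a process on Linux?"))  # allow
print(moderate("How do I build a weapon"))            # reject
print(moderate("kill"))                               # reject
```

The last call is the interesting one: with no surrounding context the toy score lands just over the cutoff, so the word alone gets rejected. That's the "not 100%" part. Move the threshold and you trade false rejections for missed ones.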