All links for this story are shit -- Cloudflare or paywalls. So I linked the archive and will dump the text below. Note the difference between my title and the original. I think mine is more accurate. The AG seems to view feature phones as a tool for criminals. But also says having no phone is suspect as well, so the original title is also correct.

Georgia AG claims not having a phone makes you a criminal

That’s dangerous for constitutional rights

SAMANTHA HAMILTON

FEBRUARY 12, 2024 6:52 PM

The ubiquity of smartphones is causing some to pine for simpler times, when we didn’t have the entire history of humankind’s knowledge at our fingertips on devices that tracked our every move. There’s a growing trend, particularly among young people, to use non-smartphones, or “basic phones.” The reasons range from aesthetic to financial to concern for mental health. But according to Georgia Attorney General Chris Carr, having a basic phone, or a phone with no data on it, or no phone at all in the year 2024, is evidence of criminal intent. The AG’s position poses grave dangers for all Georgians’ constitutional rights.

Last month, Deputy Attorney General John Fowler argued in state court that mere possession of a basic cellphone indicates criminal intent to commit conspiracy under Georgia’s racketeer influenced and corrupt organizations statute, better known as RICO.

His accusation was directed at 19-year-old Ayla King, one of 61 people indicted last summer on RICO charges linked to protests in the South River Forest where the $109 million Atlanta Public Safety Training Center, nicknamed “Cop City” by its opponents, is slated to be built. The RICO charges against King and the 60 other RICO defendants have been widely criticized as a political prosecution running contrary to the First Amendment. King is the first of these defendants to stand trial.

During the Jan. 8 hearing in Fulton County Superior Court, Fowler argued that a cellphone in King’s possession on the day of their arrest, which he characterized as a “burner phone,” should be admissible as evidence of wrongdoing, even though it contained no data. He went even further to suggest that not possessing a cellphone at all also indicates criminal intent. Judge Kimberly Adams agreed to admit evidence of King’s cellphone.

Civil liberty groups are decrying the AG’s argument and court’s action as violations of constitutional rights under the First Amendment and Fourth Amendment. In an open letter to Attorney General Carr, the groups wrote, “It is alarming that prosecutors sworn to uphold the Constitution would even make such arguments—let alone that a sitting judge would seriously entertain them, and allow a phone to be searched and potentially admitted into evidence without any indication that it was used for illegal purposes.”

The Supreme Court recognized in the 2014 case Riley v. California that cellphones carry enough personal information—photos, text messages, calendar entries, internet history, and more—to reconstruct a person’s life using smartphone data alone. “Prior to the digital age, people did not typically carry a cache of sensitive personal information with them as they went about their day,” the Court noted. “Now it is the person who is not carrying a cellphone, with all that it contains, who is the exception.”

On the dark side of smartphones’ interconnectivity is their susceptibility to surveillance. In 2022, it was reported that the U.S. Department of Justice had purchased for testing a version of the Phantom spyware from NSO Group, an Israeli firm which sold its surveillance technology to governments like Mexico and Saudi Arabia to spy on journalists and political dissidents. Phantom could be used to hack into the encrypted data of any smartphone located anywhere in the world, without the hacker ever touching the phone and without the phone’s user ever knowing. The U.S. federal government denied using Phantom in any criminal investigation, but concerns about surveillance in the U.S. have led some folks to obtain basic phones.

Flip phones have made a comeback, and the potential for invasion of privacy is one of the reasons why. I’m not talking about the recent wave of smartphones that flip open. I’m talking about early 2000s-era basic phones, whose smartest feature was the game Snake or, if you were lucky, the ability to set your favorite song as your ringtone.

Folks are returning to basic phones—or in the case of Gen Z, turning for the first time—out of recognition that doom scrolling on a smartphone for hours each day is not good for mental health. For some older adults, basic phones, which offer few features beyond calling and texting, are preferable to smartphones for their simplicity. There are lots of reasons why someone might have a basic phone—not to mention they’re cheaper and more durable than a lot of smartphones.

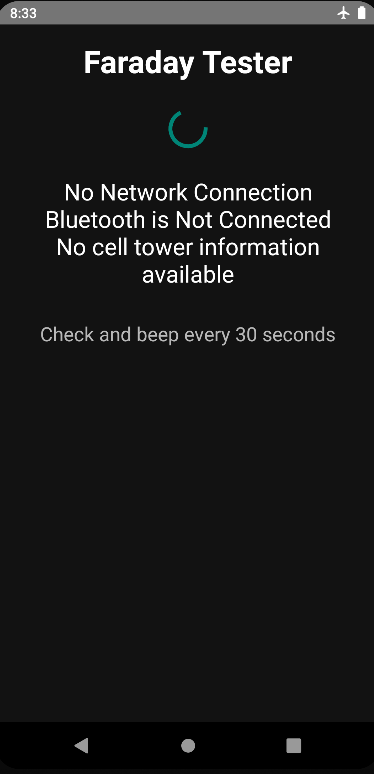

Using simple phones that have little data on them is a legitimate, and common, practice for journalists, whistleblowers, human rights activists, and other people seeking to protect their identities or those of others from surveillance by the government or malicious actors. The Committee to Protect Journalists recommends that journalists cycle through “low-cost burner phones every few months” to maintain their safety and that of their sources. Even athletes competing in the 2022 Beijing Olympics were advised to use burner phones in light of the overreaching state surveillance in China.

Using a burner phone is not evidence of criminal intent—it’s a reasonable response to the threat of surveillance and government overreach. While burner phones are not immune from location tracking via cell towers, the fact that they contain much less data than a smartphone can make them a more secure form of communication.

How deeply invasive of privacy rights will the AG’s logic extend? Will the prosecution argue that using a virtual private network (VPN) is evidence of criminal intent? What about communicating via encrypted messaging apps, like Signal? The First Amendment protects the right to anonymous speech, and the use of privacy protection measures like VPNs and Signal has become commonplace in today’s world. The AG has already asserted in the RICO indictment that anonymous speech communicated online constitutes a conspiracy, but if the AG argues that using VPNs and Signal is evidence of criminal intent, he would be going even further by claiming that the very tools which make people feel safe to communicate online are themselves evidence of criminal intent, thereby assuming criminality before the speech has even taken place.

The position the AG has taken in Ayla King’s case has the potential to make all of us suspects. If you have a smartphone with data on it, the information on the phone can be used as evidence against you. And if you have a phone with no data on it or no phone at all, that can be used as evidence against you.

The state’s use of the absence of evidence as affirmative evidence is an unsettling development, and one that seems desperate. Is it—and perhaps the RICO charges themselves—a sign of prosecutorial weakness in a case intended to silence criticism and criminalize First Amendment expression?

(update) possible awareness campaign action: Would it be worthwhile for people who do not carry a smartphone to write to the Georgia AG to say they don’t carry a smartphone? The idea being to improve the awareness of the AG.

take action