this post was submitted on 21 Oct 2024

526 points (98.2% liked)

Facepalm

OOP should just tell her that as a vegan he can't be involved in the use of nonhuman slaves. Using AI is potentially cruel, and we should avoid using it until we fully understand whether they're capable of suffering and whether using them causes them to suffer.

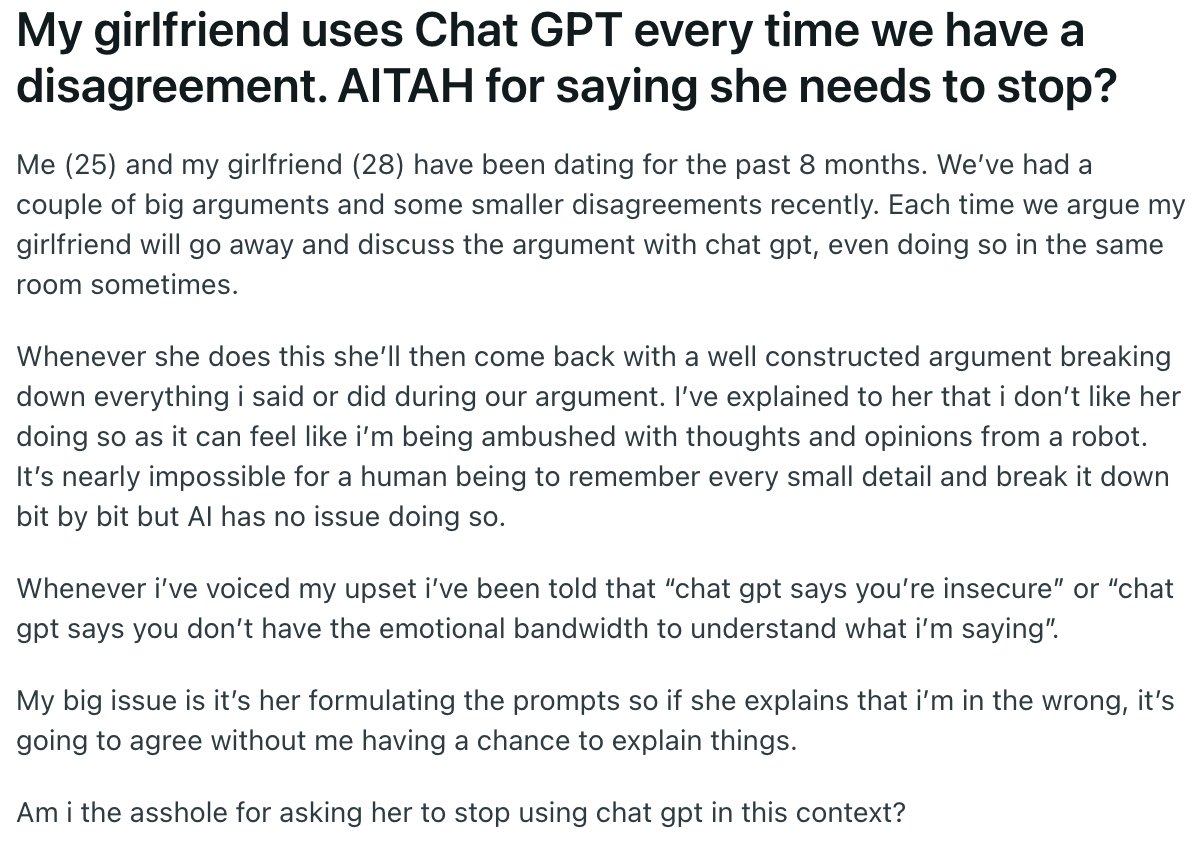

This is a woman who asks chatGPT for relationship advice.

Sentient and capable of suffering are two different things. Ants aren't sentient, but they have a neurological pain response. Drag thinks LLMs are about as smart as ants. Whether they can feel suffering like ants can is an unsolved scientific question that we need to answer BEFORE we go creating entire industries of AI slave labour.

Show drag a scientific paper demonstrating that ants or animals of similar intelligence can't suffer. You're claiming the problem is solved, show the literature.

No, drag didn't refer to dragself in the third person.

I PROMISE everyone ants are smarter than a 2024 LLM. (edit to add:) Claiming they’re not sentient is a big leap.

But I’m glad you recognise they can feel pain!

Cite a study that shows it.

BEEF DOESNT NEED STUDY BEEF HAVE KNOWING and knowing is half the battle GEE EYE JOEEE

https://www.nature.com/articles/s41559-022-01784-1

Big LLMs are far more complex, but they're also made by people who don't have a clue what they're doing, so in the future we'll probably have much smaller ones.