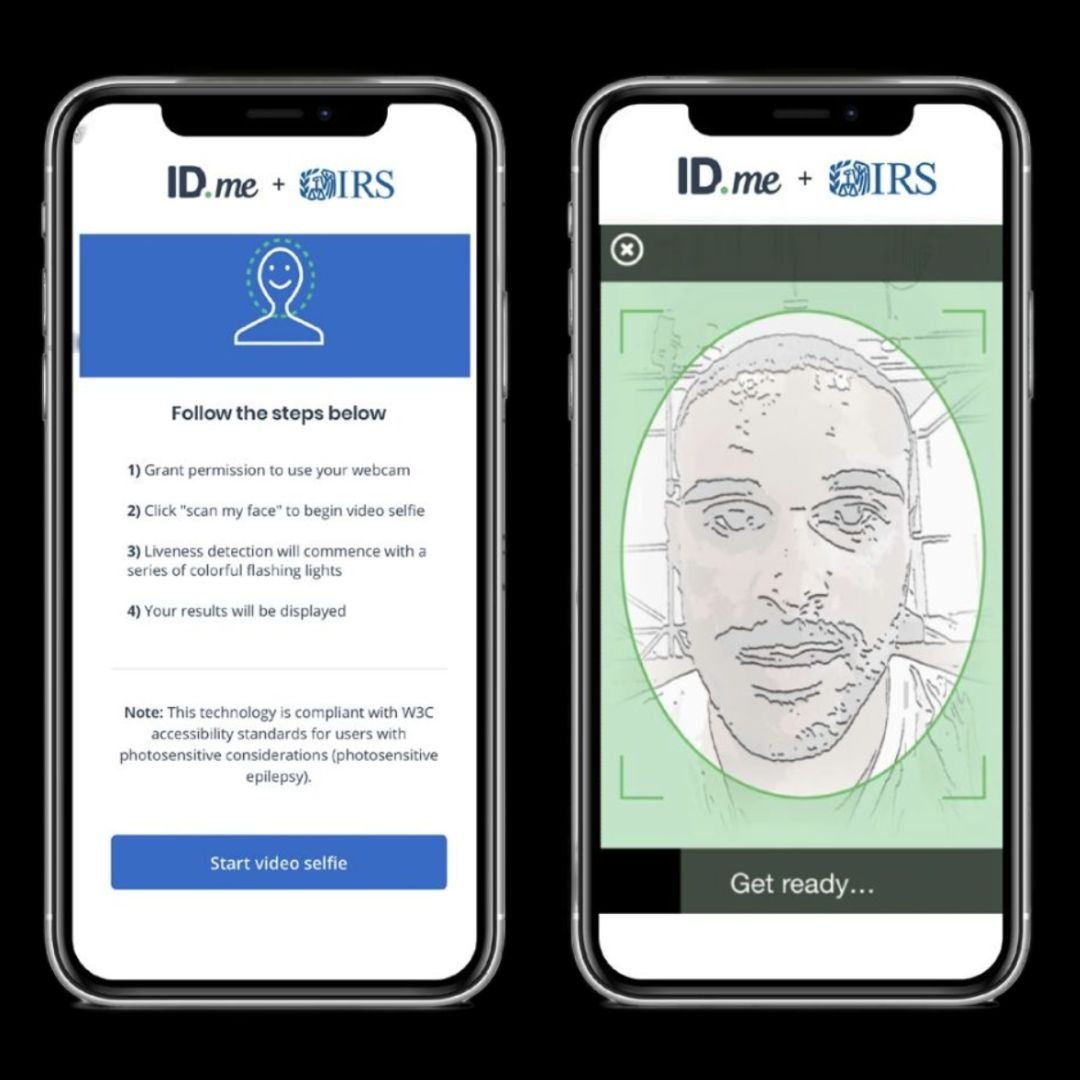

Google is testing facial recognition on one of its campuses, and refusing to take part is not an option for the tech giant's employees.

In other words, opting out is not a feature of the surveillance scheme. The only recourse available to employees is to fill out a form declaring that they do not want their images, whether recorded by security cameras or taken from their company IDs, to be stored.

According to reports, the test is taking place in Kirkland, a suburb of Seattle, where facial recognition technology identifies employees using the images on their ID badges in order to keep unauthorized people off the premises.

Badge photos are being used during the testing phase, but Google representatives have said this will not be the case in the future. The reports quoting them do not clarify what type of ID, or which images, might be used instead.

According to Google and the division behind the project, Global Security and Resilience Services (GSRS), the purpose is to mitigate possible security risks.

Google's "guinea-pigging" of its own employees is seen as part of a wider push by the company to position itself in the expanding field of AI-driven surveillance development and deployment, even though this adds yet another item to its already sizable "portfolio" of privacy controversies.

A Google spokesperson insisted that the Kirkland test, and the eventual rollout of the technology, are purely security-driven. Reports cite one serious incident, the 2018 shooting at YouTube's headquarters in San Bruno, California, as justification for the measures now being put in place.

However, there is already evidence that this type of surveillance is also being used to control and discipline employees.

According to an internal document seen by CNBC, the experiment is "initially" taking place in Kirkland, suggesting that facial recognition will later be deployed on other Google campuses. The officially stated goal is to identify persons who "may pose a security risk to Google's people, products, or locations."

But even before these more sophisticated surveillance trials began, Google used security camera footage to identify a number of employees who protested over labor conditions and over Project Nimbus, a company project that involves Google in the conflict in the Middle East. More than 50 people were fired.