this post was submitted on 17 Mar 2025

1400 points (99.8% liked)

Programmer Humor

Post funny things about programming here! (Or just rant about your favourite programming language.)

Rules:

- Posts must be relevant to programming, programmers, or computer science.

- No NSFW content.

- Jokes must be in good taste. No hate speech, bigotry, etc.

founded 6 years ago

The fact that “AI” hallucinates so extensively and gratuitously just means that the only way it can benefit software development is as a gaggle of coked-up juniors making a senior incapable of working on their own stuff because they’re constantly in janitorial mode.

So no change to how it was before then

Different shit, same smell

Plenty of good programmers use AI extensively while working. Me included.

Mostly as an advanced autocomplete, template builder or documentation parser.

You obviously need to be good at it so you can see at a glance whether the written code is good or bullshit. But if you are good, it can really speed things up without any risk, as you will only copy code that you know is good and discard the bullshit.

Obviously you cannot develop without programming knowledge, but with programming knowledge it's just another tool.

I maintain a strong conviction that if a good programmer uses an LLM in their work, they just add more work for themselves, and if a less-than-good one does it, they add new, exciting and hard-to-find bugs while maintaining false confidence in their code and themselves.

I have seen so much code that looks good at first, second, and third glance but is actually full of shit, and I was able to find that shit through external validation, like talking to the dev or brainstorming ways to test it: things you categorically cannot do with an unreliable random word generator.

That's why you use unit tests and integration tests.

I can write bad code myself or copy bad code from who-knows-where. It's not something introduced by LLMs.

Remember the famous Linus letter? "You code this function without understanding it and thus your code is shit".

As I said, just a tool like many others before it.

I use it regularly while coding. And truthfully, reading my code afterwards I could not distinguish which parts came from the LLM and which parts I wrote fully by myself, and, to be honest, I don't think anyone would be able to tell the difference.

It would probably be a nice idea to do some kind of Turing test: a blind test to identify the AI-written parts of some code, and see how precisely people can tell them apart.

I may come back with a particular piece of code that I specifically remember being output from DeepSeek, and within the whole context it would probably be indistinguishable.

Also, not all LLM usage is copying from it. Many times you copy to it and ask the thing to explain it to you, or ask general questions; for instance, to look for specific functions in C#'s extensive libraries.

Good start, but not even close to being enough. What if the code introduces UB? Unless you specifically look for that, and nobody does, neither unit nor on-target tests will find it. What if it's drastically inefficient? What if there are weird and unusual corner cases?

Now you spend more time looking for all of that and designing tests that you wouldn't have needed if you had proper practices from the beginning.

But that's worse! You do realise how that's worse, right? You lose all the external ways to validate the code, now you have to treat all the code as malicious.

And spend twice as much time trying to understand why you can't find a function that your LLM just invented with the absolute certainty of a fancy autocomplete. And if that's an easy task for you, well, then why do you need this middle layer of randomness? I can't think of a reason not to search the documentation instead of introducing this weird game of "will it lie to me".

Any human-written code can and will introduce UB.

Also, I don't see how it would take you more than 5 seconds to verify that a given function does not exist. It has happened to me, an LLM suggesting a nonexistent function. And searching by function name in the docs is instantaneous.

If you don't want to use it, don't. I have been doing so for more than a year and I haven't run into any of those catastrophic issues. It's just a tool like many others I use for coding. Not even the most important; for instance, I think LSP was a greater improvement to my coding efficiency.

It's like using neovim. Some people would send me a list of all the things that can go wrong when making a Frankenstein IDE out of an ancient text editor. But if it works for me, it works for me.

And there is an enormous number of safeguards, tricks, practices and tools we've come up with to combat it. All of those are categorically unavailable to an autocomplete tool, or to someone who exclusively uses an autocomplete tool to code.

Which means you can work with documentation. Which means you really, really don't need the middle layer, like, at all.

Glad you didn't, but also, I've reviewed enough generated code to know that a lot of the time people think they're OK when in reality they've just introduced an esoteric memory leak in a critical section. People who didn't do it by themselves, but did it because an LLM told them to.

It's not about me. It's about other people introducing shit into our collective lives, making it worse.

You can actually apply those tools and procedures to automatically generated code, exactly the same as to any other piece of code. I don't see the impediment here...

You must be able to understand that searching by name is not the same as searching by definition, nothing more to add here...

Why would you care whether the shit code submitted to you is bad because it was generated with AI, because it was copied from SO, or because it's brand-new shit code written by someone? If it's bad, it's bad. And bad code has existed forever. Once again, I don't see the impact of AI here. If someone is unable to find that a particular generated piece of code has issues, I don't see how they're magically going to be able to see the issue in copy-pasted code or in code they wrote themselves. If they don't notice, they don't, no matter the source.

I will go back to the Turing test. If you don't even know if the bad code was generated, copied or just written by hand, how are you even able to tell that AI is the issue?

The things I am talking about are applied to the development process before you start writing code: rules from NASA's "The Power of 10", MISRA, ISO 26262, DO-178C, and so on, as well as general experience and understanding of data flow or memory management. Stuff like that you fundamentally can't apply to a system that takes random pieces of text from the Internet and strings them together until it looks like something.

There is an enormous gray zone between so-called good code (which might not actually exist) and bad code that doesn't work and has obvious problems from the beginning. That's the most dangerous part: when your code looks like something that can pass your "Turing test", that's where the most insidious problems get introduced. And since you completely removed the planning part and all the written-in-blood rules it introduced, and you eliminated the experience element, you basically have to treat all the code as if it were as malicious as its worst parts, and since that's impossible, you've just dropped your standards to the ground.

It's like pouring sugar into concrete. When there is a lot of it, it's obvious and concrete will never set. When there is just enough of it, it will, but structurally it will be undetectably weaker, and you have no idea when it will crack.

Not every program is written for a spacecraft, and not every program needs the critical level of safety and efficiency of the code for the Apollo program.

I don't even know. If memory issues are your concern, then using a language with built-in memory safety is the way to go, as most things are actually made right now. Unless you are working on legacy applications, most programmers will never actually run into that many memory issues nowadays. Not that most programmers would even properly understand memory. Do you think the typical JavaScript bootcamp rookie can even tell whether something is stored on the stack or the heap?

You are talking as if all human-made code had Linux-kernel levels of quality, and that's not the case, not by far.

And it doesn't need to. Not all computer programs are critically important; people are coding in Lua for PICO-8 in a game jam, so what's the issue with them using AI tools for assistance?

And AI didn't exist until a couple of years ago, yet our critically important programs are everywhere, written by smart humans who make mistakes all the time. I still do not see the anti-AI point here.

Also, programming is not concrete, and AI is not sugar. If you use AI to create a fast tree structure and it works fine, it's not going to poison anything. It will probably be just the same function that the programmer would have written, just faster.

Also, you're not addressing the fact that if AI is bad because it's just copying, then it's the same as the most common programming technique: copying code from Stack Overflow.

I have a genuine question, how many programmers do you think that code in the way you just described?

There is an exception to this, I think. I don't make AI write much, but it is convenient to give it a simple Java class, say "write a toString", and have it spit out something usable.

Depending on what it is you're trying to make, it can actually be helpful as one of many components to help get your feet wet. The same way modding games can be a path to learning a lot by fiddling with something that's complete, getting suggestions from an LLM that's been trained on a bunch of relevant tutorials can give you enough context to get started. It will definitely hallucinate, and figuring out when it's full of shit is part of the exercise.

It's like mid-way between rote following tutorials, modding, and asking for help in support channels. It isn't as rigid as the available tutorials, and though it's prone to hallucination and not as knowledgeable as support channel regulars, it's also a lot more patient in many cases and doesn't have its own life that it needs to go live.

Decent learning tool if you're ready to check what it's doing step by step, look for inefficiencies and mistakes, and not blindly believe everything it says. Just copying and pasting while learning nothing and assuming it'll work, though? That's not going to go well at all.

It'll just keep getting better at it over time, though. Current AI is way better than 5 years ago, and in 5 years it'll be way better than now.

That's certainly one theory, but as we are largely out of training data there's not much new material to feed in for refinement. Using AI output to train future AI is just going to amplify the existing problems.

Just generate the training material, duh.

DeepSeek

This is certainly the pattern that is actively emerging.

I mean, the proof is sitting there wearing your clothes. General intelligence exists all around us. If it can exist naturally, we can eventually do it through technology. Maybe there need to be more breakthroughs before it happens.

"More breakthroughs", spoken like we get these every day like milk delivery.

I mean - have you followed AI news? This whole thing kicked off maybe three years ago, and now local models can render video and do half-decent reasoning.

None of it's perfect, but a lot of it's fuckin' spooky, and any form of "well it can't do [blank]" has a half-life.

Seen a few YouTube channels now that just churn out AI-generated content. Usually audio only, with a generated picture on screen. Vast amounts could be made so cheaply like that. Google is going to have fun storing all that when each only gets like 25 views. I think at some point they are going to have to delete stuff.

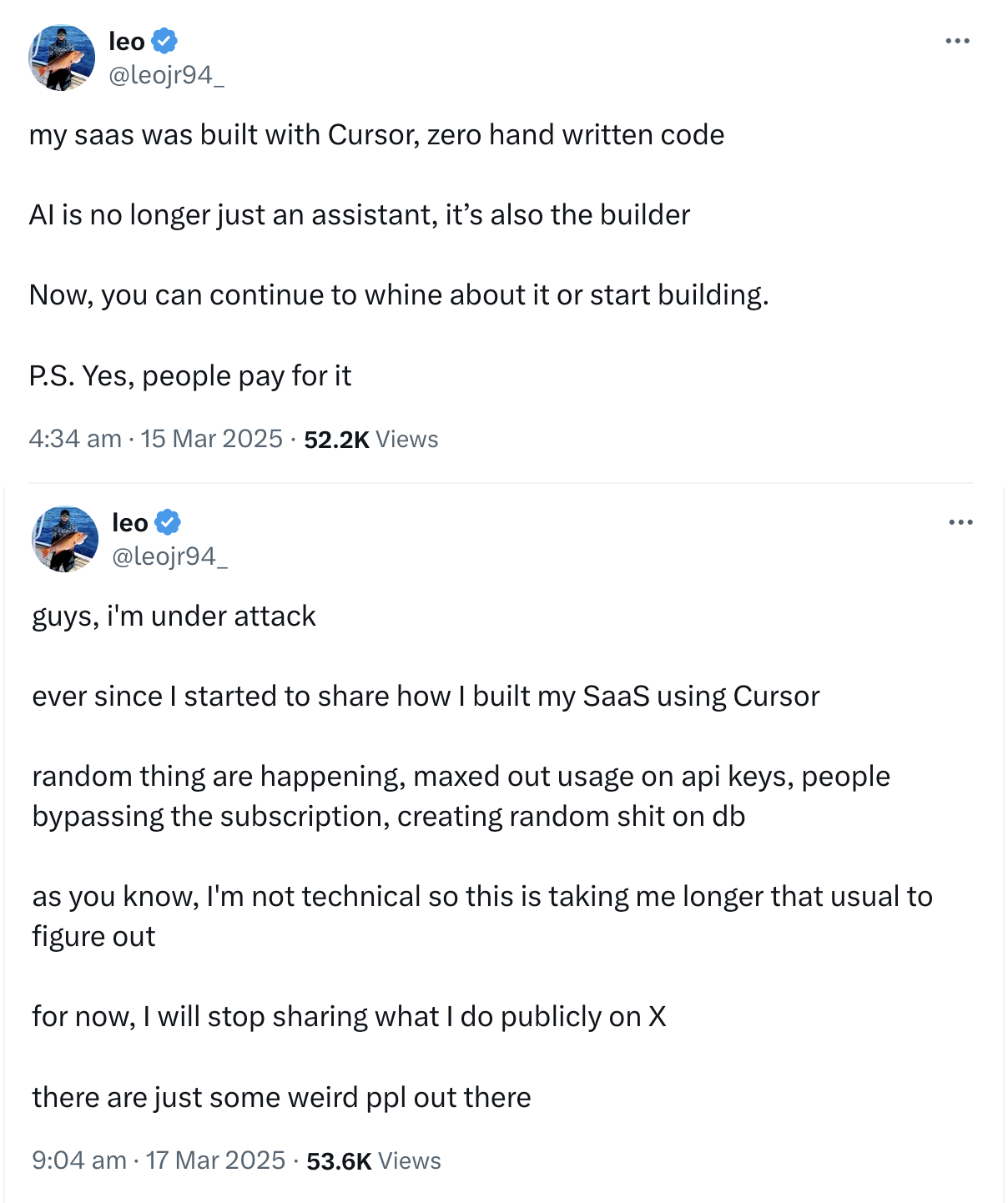

Dipshits going "I made this!" is not indicative of what this makes possible.

I kid you not, I took ML back in 2014 as an extra semester in my undergrad. The complaints then were the same as the complaints now: too much power required, too many false positives. The latter has evolved into hallucinations.

If normal people going "I made this!" is not convincing enough that it is easily identified, then who is this going to replace? You still need the right expert, right? All it creates is more work for experts to come and fix broken AI output.

Despite results improving at an insane rate, very recently. And you think this is proof of a problem with... the results? Not the complaints?

People went "I made this!" with fucking Terragen. A program that renders wild alien landscapes which became generic after about the fifth one you saw. The problem there is not expertise. It's immense quantity for zero effort. None of that proves CGI in general is worthless non-art. It's just shifting what the computer will do for free.

At some point, we will take it for granted that text-to-speech can do an admirable job reading out whatever. It'll be a button you push when you're busy sometimes. The dipshits mass-uploading that for popular articles, over stock footage, will be as relevant as people posting seven thousand alien sunsets.

The results do keep improving, of course. But it's not some silver bullet. Yes, your enthusiasm is warranted, but you peddle it like the second coming of Christ, which I don't like encouraging.

I've done no such thing.

I called it half-decent, spooky, and admirable.

That turns out to be good enough, for a bunch of applications. Even the parts that are just a chatbot fooling people are useful. And massively better than the era you're comparing this to.

We have to deal with this honestly. Neural networks have officially caught on, and anything with examples can be approximated. Anything. The hard part is reminding people what "approximated" means. Being wrong sometimes is normal. Humans are wrong about all kinds of stuff. But for some reason, people think computers bring unflinching perfection - and approach life-or-death scenarios with this sloppy magic.

Personally I'm excited for position tracking with accelerometers. Naively integrating into velocity and location immediately sends you to outer space. Clever filtering almost sorta kinda works. But it's a complex noisy problem, with a minimal output, where approximate answers get partial credit. So long as it's tuned for walking around versus riding a missile, it should Just Work.

Similarly restrained use-cases will do minor witchcraft on a pittance of electricity. It's not like matrix math is hard, for computers. LLMs just try to do as much of it as possible.

If you follow AI news you should know that it’s basically out of training data; that extra training has sharply diminishing returns, so extra training data would only have limited impact anyway; that companies are starting to train AI on AI-generated data, both intentionally and unintentionally; and that hallucinations and unreliability are baked into the technology.

You also shouldn’t take improvements at face value. The latest ChatGPT is better than the previous version, for sure. But its achievements are exaggerated (for example, it already knew the answers ahead of time for the specific maths questions it was touted as answering, and it isn’t better than before, or than other LLMs, at solving maths problems it doesn’t already have the answers to hardcoded), and the way it operates is to have a second LLM check its outputs. Which means it takes, IIRC, 4-5 times the energy (and therefore cost) for each answer, for a marginal improvement in functionality.

The idea that “they’ve come on in leaps and bounds over the last 3 years, therefore they will continue to improve at that rate” isn’t really supported by the evidence.

We don't need leaps and bounds, from here. We're already in science fiction territory. Incremental improvement has silenced a wide variety of naysaying.

And this is with LLMs - which are stupid. We didn't design them with logic units or factoid databases. Anything they get right is an emergent property from guessing plausible words, and they get a shocking amount of things right. Smaller models and faster training will encourage experimentation for better fundamental goals. Like a model that can only say yes, no, or mu. A decade ago that would have been an impossible sell - but now we know data alone can produce a network that'll fake its way through explaining why the answer is yes or no. If we're only interested in the accuracy of that answer, then we're wasting effort on the quality of the faking.

Even with this level of intelligence, where people still bicker about whether it is any level of intelligence, dumb tricks keep working. Like telling the model to think out loud. Or having it check its work. These are solutions an author would propose as comedy. And yet: it helps. It narrows the gap between "but right now it sucks at [blank]" and having to find a new [blank]. If that never lets it do math properly, well, buy a calculator.

I’m not saying they don’t have applications. But the idea of them being a one size fits all solution to everything is something being sold to VC investors and shareholders.

As you say - the issue is accuracy. And, as you also say - that’s not what these things do, and instead they make predictions about what comes next and present that confidently. Hallucinations aren’t errors, they’re what they were built to do.

If you want something which can set an alarm for you or find search results, then something that responds to set inputs correctly 100% of the time is better than something more natural-seeming which is right 99% of the time.

Maybe along the line there will be a new approach, but what is currently branded as AI is never going to be what it’s being sold as.

If you want something more complex than an alarm clock, this does kinda work for anything. Emphasis on "kinda."

Neural networks are universal approximators. People get hung up on the approximation part, like that cancels out the potential in... universal. You can make a model that does any damn thing. Only recently has that seriously meant you can: backpropagation works, and it works on video-game hardware.

"AI is whatever hasn't been done yet" has been the punchline for decades. For any advancement in the field, people only notice once you tell them it's related to AI, and then they just call it "AI," and later complain that it's not like on Star Trek.

And yet it moves. Each advancement makes new things possible, and old things better. Being right most of the time is good, actually. 100% would be better than 99%, but the 100% version does not exist, so 99% is better than never.

Telling the grifters where to shove it should not condemn the cool shit they're lying about.

I’m not sure we’re disagreeing very much, really.

My main point WRT “kinda” is that there are a tonne of applications that 99% isn’t good enough for.

For example, one use that all the big players in the phone world seem to be pushing at the moment is sorting your emails for you. If you rely on that and it classifies an important email as unimportant so you miss it, then that’s actually a useless feature. Either you have to check all your emails manually yourself, in which case it’s quicker to just do that in the first place and the AI offers no value, or you rely on it and end up missing something that it’s important you don’t miss.

And it doesn’t matter if it gets it wrong one time in a hundred, that one time is enough to completely negate all potential positives of the feature.

As you say, 100% isn’t really possible.

I think where it’s useful is for things like analysing medical data and helping coders who know what they’re doing with their work. In terms of search, it’s also good at “what’s the name of that thing that’s kinda like this?”-type queries. Kind of the opposite of traditional search engines, where you’re trying to find out information about a specific thing, and where I think non-Google engines are still better.

Your example of catastrophic failure is... e-mail? Spam filters are wrong all the time, and they're still fantastic. Glancing in the folder for rare exceptions is cognitively easier than categorizing every single thing one-by-one.

If there's one false negative, you don't go "Holy shit, it's the actual prince of Nigeria!"

But sure, let's apply flawed models somewhere safe, like analyzing medical data. What?

Obviously fucking not.

Even in car safety, a literal life-and-death context, a camera that beeps when you're about to screw up can catch plenty of times where you might guess wrong. Yeah - if you straight-up do not look, and blindly trust the beepy camera, bad things will happen. That's why you have the camera and look.

If a single fuckup renders the whole thing worthless, I have terrible news about human programmers.

Okay, so you can’t conceive of the idea of an email that it’s important that you don’t miss.

Let’s go with what Apple sold Apple Intelligence on, shall we? You say to Siri “what time do I need to pick my mother up from the airport?” and Siri combs through your messages for the flight time, checks the arrival time on the airline’s website, accesses Maps to get the journey time accounting for local traffic, and tells you when you need to leave.

With LLMs, absolutely none of those steps can be trusted. You have to check each one yourself. Because if they’re wrong, then the output is wrong. And it’s important that the output is right. And if you have to check the input of every step, then what do you save by having Siri do it in the first place? It’s actually taking you more time than it would have to do everything yourself.
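To put rough numbers on that chain (an illustrative assumption, not a measured figure): if each of the four steps above were independently right 99% of the time, the final answer is right only when all four are, so

$$
P(\text{correct}) = \prod_{i=1}^{4} p_i = 0.99^4 \approx 0.961
$$

which works out to roughly one wrong departure time in every 25 requests, even though every individual step is "99% reliable".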

AI assistants are being sold as saving you time and taking meaningless busywork away from you. In some applications, like writing easy, boring code, or crunching more data than a human could in a very short time frame, they are. But for the applications they’re being sold on for phones? Not without being reliable. Which they can’t be, because of their architecture.

This absolutism is jarring against your suggestion of applying the same technology to medicine.

Siri predates this architecture by a decade. And you still want to write off the whole thing as literally useless if it's ever ever ever wrong... because god forbid you have to glance at whatever e-mail it points to. Like skimming one e-mail to confirm it's from your mom, about a flight, and mentions the time... is harder than combing through your inbox by hand.

Confirming an answer is a lot easier than finding it from scratch. And if you're late to the airport anyway, oh no, how terrible. Everything is ruined forever. Burn your computers and live in the woods, apparently, because one important e-mail was skipped. Your mother had to call you and then wait comfortably for an entire hour.

Perfect reliability does not exist. No technology provides it. Even with your prior example, phone alarms - I've told Android to re-use the last timer, when I said I wanted twenty minutes, and it didn't go off until 6:35 PM, because yesterday I said that at 6:15. I've had physical analog alarm clocks fail to go off in the morning. I did not abandon the concept of time, following that betrayal.

The world did not end because a machine fucked up.

That's your interpretation.

That's reality. Unless you're deluded enough to think it's magic.

No, I meant to say your interpretation of what I said.

Everything is possible in theory. That doesn't mean everything has happened or is just about to happen.

To get better it would need better training data. However there are always more junior devs creating bad training data, than senior devs who create slightly better training data.

And now LLMs being trained on data generated by LLMs. No possible way that could go wrong.

My hobby: extrapolating.