Anthropic's Claude AI

Anthropic's Claude AI is a next-generation AI assistant that can power a wide variety of conversational and text processing tasks. It's been rigorously tested with key partners like Notion, Quora, and DuckDuckGo and is now ready for wider use.

Claude can help with tasks including summarization, search, creative and collaborative writing, Q&A, coding, and more. Early adopters report that Claude is less likely to produce harmful outputs, easier to converse with, and more steerable. Claude can also be directed on personality, tone, and behavior.

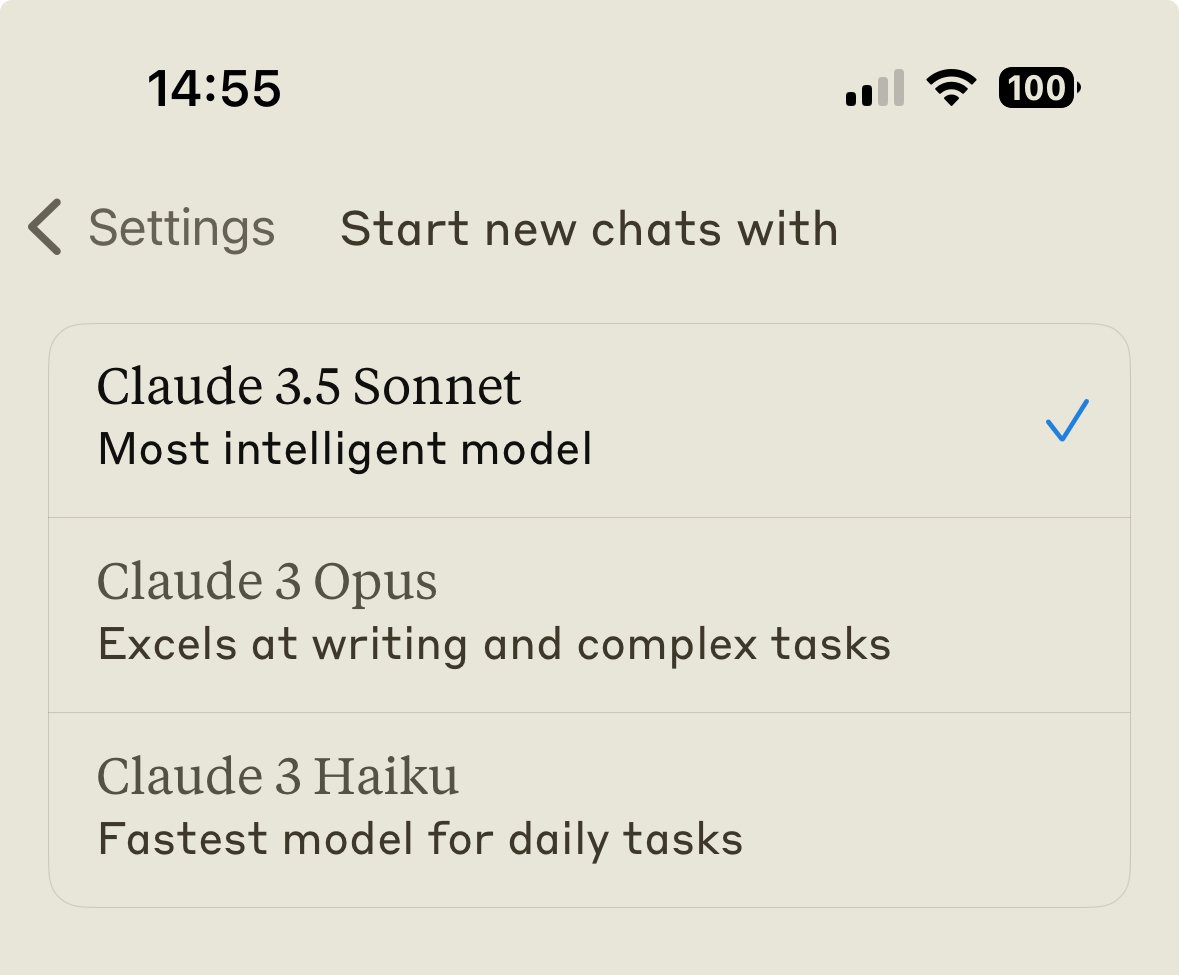

There are two versions of Claude: Claude and Claude Instant. Claude is a high-performance model, while Claude Instant is a faster, less expensive, but still efficient version.

Claude has been successfully integrated into various platforms:

- Quora offered Claude to users through Poe, an AI chat app, where users found Claude's answers to be detailed and easily understood.

- Notion users have been benefiting from Claude's creative writing and summarization abilities to increase their productivity.

- Slack has partnered with Anthropic to integrate Claude.

Businesses or individuals interested in using Claude can request access here.

it happening as part of the script

You can see from interaction 6/7 below that Claude thinks it did respond to these queries.
