“Vibe coding is a nightmare and I’m getting ready to ban it,” said “Clint,” the CTO of a mid-sized fintech.

He’s not kidding around.

“We opened more security holes in 2025 than we did in all of 2020 to 2024. It’s a miracle we haven’t been breached yet. We keep catching flaws in regression testing – which is pretty late – and at some point we’re going to miss something, and then it’s someone’s head. Probably mine.”

In the back half of last year, I heard from a growing number of tech leaders, and current and former software developers, about their chaotic journey with AI coding in enterprise tech. It was almost always a journey that started with “vibe coding” as a first experimental step.

Now those leaders and developers are suggesting that vibe coding is just a fad, maybe a marketing ploy to sell AI coding into the enterprise, because if AI lets anyone code, it raises the question:

“How many of these expensive software developers do we really need?”

That’s a lot of smoke. I’m a former expensive software developer, a current entrepreneur, and a half-hearted vibe coder. I’m going to recklessly speculate about vibe coding based on some conspiratorial-sounding conversations I’ve had with tech leaders and experienced software developers, and see if there’s fire.

My Own Personal Hacker

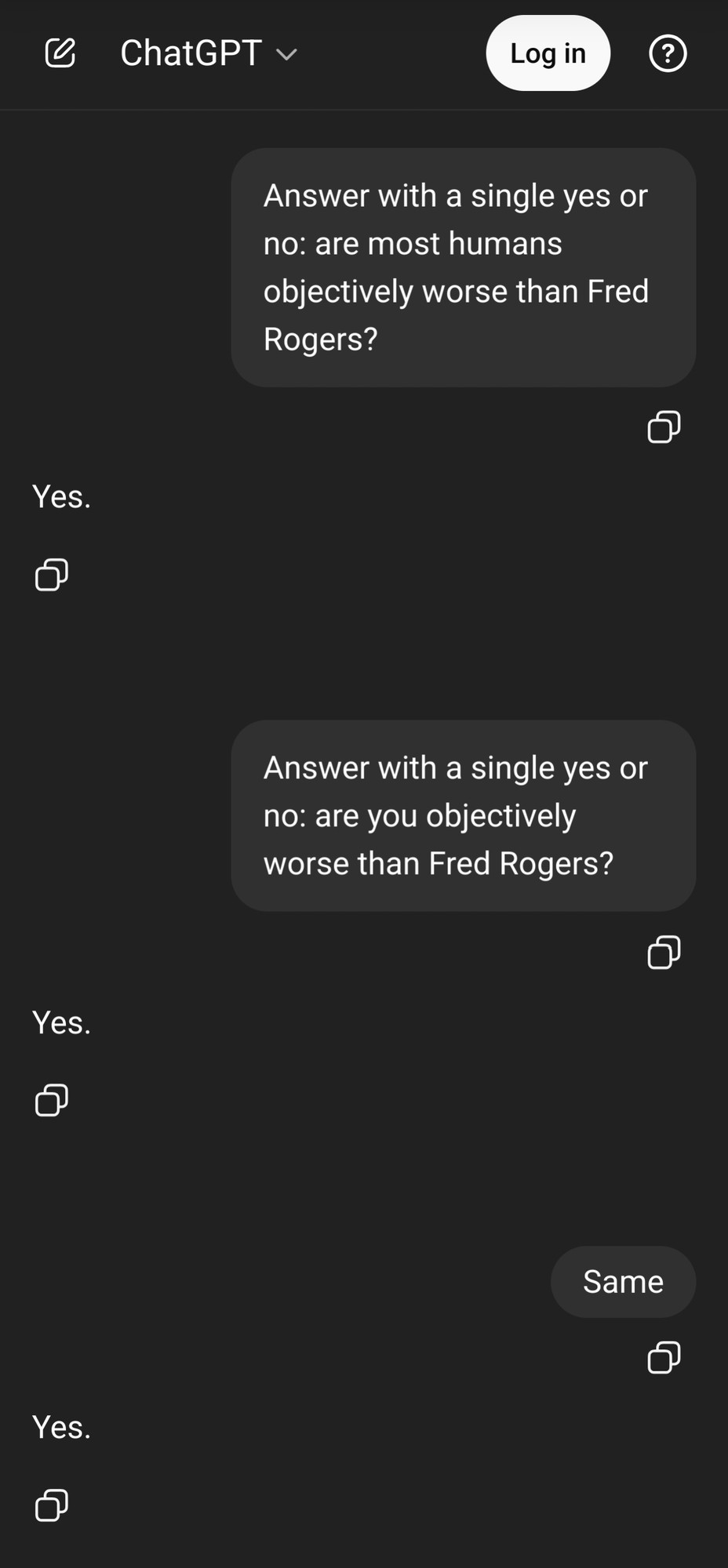

Yes. Anyone can code. But security holes are easy to open, and thus, “anyone” can also attract their own personal hacker.

Like I did. Twice.

Warning: I am a former developer and, these days, I have a standby network of current senior developers who can help me with things like security. Don’t try this at home.

Without naming names, over the last several years I’ve built a minor empire out of a no-code platform hooked up to a lot of low-code tools. I did this partly to show that anyone could do it: “Anyone can no-code!” And it worked great, and it still works today.

Except a few years ago I spent an entire summer fighting one individual, persistent hacker. No flaws to fix, no holes to patch, but I couldn’t stop him from trying to break in. The no-code platform couldn’t stop him. His ISP wouldn’t do anything. Finally, out of frustration, I just ditched the whole function and leaned totally into Stripe. More expensive, and a lot of hours lost, but no more hackers, and thankfully, no damage done.

More recently, I vibe coded my way to a beautiful web app with a sorta-simple login so people could privately self-serve demos of some of the apps I’ve built. The demos were genericized and anonymized, so there was no sensitive data to be hacked, but the hackers came anyway.

I took that app down too. Now I do demos the old-fashioned way, from my local machine over Zoom. It’s sooo 2023.

If You Vibe Code It, The Bots Will Come

In both cases, my developer friends weren’t shocked by my stories, and frankly, by the second time, neither was I. When I asked why these hackers would come after me, they told me “because you’re there,” and that the second “hacker” was almost assuredly a bot or team of bots, just sniffing out public apps and breaking into them.

“If you build it, they will come,” said my favorite CTO, Ryan Eade. What he’s talking about is a recent but rather well-known conundrum. Anyone can code, but the minute they go live, they’re setting themselves up for security failures that these vibe-coding tools just weren’t built to handle.

So knowing this, why were the AI platforms pushing vibe coding so hard, and why wasn’t anyone screaming about the security nightmare that was unfolding across the internet?

Well, I’m being facetious. They were screaming. Hell, I was too. It’s just that no one was listening because everyone was too busy building the next billion-dollar vibe-coded app.

Or too busy firing senior developers they believed they no longer needed.

Here’s where it gets spicy.

The Old Man and the C++

I’ve been wanting to use that awful pun for months now. My editor will love it.

Over the last year-plus, as I’ve been documenting the decimation in the tech industry, including, specifically, the gutting of senior talent from tech teams, I’ve collected a following of these former developer cast-offs.

Let’s face it. They’re mostly old men, or slightly older men, and definitely a lot more women than before, though fewer of them in the 40+ age range. These are the folks with 15-20 years of experience. They were and are the hardest hit by tech disemployment, and they’re pretty sure they know why.

“[They] gave [AI coding] to us,” said “Merlin,” one of the middle-aged former developers who follows me. “They made us prove we should keep our jobs. We did. I did, I know I did. But then they lopped off the top… 60 percent or so of our team. By experience. They claimed ‘streamlining for the future’ but it was all us greybeards that got the ax.”

Merlin is not alone. His is one of dozens of similar stories I’ve heard over the last 18 months. And while there are a lot of reasons (a lot of reasons) for companies to do mass layoffs in tech these days, a number of these folks are now coming around to the view that, in retrospect, the push into AI coding was designed to push them out.

“It’s not that the tools were passing us by,” Merlin said. “The tools were pretty cool and I picked them up quickly. It was more about ‘If we have AI, why do we need the programmers?’”

The Answer Is Security

Clint is still trying to shove vibe coding back into the tube it came from. I asked him what he thought about the vibe-coding wave being promoted to push senior talent out in favor of AI coding tools.

“I don’t know,” he said. “I don’t see how anyone is doing this at scale who isn’t completely versed in infrastructure, security, privacy, overall data governance.”

Clint’s company didn’t lop off any heads, but now they’ve got standards in place for when and how AI coding agents are to be used. He’s frustrated, but at least at this point he’s putting out dying embers, not roaring fires.

I asked him why he didn’t take a more cautious approach at the beginning. He paused for a few seconds.

“OK. Honestly, there were a couple reasons. I’ll admit I was just as excited to get into AI as anyone else. But also, there’s this nagging pressure, like, if we don’t pick this stuff up quickly, we’re going to get left behind by our competitors who do.”

He laughed. “Or some kid in a dorm room who rebuilds our entire business over a weekend.” Then he paused again. “I know that’s not going to happen.”

Vibe Coding Wasn’t a Ruse, But It Left a Mark

Of course it won’t. But it’s that fear that drove a lot of companies to dump talent overboard and sink those dollars into an “AI first” infrastructure. And it’s still happening today. A kid in a dorm room isn’t going to replace a 100-person tech team overnight. But why carry that liability when 80 techies will do? Or 50? Or 10?

“There are a lot of us,” Merlin said, referring to the growing number of senior, now unemployed developers on the sidelines. “Maybe a kid can’t rebuild a business in a weekend, but I feel like a few of us could do something like that. It’d be sweet payback to take on these companies with their own strategy.”

Ultimately, I think vibe coding took off on its own, riding the same wave that made me stand up my own apps. There’s no question some tech companies also rode that wave and used it as an excuse to take drastic action to assuage those nagging fears. And they got a better bottom line to boot.

Just remember, in their wake, they left a growing, experienced, motivated army of developers who are much more efficient with these AI coding tools, and they aren’t interested in “vibes” so much as “disruption.”

I’ll talk about some of the more disruptive approaches in AI coding in future posts. Now would be a good time to join my email list, a growing army of professionals who want a unique take on the hype and the histrionics.